Computational Analysis of Digital Communication

Week 1: Introduction to Computational Methods in Communication Science

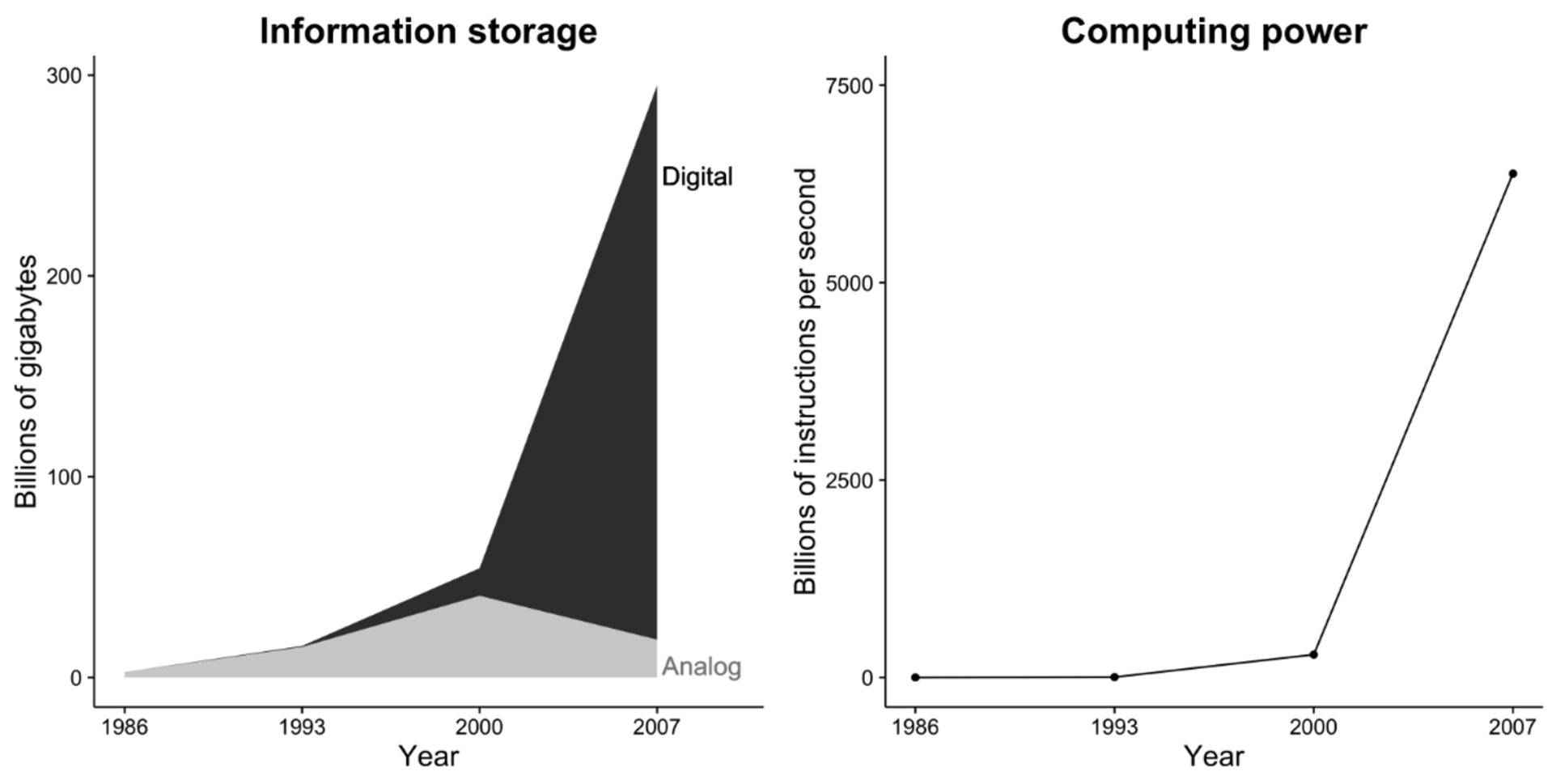

Increasing amount of data available online

Hilbert & Lopez, 2011

Much of what we know about human behavior…

…is based on what people tell us:

- in self-report measures in surveys

- in responses in experimental research

- in qualitative interviews

Note: Although valuable, such measurements can be biased (Scharkow, 2016; Parry et al., 2021)!

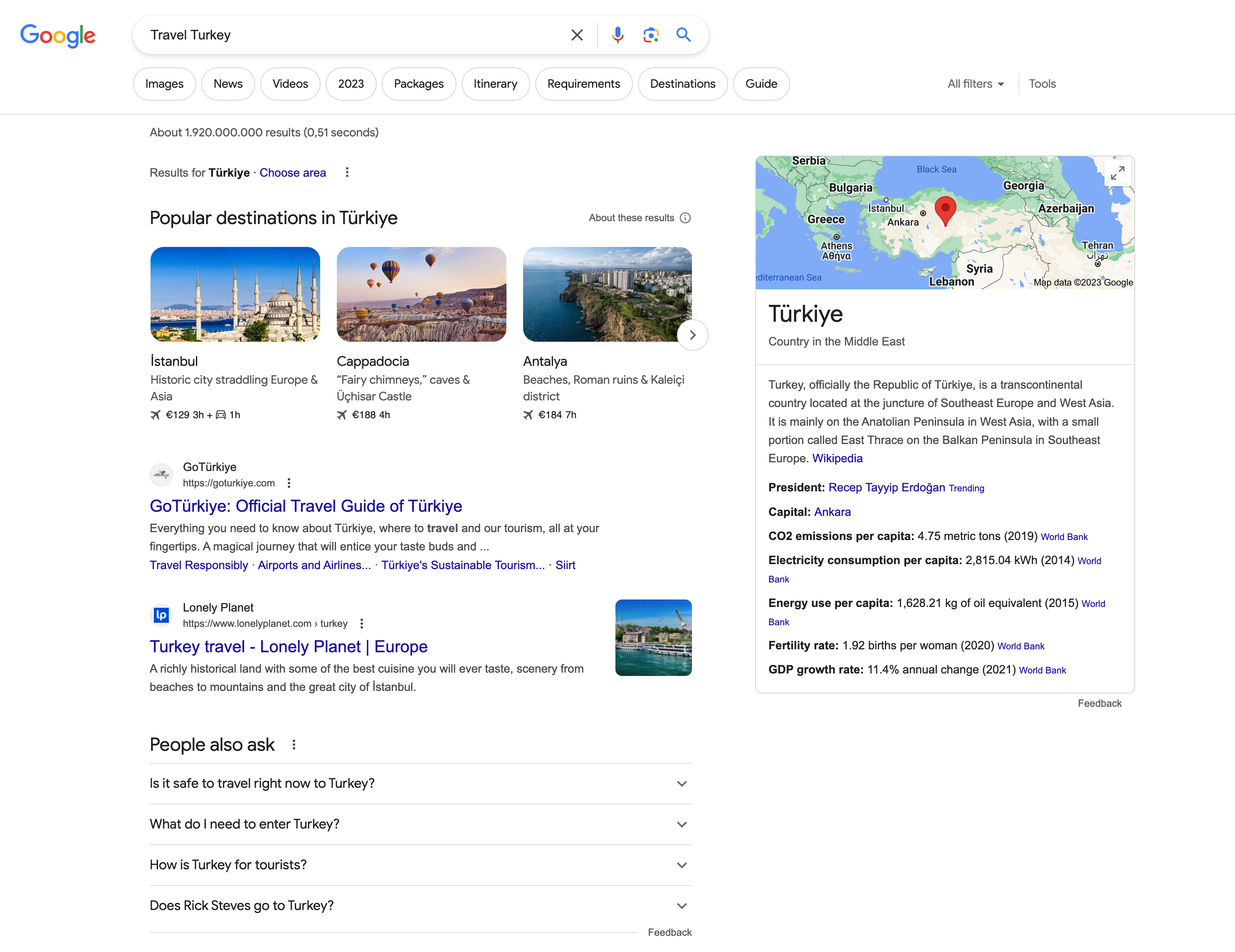

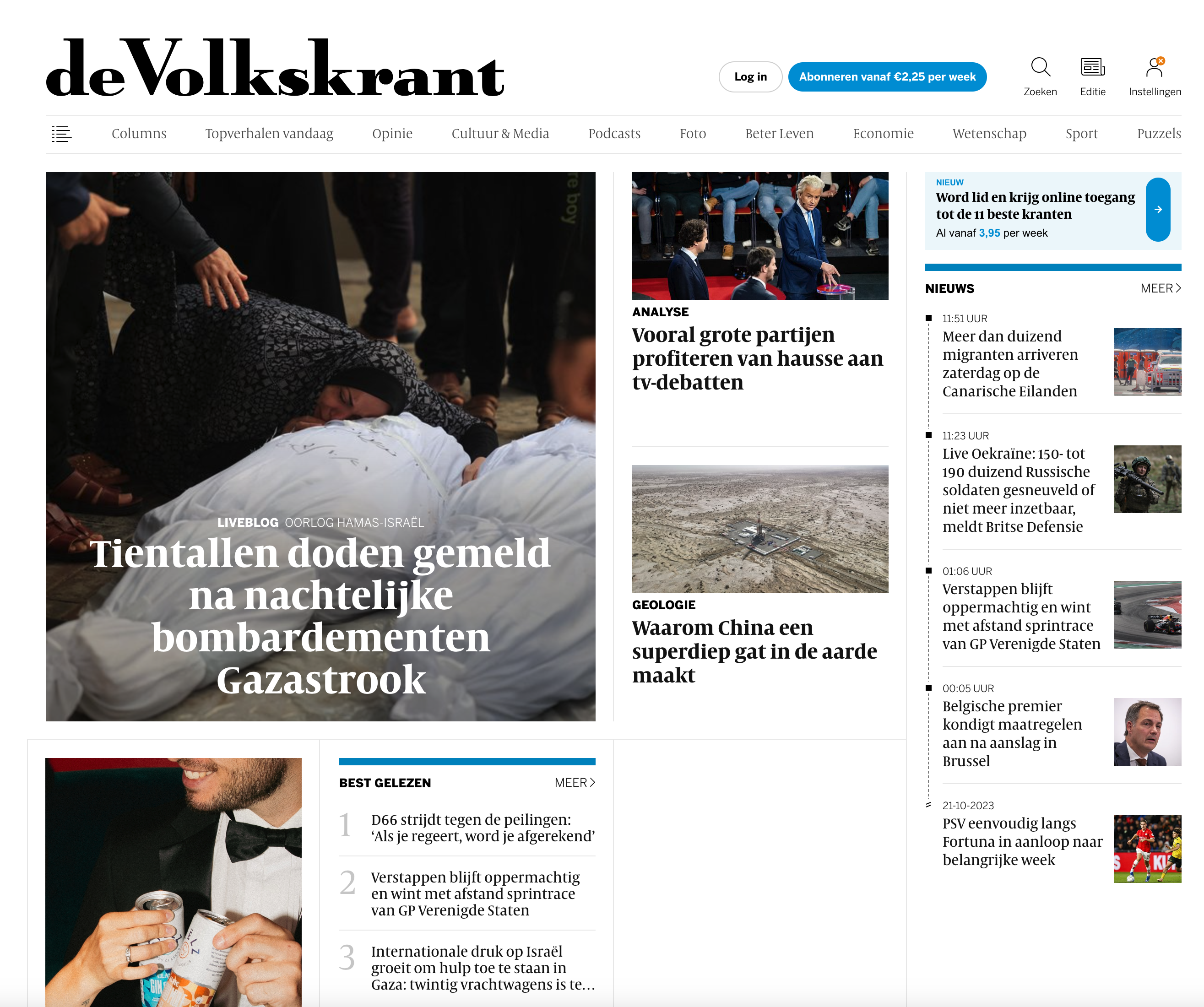

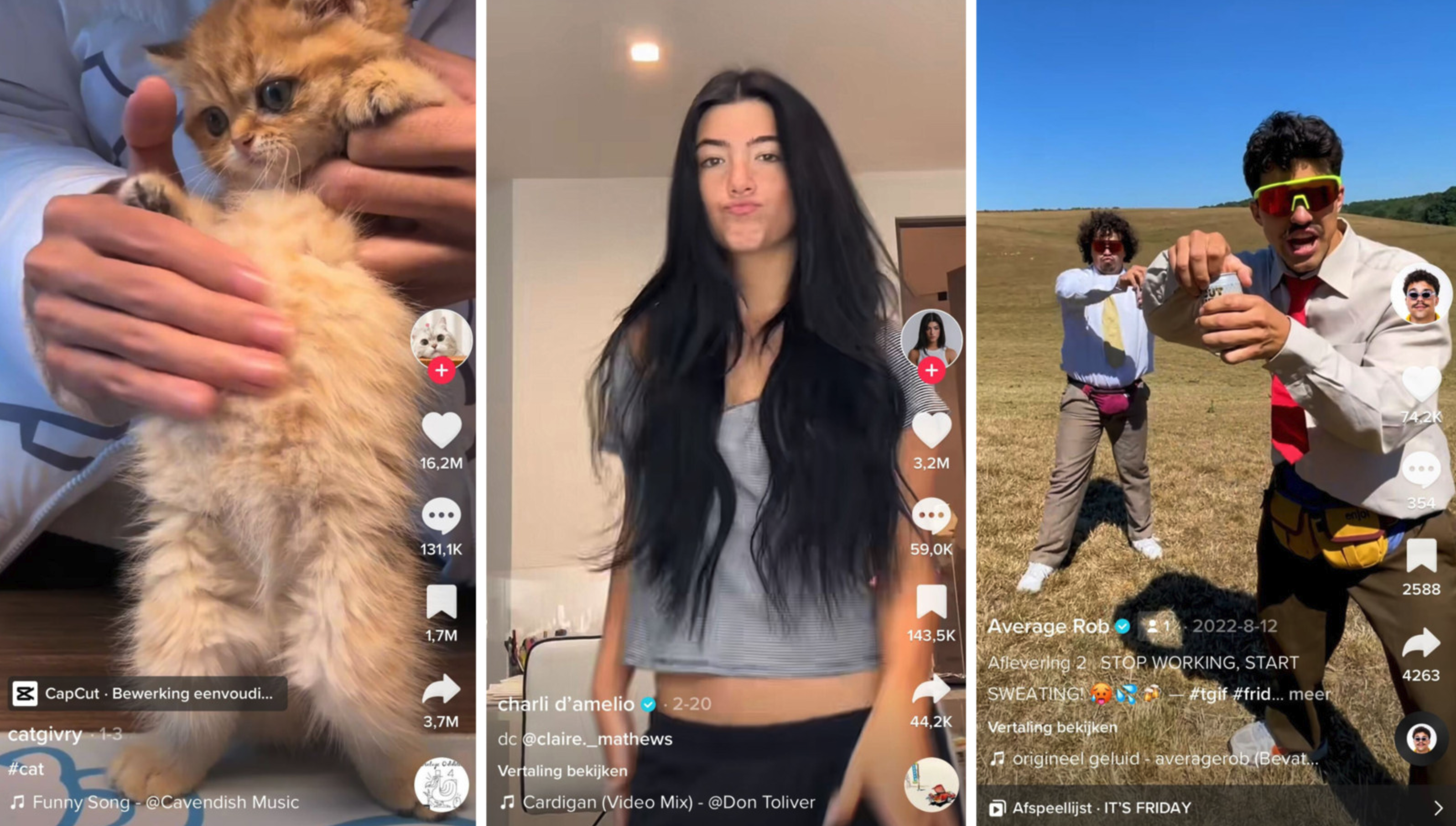

But a lot of (mass) communication looks like this…

…or is based on user-generated content

How can we analyze large amounts of text?

This is what we will discuss in this course!

Objectives and Learning goals

After completion of the course, you will…

be able to identify data analytic problems, analyze them critically, and find appropriate solutions

have a good understanding of the general text classification pipeline

have practical knowledge about different approaches to text classification (incl. dictionary approaches, machine learning, large language models…)

Skills and methods

With regard to the specific methods being taught in R, you will be able to…

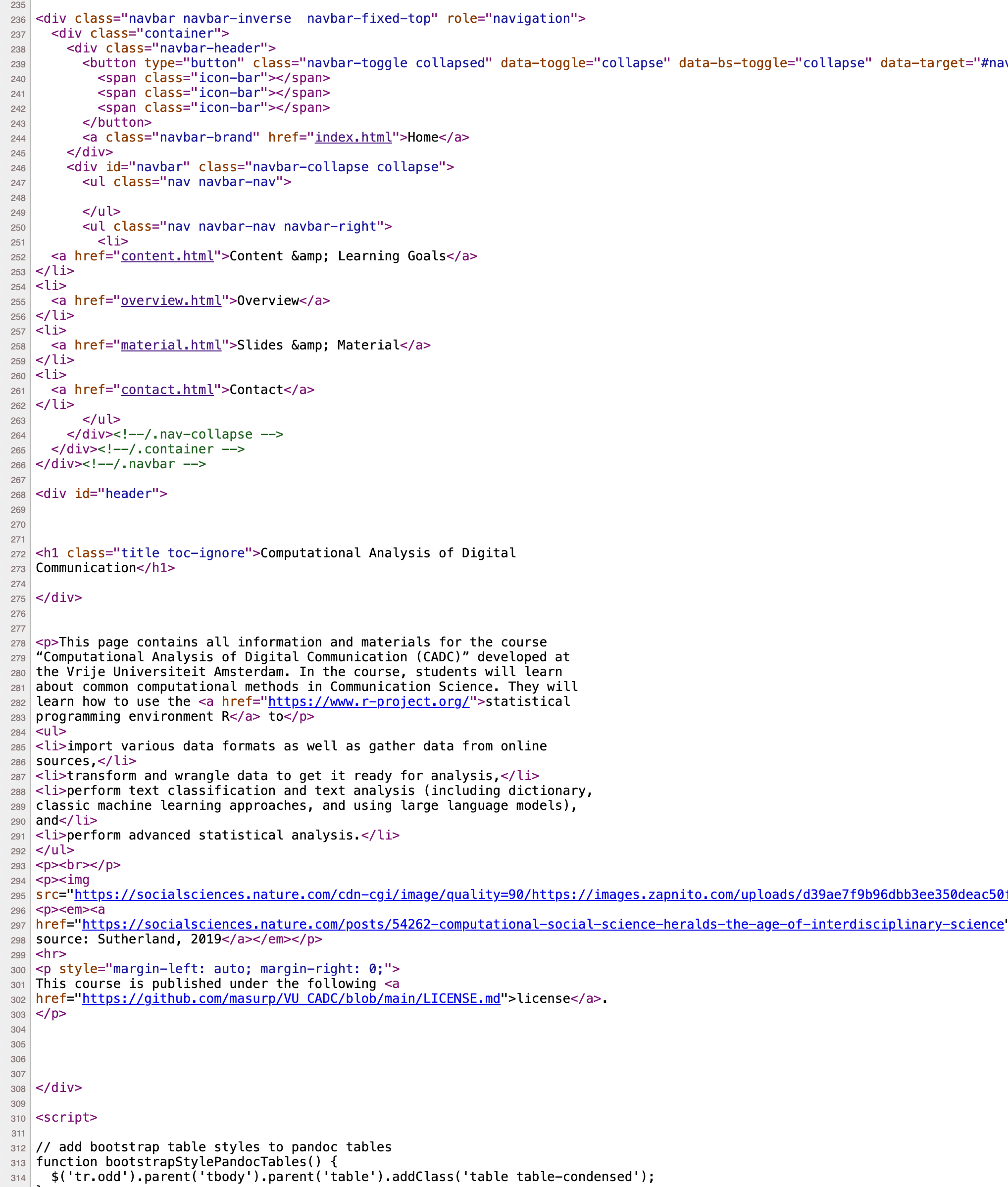

gather, scrape, and import data from different file types, APIs, and websites

link data from different sources to create new insights

clean and transform messy data into a tidy data format ready for text classification and analysis

use different approaches (e.g., dictionaries, classic machine learning, transformers, LLMs) to extract information from textual data

perform statistical analyses on the substantive data

Who am I?

Assistant Professor of Communication Science

Research Interests

- Privacy & Data Protection Online

- Social Influence and Contagion

- Media Literacy

Methodological Interests

- Scale Development and Validation

- Bayesian Statistics

- Flexibility in Data Analysis

- Computational Methods and Machine Learning

More info: www.philippmasur.de

Content of this lecture

What is Computational Social Science?

1.1. Definition and Examples

1.2. Relevance of Computational Methods

1.3. Characteristics of Big Data

1.4. Opportunities and Pitfalls

What is Computational Communication Science?

2.1. Definition

2.2. Typical Research Areas

2.3. Examples of Computational Communication Research

Introduction to Automated Text Analysis

3.1. Text as Data

3.2. The General Text Classification Pipeline

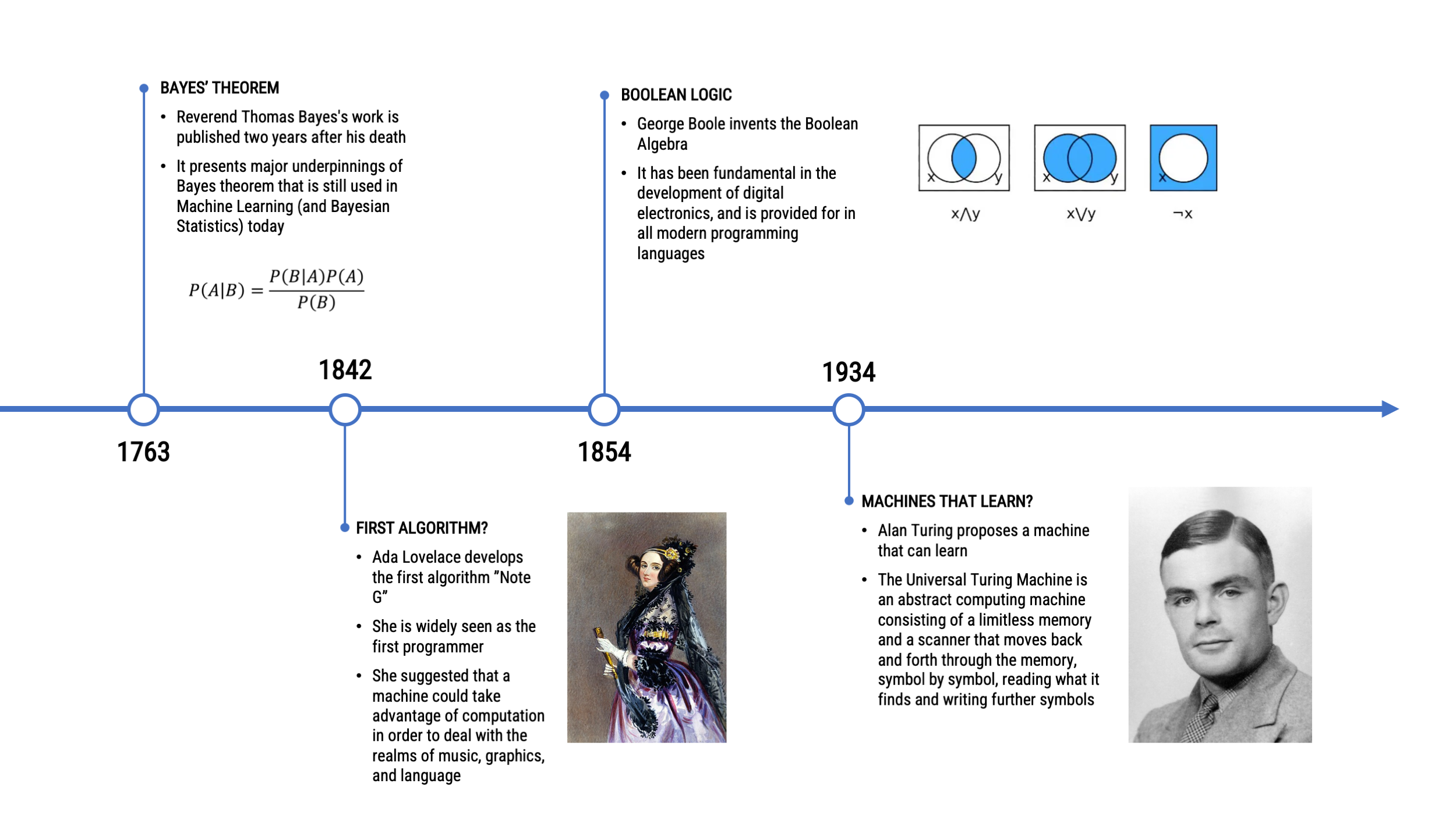

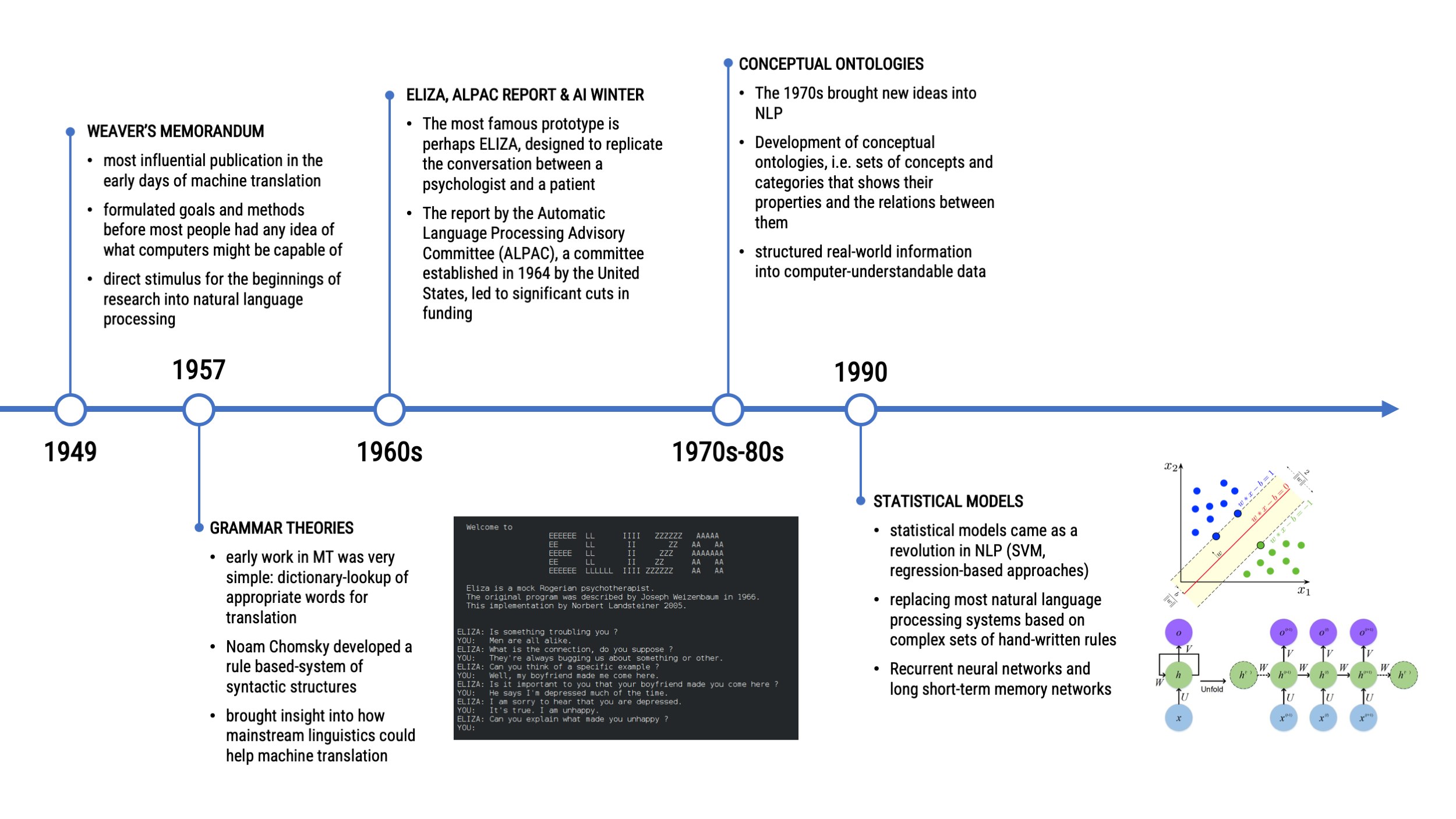

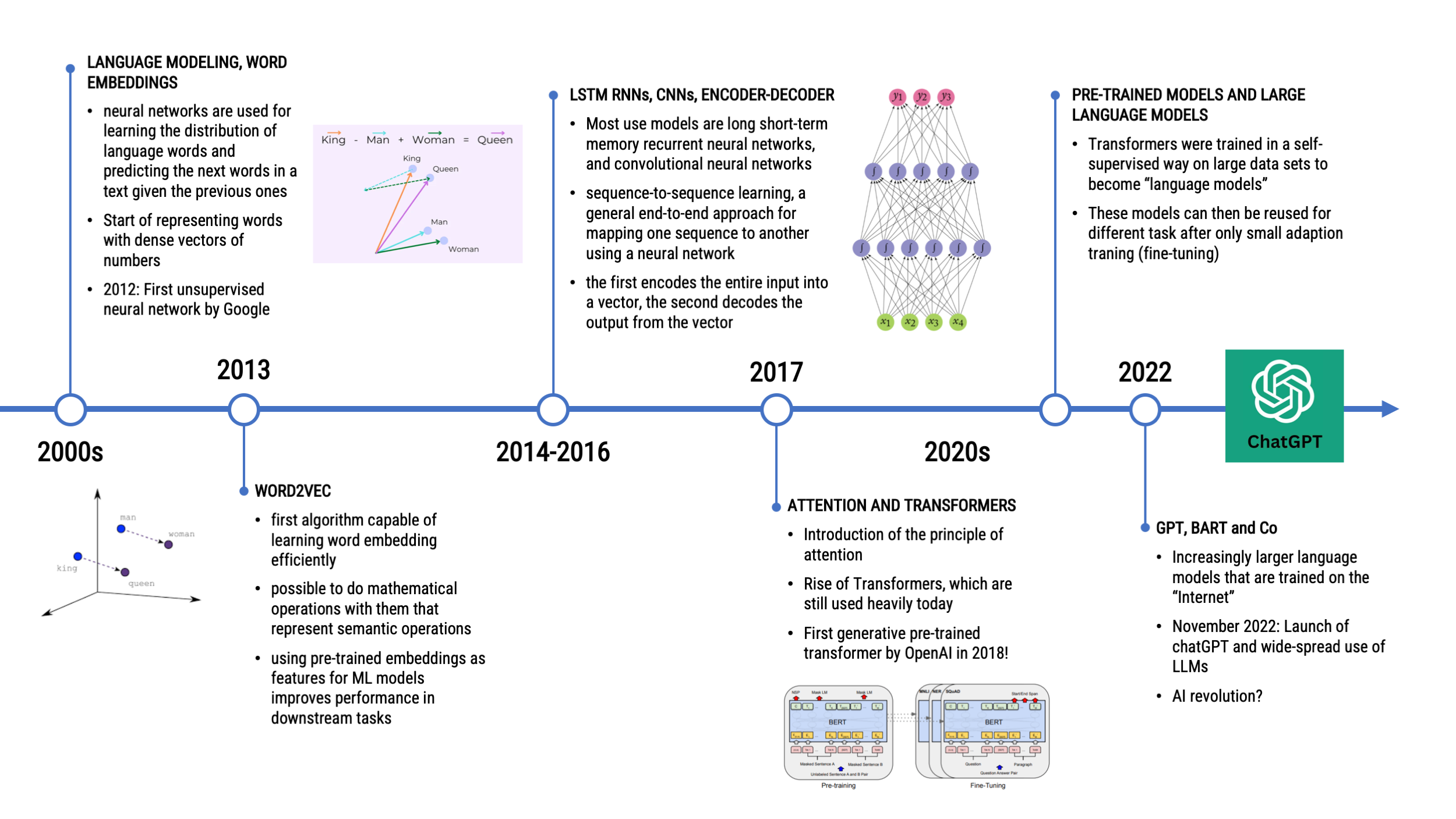

3.3. Timeline of Natural Language Processing

3.4. A Small Example

Ethics of Computational Communication Research

4.1. A Controversial Study (Kramer et al., 2014)

4.2. Ethical Challenges

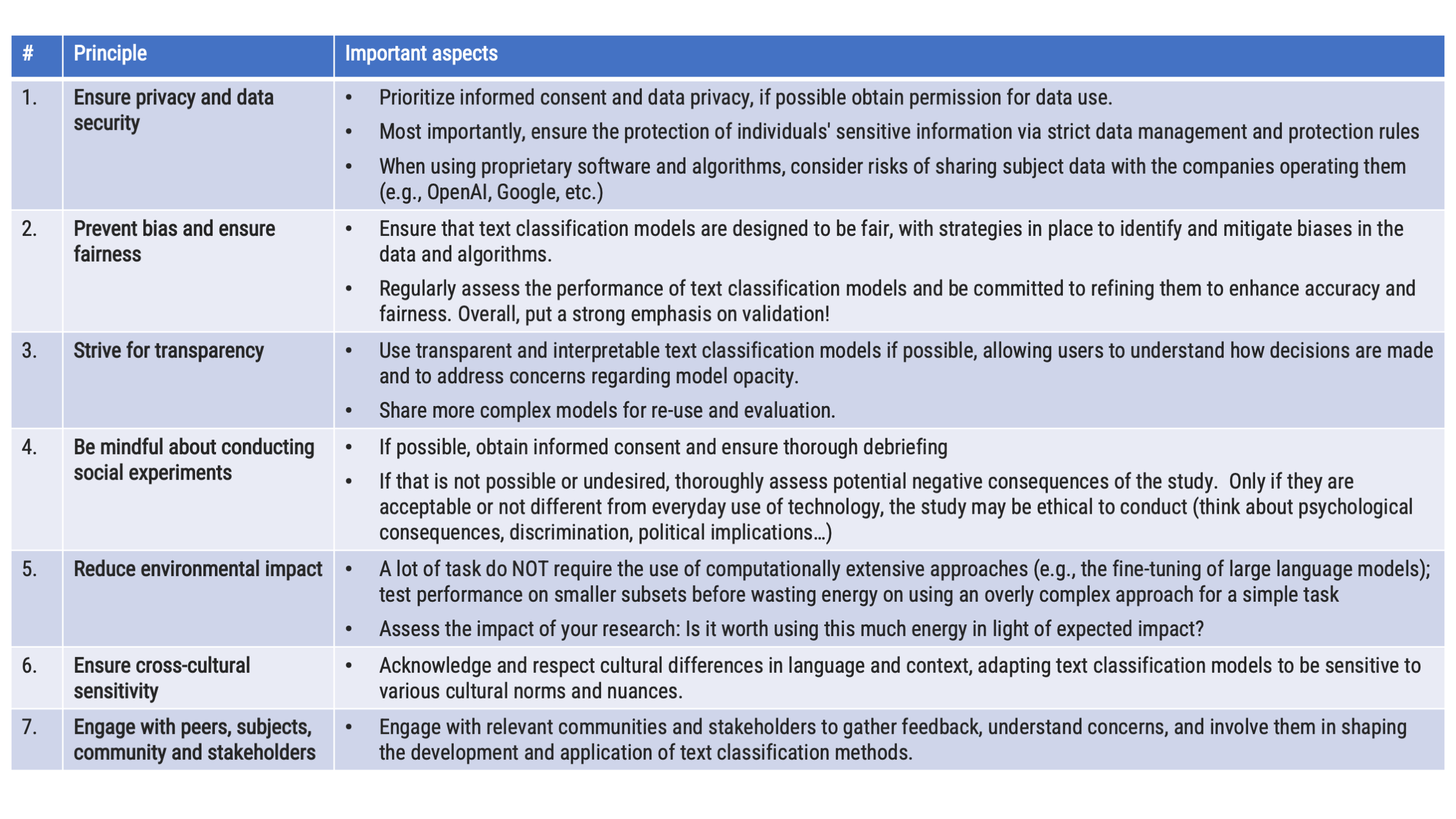

4.3. Practical Guidelines

Course Formalities

5.1. Introduction of Teachers

5.2. Course Material and Schedule

5.3. Attendance and Assignments

What is Computational Social Science?

…and why should we care?

Example: Surprising Sources of Information

In 2009, researchers wanted to study wealth and poverty in Rwanda

They conducted a survey with a random sample of 1,000 customers of the largest mobile phone provider

They collected demographic, social, and economic characteristics (incl. wealth)

So far, traditional social science, right?

The authors also had access to complete call records from 1.5 million people

Combining both data sources, they used the survey data to “train” a machine learning model to predict a person’s wealth based on their call records

They also estimated the places of residence based on the geographic information embedded in call records

Blumenstock, Cadamuro, & On, 2015
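The logic of this example (learn the relationship between call records and wealth from the small surveyed sample, then predict wealth for everyone else) can be sketched in a few lines of Python. This is only a toy illustration under strong assumptions: the numbers are invented, the single predictor "calls per week" is hypothetical, and a one-variable linear regression stands in for whatever model the authors actually used.

```python
# Toy illustration only: all numbers are invented, not data from the study.

def fit_simple_regression(xs, ys):
    """Ordinary least squares with one predictor: returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Call-record feature available for everyone (hypothetical: calls per week).
call_records = {"p1": 10, "p2": 20, "p3": 30, "p4": 40, "p5": 25, "p6": 5}

# Survey-based wealth index, available only for the small surveyed sample.
survey_wealth = {"p1": 1.0, "p2": 2.0, "p3": 3.0, "p4": 4.0}

# Step 1: "train" the model on people who appear in both data sources.
surveyed = sorted(survey_wealth)
intercept, slope = fit_simple_regression(
    [call_records[p] for p in surveyed],
    [survey_wealth[p] for p in surveyed],
)

# Step 2: predict wealth for people who were never surveyed.
predicted_wealth = {
    person: intercept + slope * calls
    for person, calls in call_records.items()
    if person not in survey_wealth
}
print(predicted_wealth)
```

The key design idea is that the expensive measurement (the survey) is needed only for a small sample, while the cheap digital trace covers the whole population.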

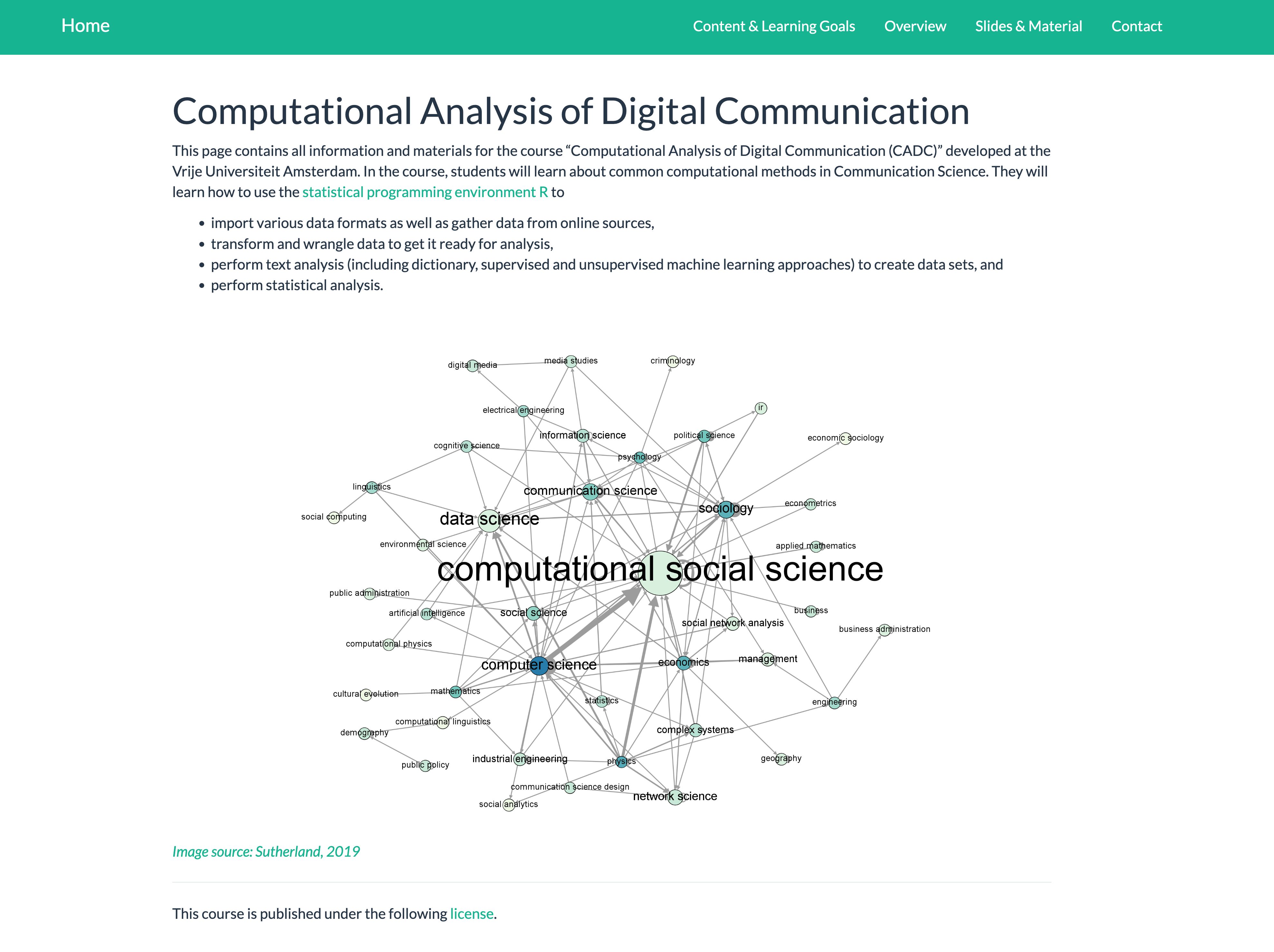

Computational Social Science

Field of social science that uses algorithmic tools and large/unstructured data to understand human and social behavior

Complements rather than replaces traditional methodologies: Methods are not the goal, but contribute to data generation

Includes methods such as:

- Data mining (e.g., scraping and gathering of large data sets)

- Software development for social science experiments

- Automated text analysis (e.g., sentiment analysis, keyword extraction, dictionary approaches)

- Image classification (e.g., face recognition, visual topic modeling)

- Machine learning approaches (e.g., for classification, prediction, topic modeling)

- Actor-based modeling (e.g., simulation of social behavior, spreading of information)

- …

Why is this important now?

Vast amounts of digitally available data, ranging from social media messages and other digital traces to web archives and newly digitized newspaper and other historical archives

Large-scale records (big data) of persons or businesses are created constantly

Powerful and comparatively cheap processors, and easy-to-use computing infrastructure for processing these data

Improved tools to analyze this data, including network analysis methods and automatic text analysis methods such as supervised text classification, topic modeling, word embeddings, as well as large language models

10 Characteristics of Big Data

| # | Characteristic | Description |

|---|---|---|

| 1 | Big | The scale or volume of some current data sets is often impressive. However, big data sets are not an end in themselves, but they can enable certain kinds of research including the study of rare events, the estimation of heterogeneity, and the detection of small differences |

| 2 | Always-on | Many big data systems are constantly collecting data and thus make it possible to study unexpected events and to measure in real time |

| 3 | Nonreactive | Participants are generally not aware that their data are being captured or they have become so accustomed to this data collection that it no longer changes their behavior. |

| 4 | Incomplete | Most big data sources are incomplete, in the sense that they don’t have the information that you will want for your research. This is a common feature of data that were created for purposes other than research. |

| 5 | Inaccessible | Data held by companies and governments are difficult for researchers to access. |


| 6 | Nonrepresentative | Despite their size, most big data sets are not representative of certain populations. Out-of-sample generalizations are hence difficult or impossible. |

| 7 | Drifting | Many big data systems are changing constantly, thus making it difficult to study long-term trends |

| 8 | Algorithmically confounded | Behavior in big data systems is not natural; it is driven by the engineering goals of the systems. |

| 9 | Dirty | Big data often includes a lot of noise (e.g., junk, spam, spurious data points…) |

| 10 | Sensitive | Some of the information that companies and governments have is sensitive. |

Salganik, 2018, chap. 2.3

Pros and Cons of Computational Methods

Opportunities

- We can study actual behavior instead of simply self-reports

- We can study human beings in their social context instead of in an artificial lab setting

- We can increase our N (higher power)

- Potential to uncover patterns and insights that we couldn’t investigate before

Pitfalls

- Techniques often (rather) complicated

- Data is often proprietary (not shared openly)

- Samples are often biased

- Data often come with insufficient metadata

- Risk of no longer understanding the models we use (black box)

Computational Communication Science

Why computational methods are important for communication research…

Definition

“Computational Communication Science (CCS) is the label applied to the emerging subfield that investigates the use of computational algorithms to gather and analyze big and often semi- or unstructured data sets to develop and test communication science theories”

Van Atteveldt & Peng, 2018

Typical research areas

Computational communication science studies thus usually involve:

- large and complex data sets

- consisting of digital traces and other “naturally occurring” data

- requiring algorithmic solutions to analyze (e.g., machine learning, LLMs)

- allowing the study of human communication by applying and testing communication theory

Political Communication

- Democratization and Polarization

- Hate Speech

Social Media Use

- Tracking of actual social media use

- Spreading of behavior, information, or emotions

Health Communication

- Prevalence of health information online

(Online) Journalism

- News coverage across decades

- Gender equality

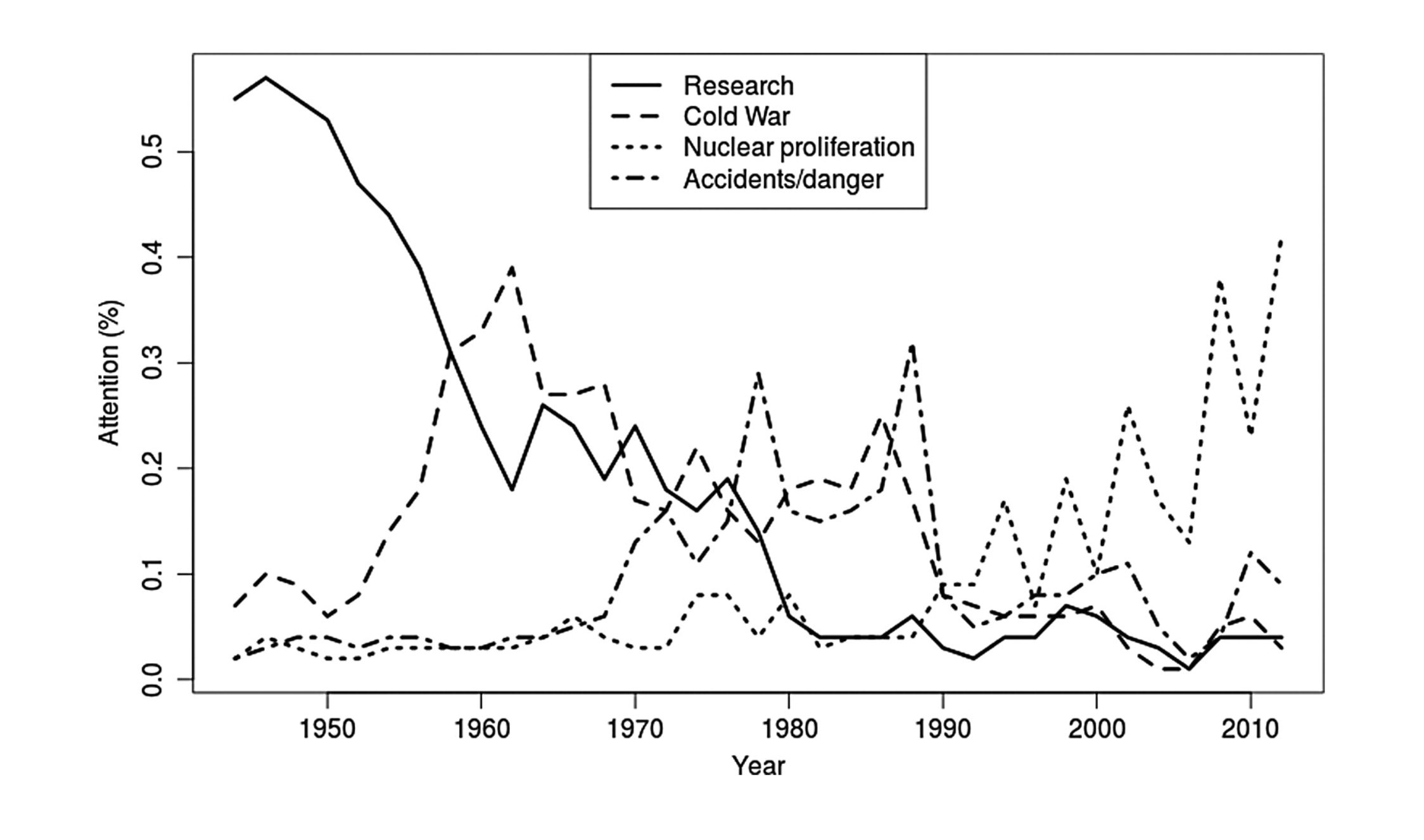

Example 1: Analyzing News Coverage

Jacobi and colleagues (2016) analyzed the coverage of nuclear technology from 1945 to 2014 in the New York Times

Analysis of 51,528 news stories (headline and lead): Way too much for human coding!

Used “LDA topic modeling” to extract latent topics and analyzed their occurrence over time

Example 2: Facebook Data to Predict Personality

Kosinski and colleagues (2013) used a dataset of over 58,000 volunteers who provided their Facebook Likes, detailed demographic profiles, and the results of several psychometric tests

Were able to show that one can predict a variety of personal characteristics and personality traits from simple Facebook likes

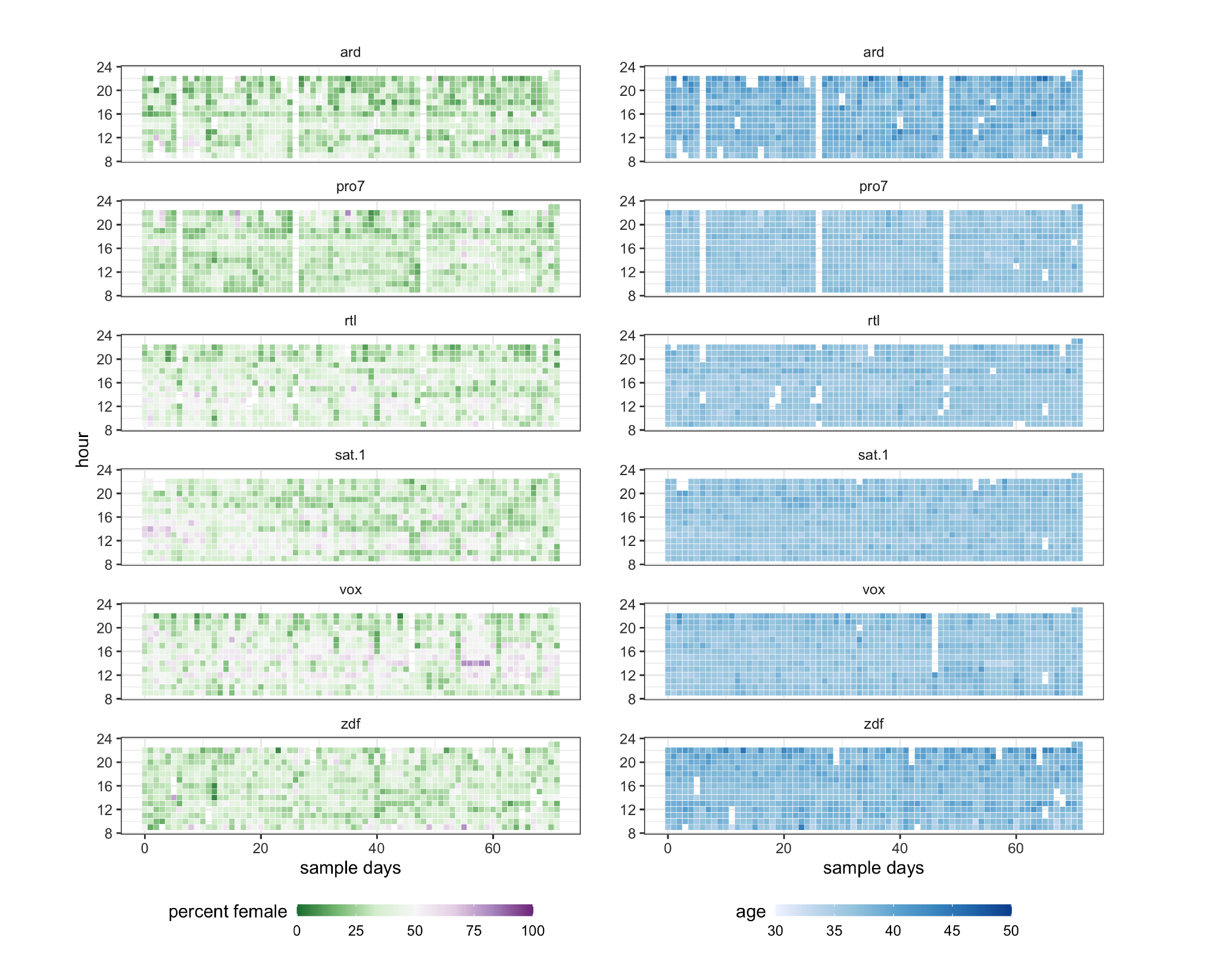

Example 3: Gender representation in TV

Women on average remained underrepresented on TV, with 6.3 million female faces out of 16 million total (estimated proportion .39, 95% CI: .37-.42)

This strong overall bias was mirrored across specific subsamples (news, sports, advertising…)

Introduction to Automated Text Analysis

The core topic of this course!

A “new” kind of data

A lot of communication is encoded in texts

But text does not look like data we can easily analyze…

Experimental data

Traditional text analysis

Choose the texts that contain the content one wants to analyze (1)

Define the units and categories of analysis (2) & (3)

Describe categories and develop a set of rules for the manual coding process (4)

Code the text according to the rules (5), which usually requires a lot of manual work

Make sense of codes (6) and rework the codes and rules (7) and redo the analysis

Analyze frequencies, relationships, differences, similarities between units/codes

Problem: Requires a lot of work and there are always more texts than humans can possibly code manually!

Definition

Text analysis is “a research technique for making replicable and valid inferences from texts (or other meaningful matter) to the contexts of their use”

Krippendorff, 2004

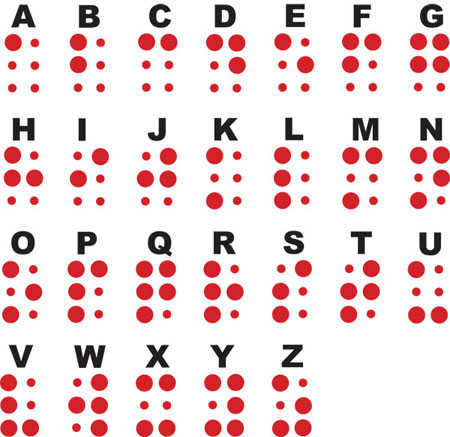

What is text?

But text can also look very different

Symbols and Meaning

Text consists of symbols

Symbols by themselves do not have meaning

A symbol itself is a mark, sign, or word that indicates, signifies, or is understood as representing an idea, object, or relationship

Symbols thereby allow people to go beyond what is known or seen by creating linkages between otherwise very different concepts and experiences

Text (a collection of symbols) only attains meaning when interpreted (in its context)

Main challenge in Automatic Text Analysis: Bridge the gap from symbols to meaningful interpretation

Understanding language

“As natural language processing (NLP) practitioners, we bring our assumptions about what language is and how language works into the task of creating modeling features from natural language and using those features as inputs to statistical models. This is true even when we don’t think about how language works very deeply or when our understanding is unsophisticated or inaccurate […] We can improve our machine learning models for text by heightening that knowledge.”

Hvitfeldt & Silge, 2021

A short overview of linguistics

Each field studies a different level at which language exhibits organization

When we engage in text analysis, we use these levels of organization to create language features (e.g., tokens, n-grams,…)

In classic machine learning, these features often depend on the morphological characteristics of language, such as when text is broken into sequences of characters, words, or sentences

In modern approaches (e.g., using LLMs), syntactic and pragmatic characteristics are also involved. In the case of audio-to-text transformation, phonetic or phonological characteristics matter as well!

Hvitfeldt & Silge, 2021 (chap. 1)

| Subfield | What does it focus on? |

|---|---|

| Phonetics | Sounds that people use in language |

| Phonology | Systems of sounds in particular languages |

| Morphology | How words are formed |

| Syntax | How sentences are formed from words |

| Semantics | What sentences mean |

| Pragmatics | How language is used in context |

Morphology

When we build text classification models for text data, we use these levels of organization to create natural language features (i.e. predictors for our models)

Which features we extract often depends on the morphological characteristics of language, such as when text is broken into sequences of characters

Yet, because the organization and the rules of language can be ambiguous, our ability to create text features for machine learning is constrained by the very nature of language

| Type of Feature | Example |

|---|---|

| Sentence | “Include Your Children When Baking Cookies” |

| Word | “Include”, “Your”, “Children”, “When”, “Baking”, “Cookies” |

| Bigrams | “Include Your”, “Your Children”, “Children When”, “When Baking”, “Baking Cookies” |

| n-grams (here n = 3) | “Include Your Children”, “Your Children When”, “Children When Baking”, “When Baking Cookies” |
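How such features are derived from a sentence can be sketched in Python as follows. This is a minimal illustration, assuming a naive whitespace tokenizer and overlapping n-grams; the helper names `tokenize` and `ngrams` are made up for this example and do not come from any particular package.

```python
def tokenize(text):
    """Split text into word tokens on whitespace (a deliberately naive tokenizer)."""
    return text.split()

def ngrams(tokens, n):
    """Return all overlapping sequences of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

sentence = "Include Your Children When Baking Cookies"
words = tokenize(sentence)    # word-level features (unigrams)
bigrams = ngrams(words, 2)    # every pair of adjacent words
trigrams = ngrams(words, 3)   # every run of three adjacent words

print(words)
print(bigrams)
print(trigrams)
```

A sentence with k words yields k - n + 1 overlapping n-grams, so this six-word sentence produces five bigrams and four trigrams.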

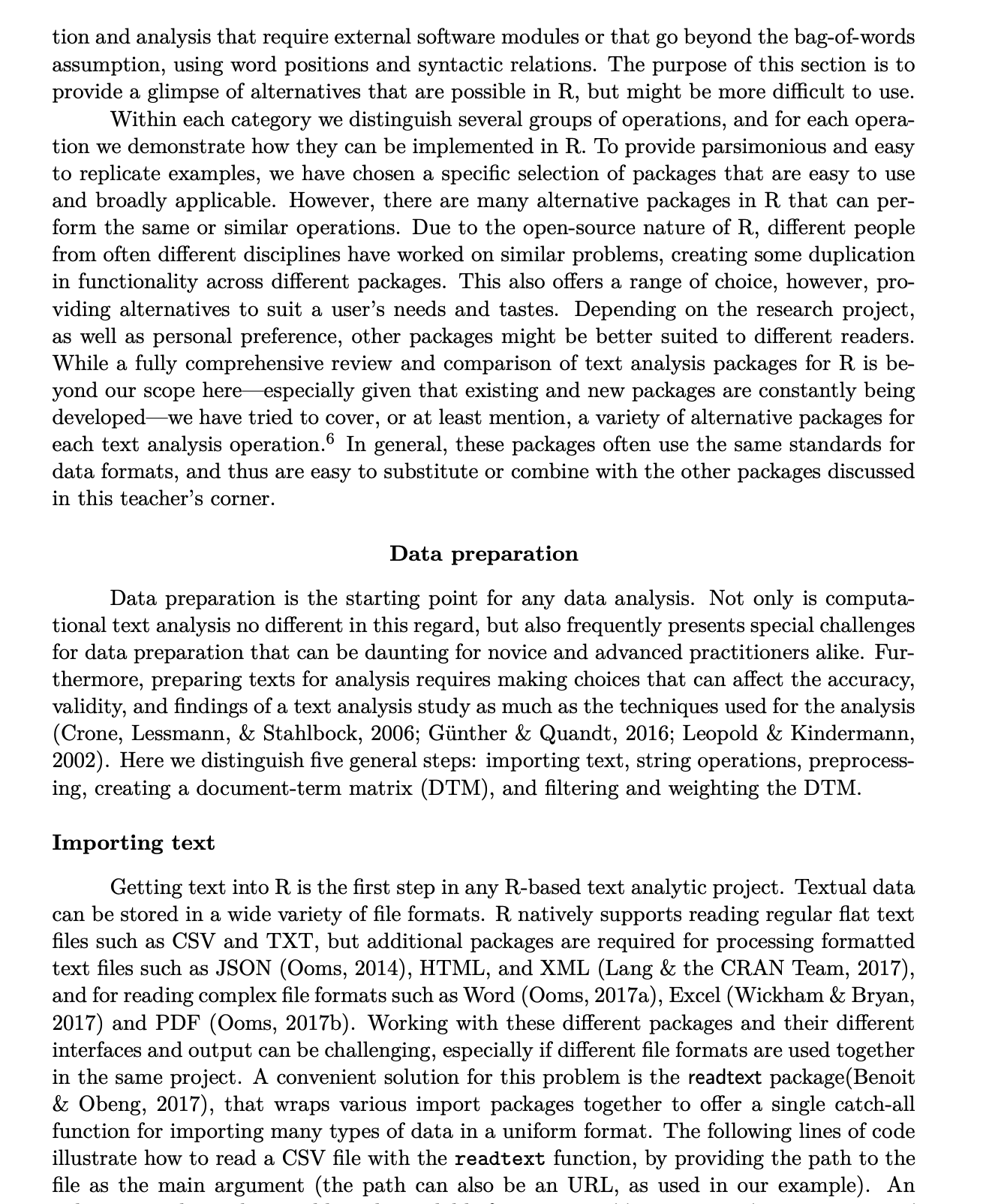

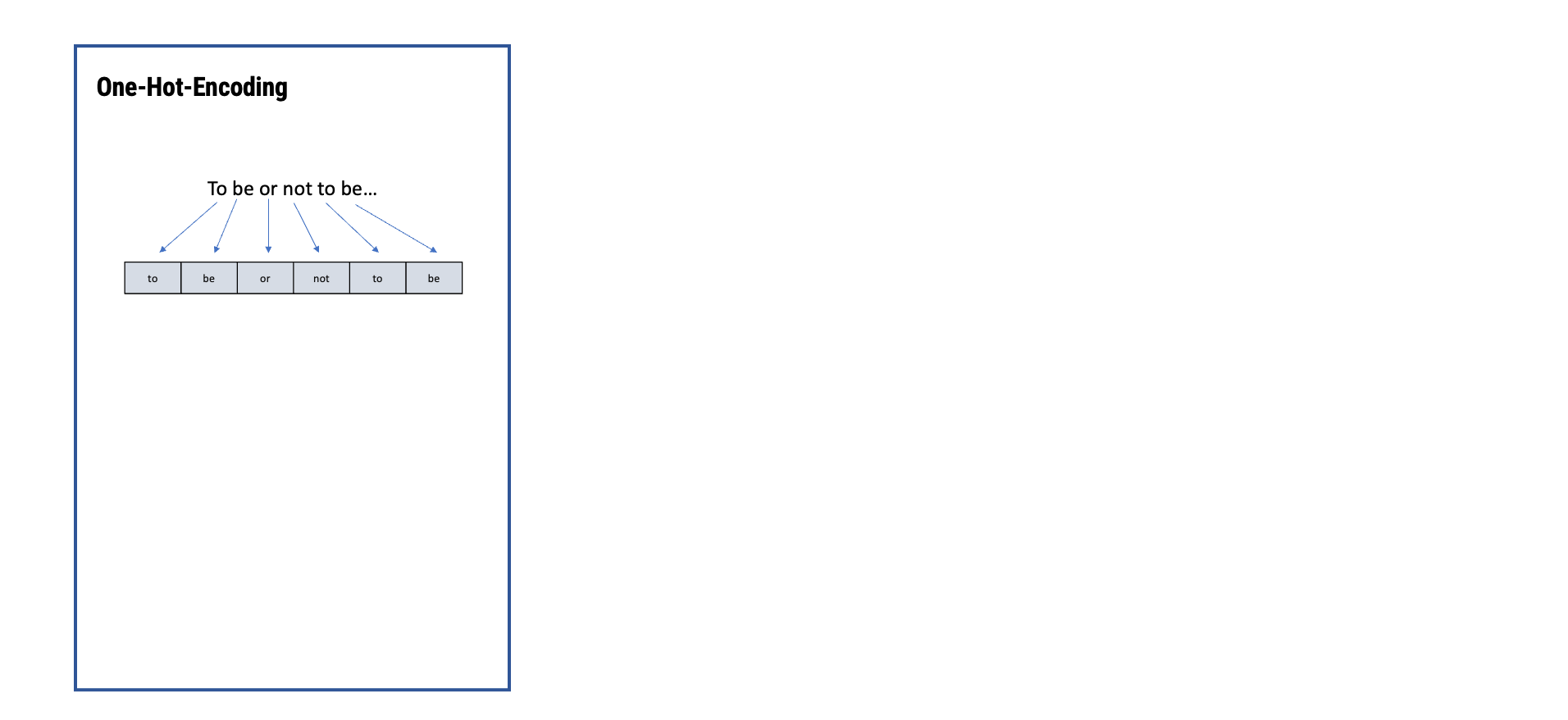

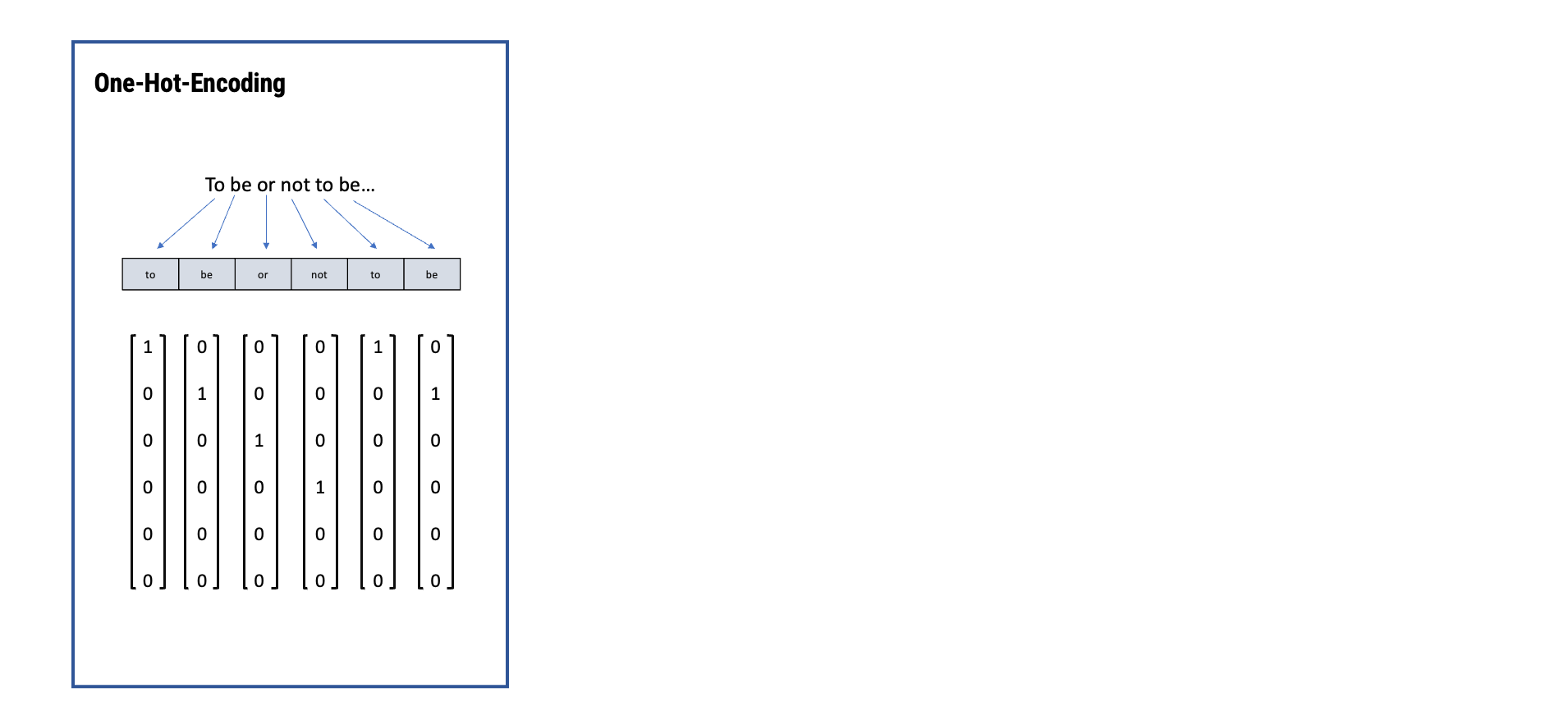

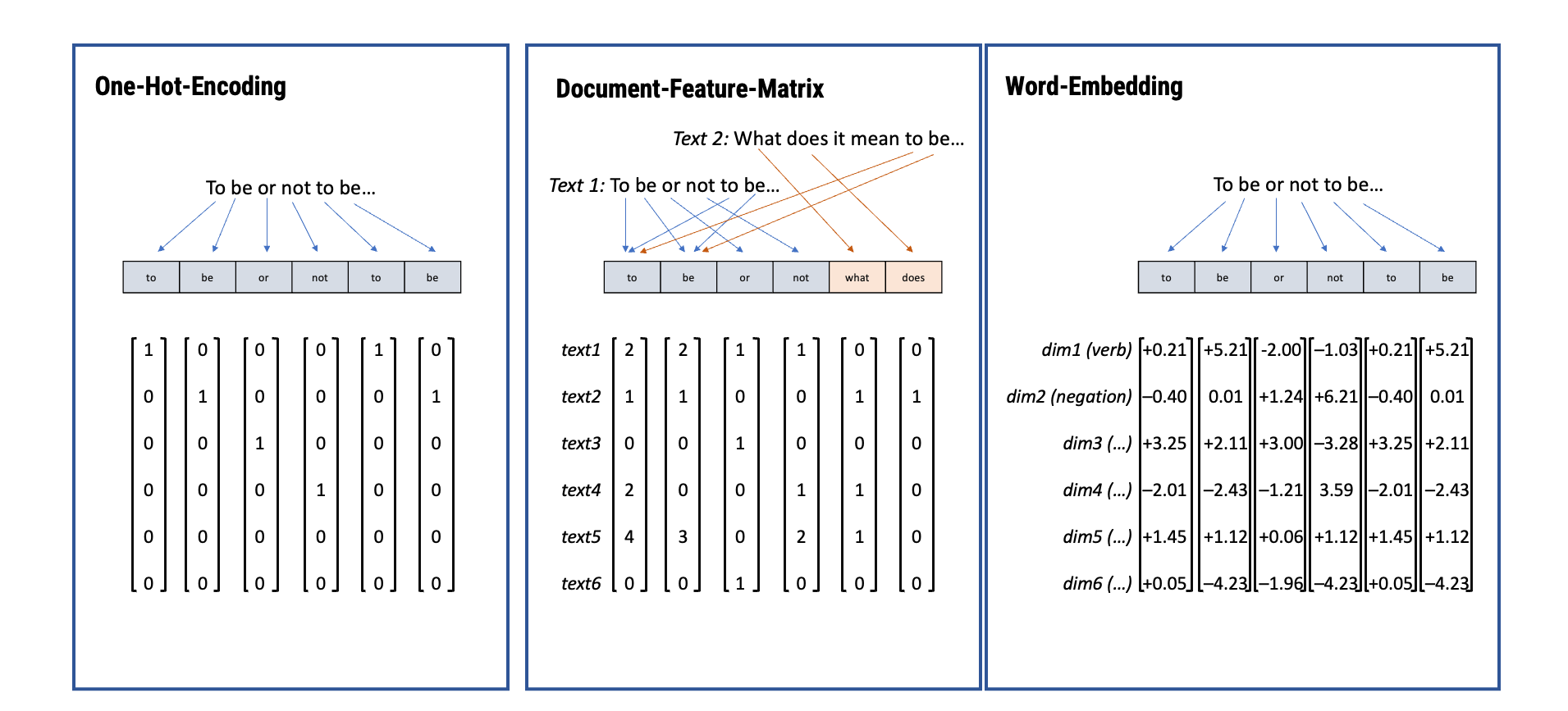

From text to numbers

In automated text classification, we use understandings of morphology (and other fields of linguistics) to break text into tokens and then represent these tokens as numbers, so that a computer can read them:
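One very simple way to turn tokens into numbers is to assign each distinct token an integer ID. The Python sketch below illustrates that idea only; the function name `build_vocabulary` and the example sentence are assumptions made for this illustration, and real pipelines typically use richer representations (e.g., count matrices or embeddings).

```python
def build_vocabulary(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocabulary = {}
    for token in tokens:
        if token not in vocabulary:
            vocabulary[token] = len(vocabulary)
    return vocabulary

text = "the cat saw the dog"
tokens = text.lower().split()                     # break the text into tokens
vocabulary = build_vocabulary(tokens)             # token -> integer ID
token_ids = [vocabulary[t] for t in tokens]       # the text as a sequence of numbers

print(vocabulary)
print(token_ids)
```

Note that the repeated token "the" receives the same ID both times, which is what allows a computer to count and compare tokens.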


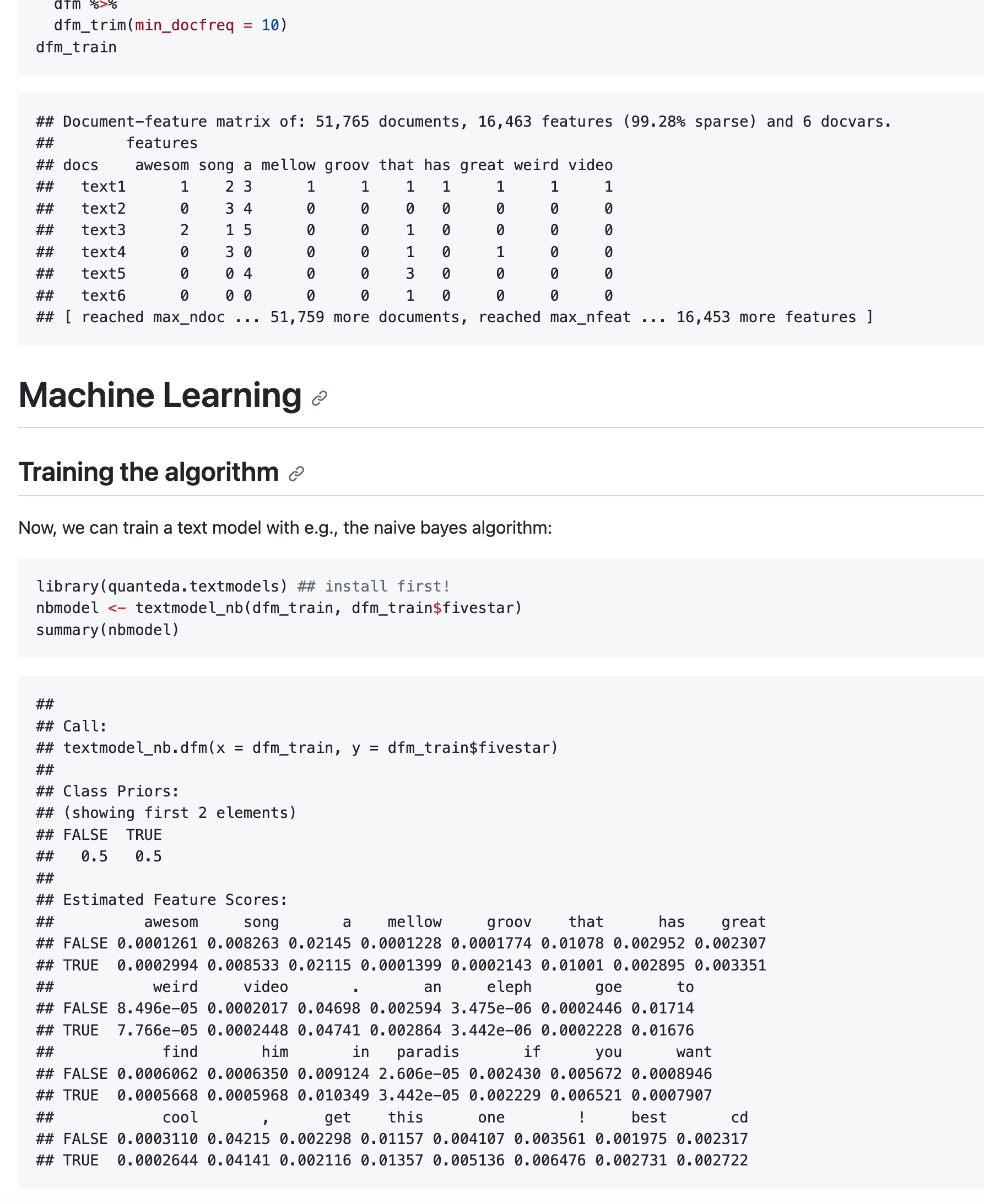

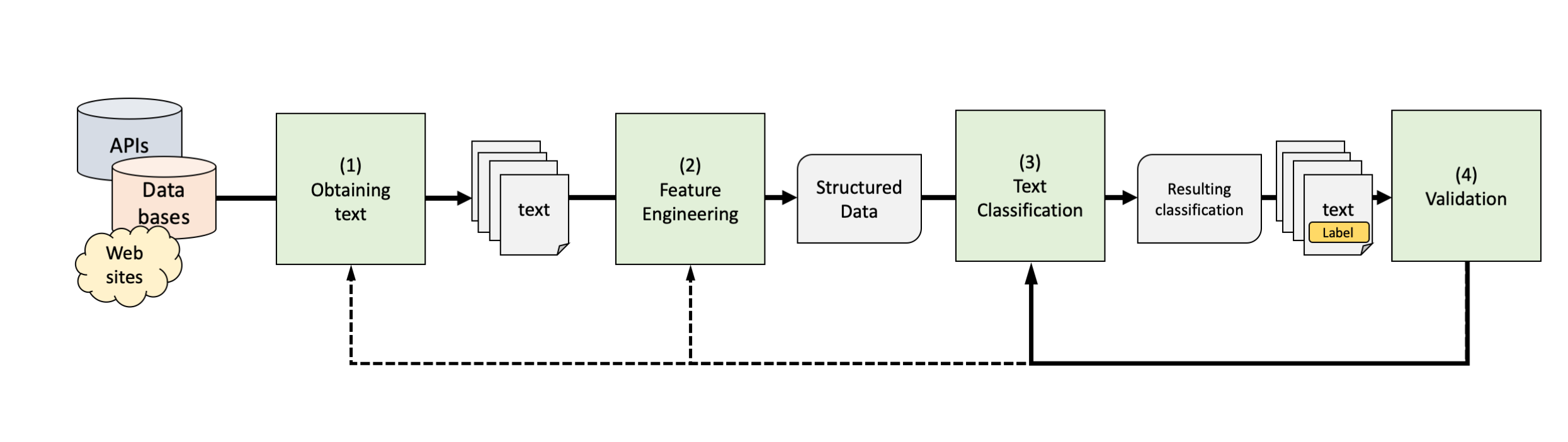

General Text Classification Workflow

A Framework for this Course

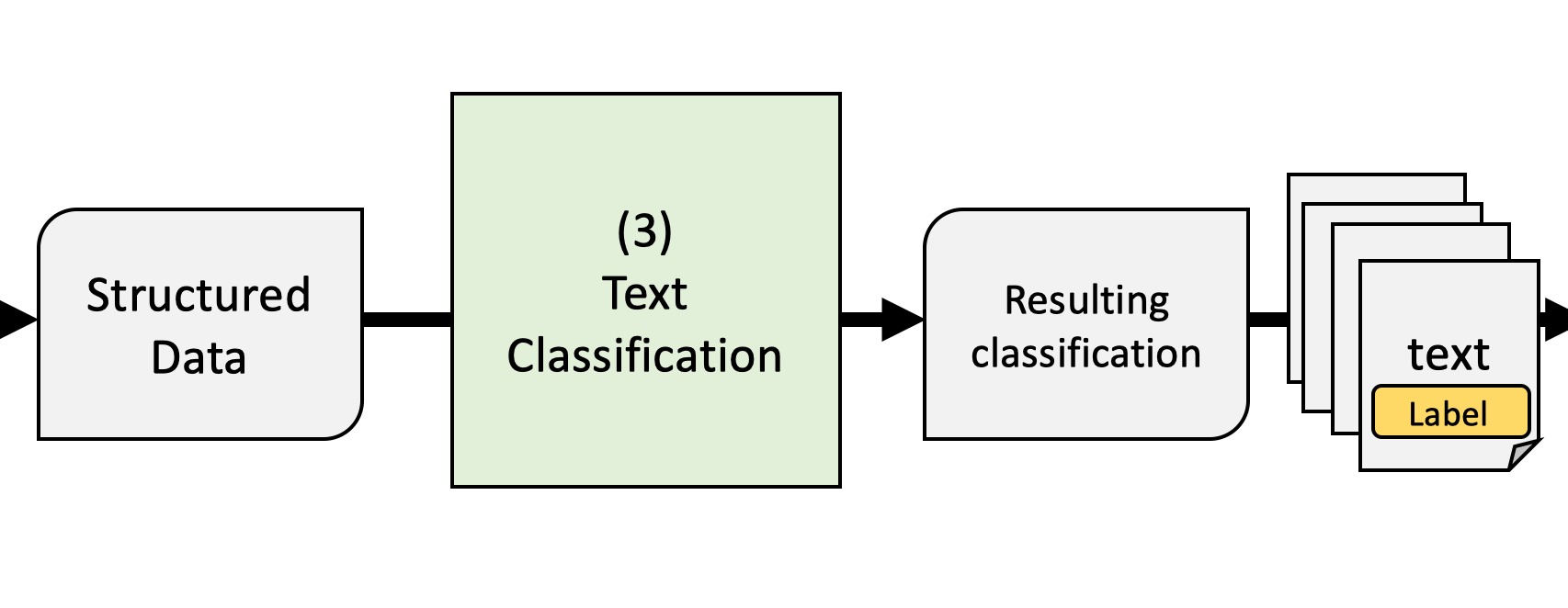

General Text Classification Pipeline

General Goal: to label (or annotate) previously unlabeled text

- Entails labeling a sentence, paragraph, or entire text (e.g., with the topic, the sentiment,…)

- Specific methods may differ, but the necessary steps (1-4) usually remain the same

We will always come back to this general pipeline to make sure we understand the core principles and goals
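To make the goal of labeling unlabeled text concrete, here is a minimal Python sketch of perhaps the simplest possible classifier, a dictionary approach to sentiment. The two word lists are hypothetical and far too small for real use; validated dictionaries contain thousands of entries.

```python
# Hypothetical mini-dictionary, for illustration only.
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "terrible", "hate"}

def classify_sentiment(text):
    """Label a text by counting positive and negative dictionary words."""
    tokens = [word.strip(".,!?").lower() for word in text.split()]
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

texts = [
    "I love this great movie!",
    "What a terrible, bad idea.",
    "The meeting is at noon.",
]
labels = [classify_sentiment(t) for t in texts]
print(list(zip(texts, labels)))
```

Even this tiny example passes through the same steps as more complex methods: obtain text, turn it into features (tokens), assign a label, and then check whether the labels are valid.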

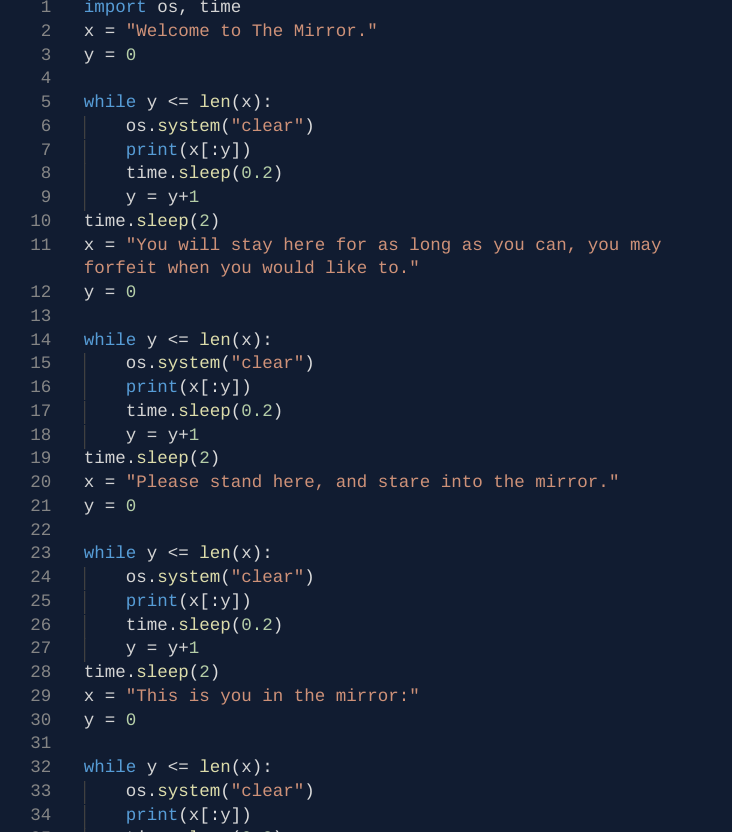

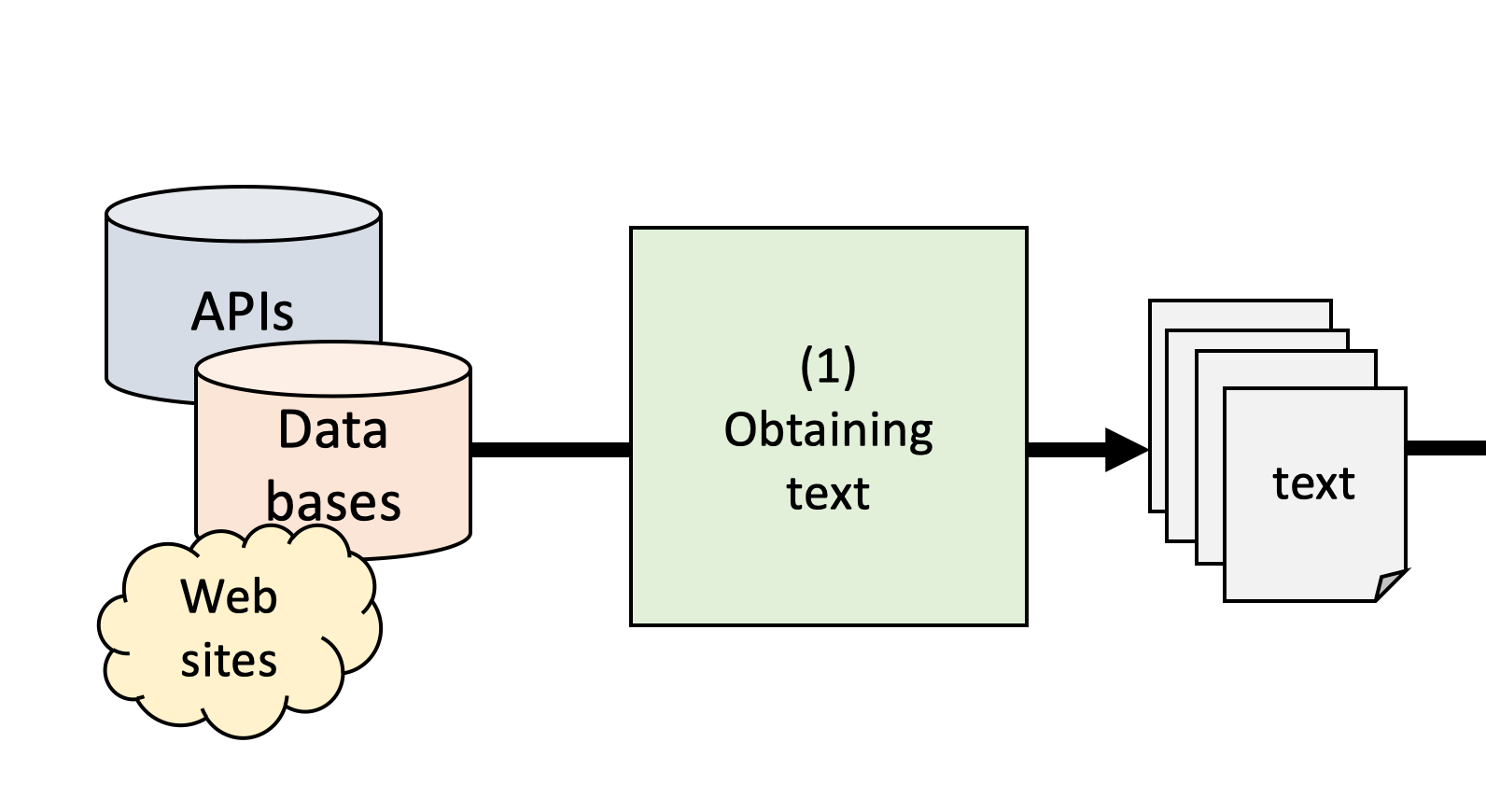

(1) Obtaining text

From publicly available data sets

- e.g.: Political texts, news from publisher / library

- Great if you can find it, often not available

By scraping primary sources

- e.g.: Press releases from party website, existing archives

- Writing scrapers can be trivial or very complex depending on web site

- Make sure to check legal issues

Via proprietary texts from third parties

- e.g.: digital archives (LexisNexis, Factiva, etc.), social media APIs

- Often custom format, API restrictions, API changes

- Terms of use not conducive to research, sharing

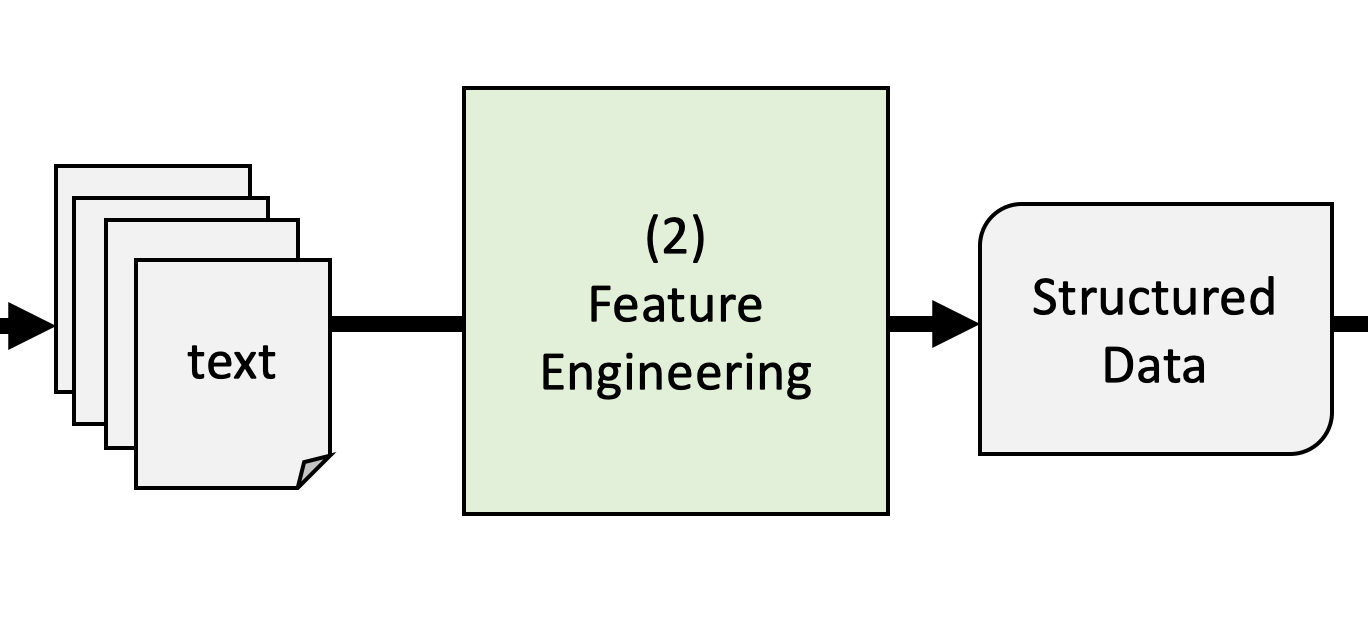

(2) Feature engineering

Feature engineering is the process of selecting, manipulating, and transforming raw data into so-called features

What type of feature engineering is necessary or useful strongly depends on the method used, but may include

- feature creation: breaking down text into the features that we want to analyze (so-called tokens such as words, sentences, bi-grams…)

- feature transformation: involves text cleaning such as stopword removal, stemming, normalization

- feature selection: frequency trimming

- creation of structured data: translating tokens into vectors or numbers (e.g., a document-feature matrix for classic ML approaches or dense vector matrices for deep learning)

Modern approaches require less and less manual feature engineering and, at times, make it entirely obsolete (because these models automatically “do it”)
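The feature engineering steps listed above can be sketched in Python as one small pipeline. This is an illustrative sketch under simplifying assumptions: the stopword list is a tiny made-up one, stemming is left out, and the function names and the `min_docfreq` parameter are hypothetical rather than taken from a specific package.

```python
from collections import Counter

STOPWORDS = {"the", "a", "is", "and", "of"}  # tiny illustrative list

def tokenize(text):
    """Feature creation: lowercase word tokens with surrounding punctuation stripped."""
    tokens = (word.strip(".,!?;:").lower() for word in text.split())
    return [t for t in tokens if t]

def remove_stopwords(tokens):
    """Feature transformation: drop very common function words."""
    return [t for t in tokens if t not in STOPWORDS]

def document_feature_matrix(documents, min_docfreq=2):
    """Build a document-feature matrix of token counts.

    Feature selection: keep only tokens that occur in at least
    `min_docfreq` documents (frequency trimming).
    """
    tokenized = [remove_stopwords(tokenize(d)) for d in documents]
    docfreq = Counter(t for tokens in tokenized for t in set(tokens))
    features = sorted(t for t, df in docfreq.items() if df >= min_docfreq)
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)
        matrix.append([counts[f] for f in features])
    return features, matrix

documents = [
    "The economy is growing.",
    "The economy and the election.",
    "A growing election campaign!",
]
features, dfm = document_feature_matrix(documents)
print(features)
for row in dfm:
    print(row)
```

Each row of the resulting matrix is one document and each column one feature, which is the structured format classic machine learning models expect.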

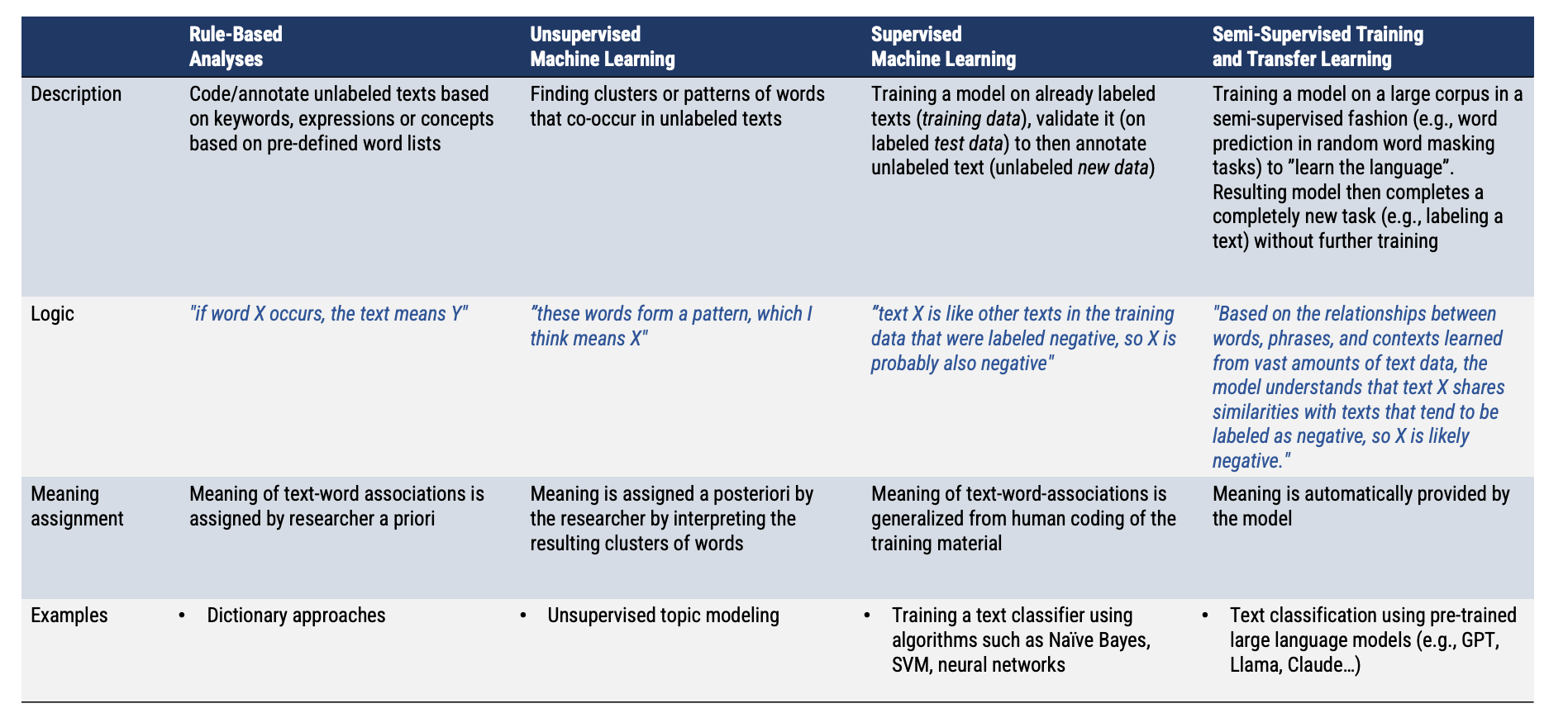

(3) Text classification

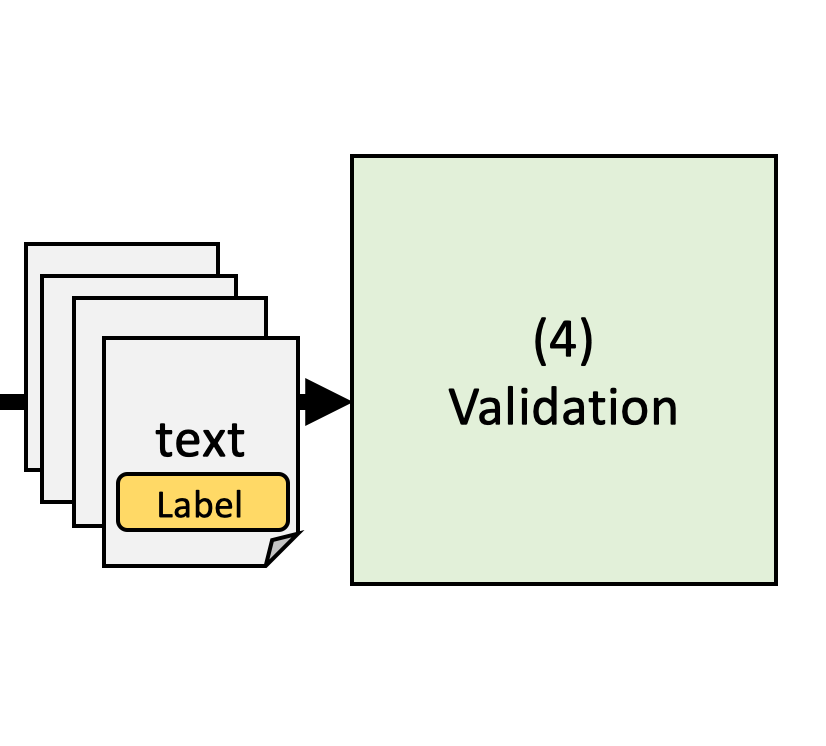

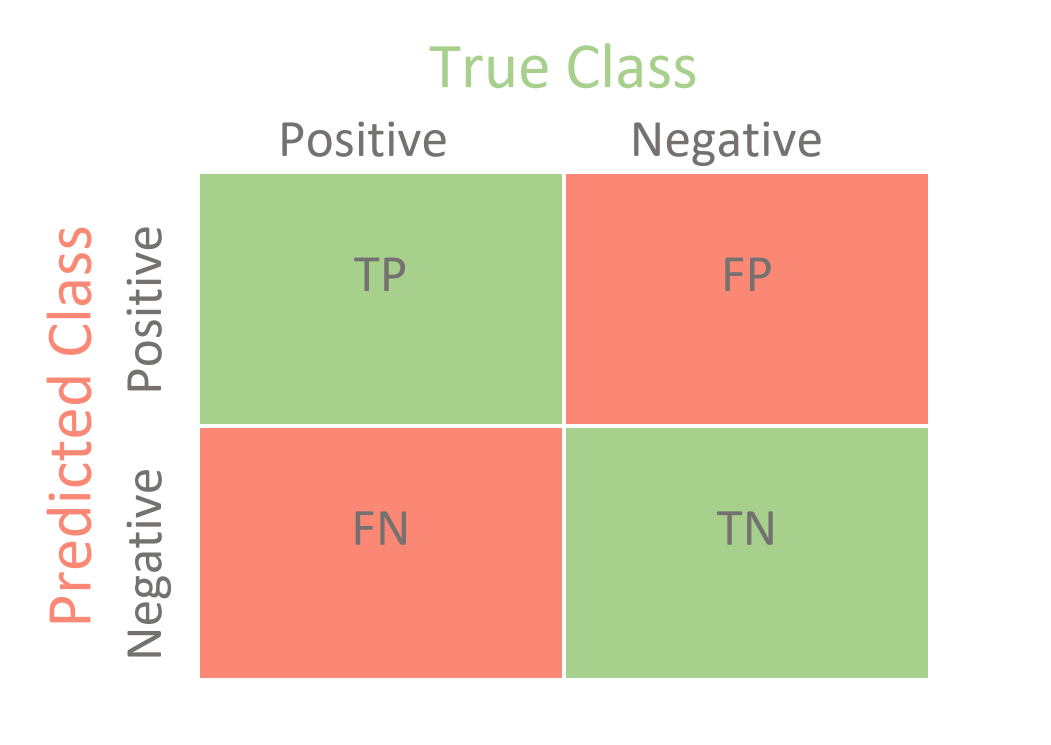

(4) Validation

Many text analysis processes are ‘black boxes’

- even manual coding

- dictionaries are ultimately opaque

- complex algorithms cannot be deciphered

Computer does not ‘understand’ natural language

- It just predicts labels based on features

- False positives and false negatives occur

We need to prove that the analysis is valid

- Validate by comparing text analysis output to a known-good reference

- Reference: often manual annotation of a ‘gold standard’
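Comparing automated output against a gold standard usually comes down to a few standard metrics. The Python sketch below computes accuracy, precision, recall, and F1 for one label; the gold and predicted labels are invented for illustration, and the function name `validation_metrics` is an assumption of this example.

```python
def validation_metrics(gold, predicted, positive_label):
    """Compare predicted labels with gold-standard labels for one target label."""
    pairs = list(zip(gold, predicted))
    tp = sum(g == positive_label and p == positive_label for g, p in pairs)
    fp = sum(g != positive_label and p == positive_label for g, p in pairs)
    fn = sum(g == positive_label and p != positive_label for g, p in pairs)
    correct = sum(g == p for g, p in pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,  # share of predicted positives that are correct
        "recall": recall,        # share of true positives that were found
        "f1": f1,                # harmonic mean of precision and recall
    }

# Invented example: manual annotation (gold) vs. automated classification.
gold      = ["pos", "pos", "neg", "neg", "pos", "neg"]
predicted = ["pos", "neg", "neg", "pos", "pos", "neg"]

metrics = validation_metrics(gold, predicted, positive_label="pos")
print(metrics)
```

Precision penalizes false positives and recall penalizes false negatives, so reporting both gives a fuller picture than accuracy alone.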

TIMELINE OF NATURAL LANGUAGE PROCESSING


Ethics of Computational Communication Research

Ethical challenges in using computational methods

Ethical challenges in computational research (1)

Privacy and Data Security: A key ethical concern is the handling of personal data, including the risk of data breaches and misuse. When using proprietary software/algorithms, should we share personal data with the company behind it (e.g., OpenAI, Google)?

Bias and Fairness: Computational methods can inherit biases from the data they are trained on. This can result in unfair or discriminatory outcomes, especially when later applied to contexts like hiring, lending, and criminal justice. Addressing these biases remains an ongoing challenge. Validation is of utmost importance!

Transparency and Accountability: Many computational algorithms are complex and not easily understandable by humans. This lack of transparency can make it difficult to hold individuals or organizations accountable for the decisions made by these algorithms. Lack of explainability can lead to a lack of trust.

Social Manipulation: Computational methods can be used to manipulate public opinion or behavior, often in unethical ways (see Kramer et al., 2014). This includes spreading misinformation or conducting social experiments without consent and debriefing.

Ethical challenges in computational research (2)

Environmental Impact: The massive computational power required for some methods, such as training deep learning models, can have a significant environmental impact, contributing to concerns about energy consumption and climate change.

Ownership and Intellectual Property: Questions of intellectual property rights, data and model ownership, and access to computational methods can raise further ethical issues. Some may argue that access to certain methods should be open to all (see also the point on transparency and accountability), while others may seek to protect intellectual property (among other things, to prevent misuse).

Ethical AI: In the field of artificial intelligence (AI) and machine learning, ethical concerns often revolve around the development of AI systems that can make autonomous decisions. Ensuring these decisions align with human values is a significant challenge. Ethical dilemmas arise around the degree of control we should and are able to maintain over these systems and who should be responsible for their actions.

see also van Atteveldt & Peng, 2018

General ethical guidelines

Salganik (2018) proposes the following four principles for evaluating the ethics of a computational study. They are loosely based on the Belmont Report (1978), a foundational document prescribing ethical guidelines for the protection of human research subjects:

Respect for persons: Treating people as autonomous and honoring their wishes

Beneficence: Understanding and improving the risk/benefit profile of a study

Justice: Risks and benefits should be evenly distributed among participants/research subjects

Respect for law and public interest: Respect privacy, copyright, and access rights

Salganik, 2018, chap. 6

Ethical principles for CCR

Formalities

How is this course going to work?

Teachers

| Dr. Philipp K. Masur | Dr. Kaspar Welbers | Emma Diel | Roan Buma |

|---|---|---|---|

| Lecturer & Course Coordinator | Teacher | Teacher | Teacher |

R help desk

Not everything will work the first time.

Because we work with code, tiny mistakes can produce errors.

If you have any problems related to installing software, running packages or code, or simply an error message you do not understand, you can address the R Help Desk.

For office hours and how to get in contact with the R Help Desk, consult the course canvas page.

Information and Materials

The major hub for this course is the following website:

Communication and assignment submission via Canvas:

- S_CADC: Computationele analyse van digitale communicatie

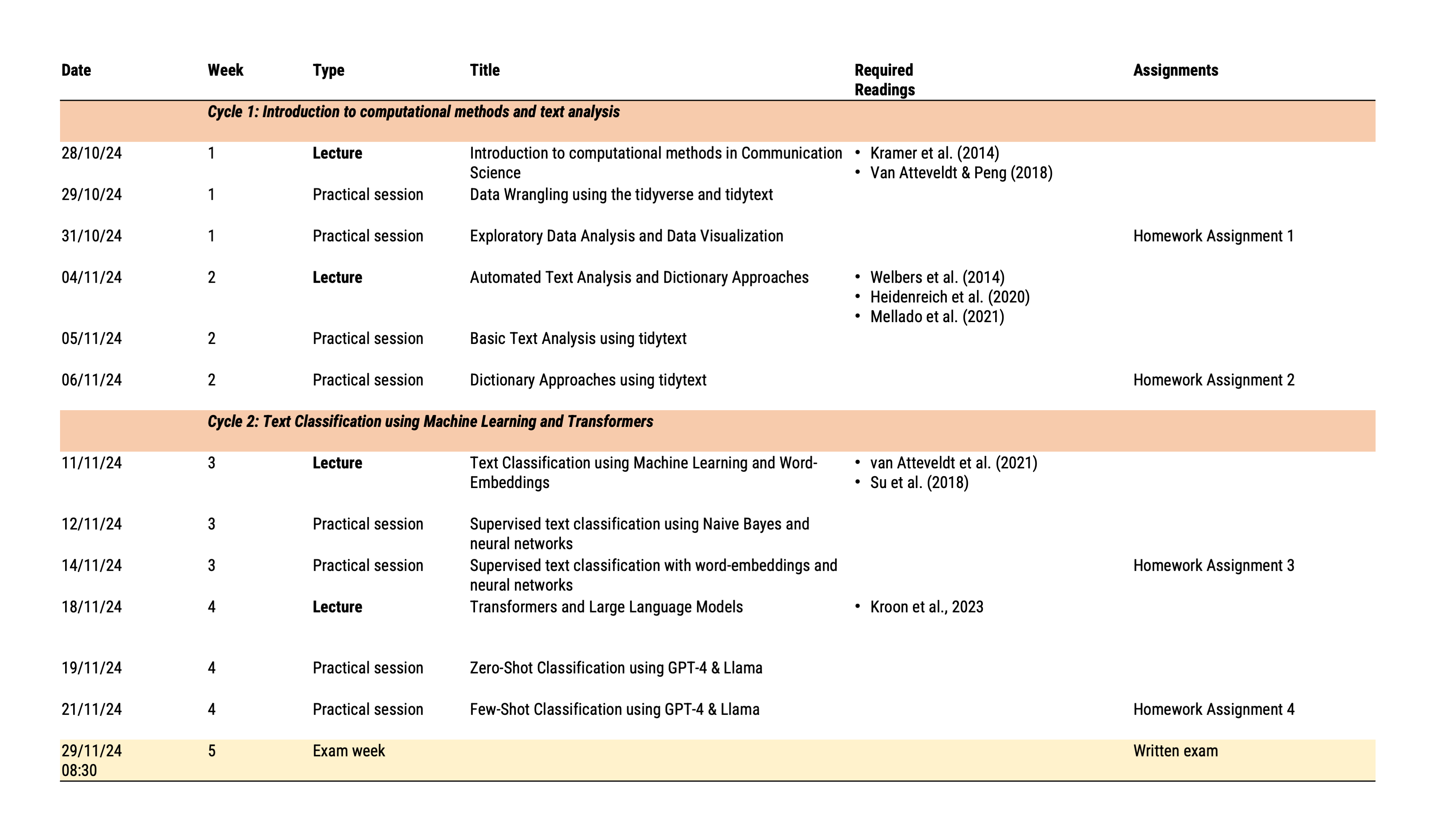

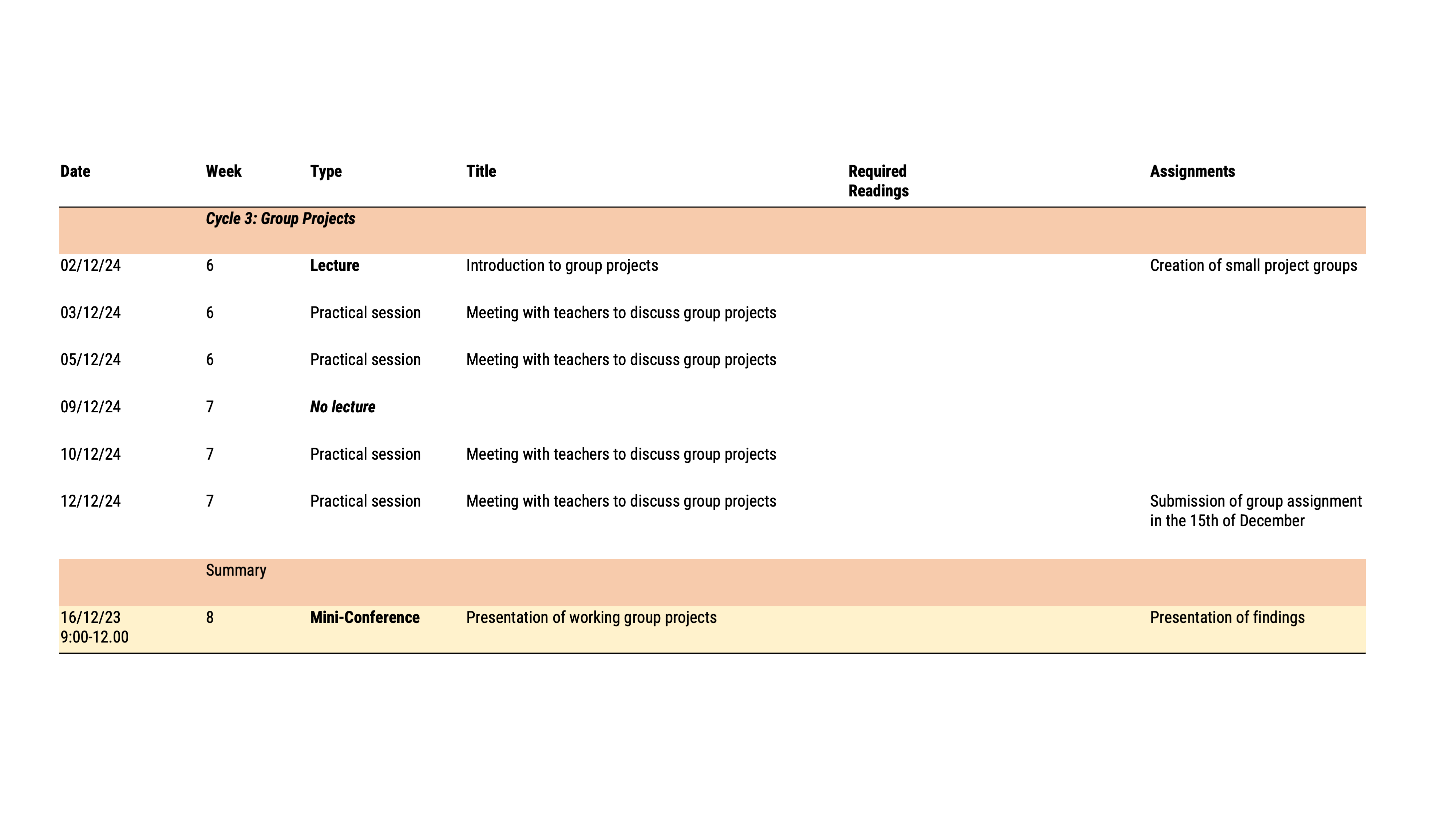

Course Schedule


Attendance

You will realize that this course has a comparatively steep learning curve. We will learn about research papers and recreate their analyses in R. It is thus generally recommended to follow all lectures and practical sessions! BUT: Despite some initial challenges, you will also experience a lot of self-efficacy: Learning R and computational methods is very rewarding and at the end, you can be proud of what you have achieved!

Attendance during the regular lectures is highly recommended (this is the content for the exam).

Attendance of the practical sessions is mandatory.

One absence from one of the workgroup sessions, for serious health, family, or work reasons, can be excused if the instructor is advised in advance.

Exam

After the first two cycles, there will be a written exam (40% of the final grade):

The exam will consist of ~36 multiple choice questions and ~8 open-ended questions

Exam questions will be based on all material discussed in the first two cycles, including lecture content, class materials, and required readings

Example questions will be provided throughout the course

Please register for the exam via VUweb

Homework Assignments

After each week, students are required to hand in a “homework assignment”, which represents a practical application of some of the taught analysis methods (e.g. with a new data set, specific research question) (30% of the final grade):

Each week’s assignment requires students to apply the methods they have learned to a new data set.

Students will receive an RMarkdown template for their code and the respective data set(s). Explanations of how to use these templates will be provided in the practical sessions and via Canvas.

Students are required to hand in the RMarkdown file (.rmd) and a compiled html document (.html) on Thursday (24:00, hard deadline!) the week after. All homework assignments must be submitted to pass!

Homework assignments will be graded as fail, pass, or good.

Group presentation

In the third cycle, students will be assigned to small working groups in which they independently conduct a research project. A final poster presentation on the 16th of December (most likely 09.00 to 12.00) will be graded per group (30% of the final grade).

Students are required to hand in the poster (as PDF) and analyses (again .rmd and .html) beforehand (Deadline: 15th of December 2021 at 24:00, hard deadline!)

Questions?

If anything is unclear, do ask now.

Required Reading

Kramer, A. D. I., Guillory, J. E., & Hancock, J. T. (2014). Experimental Evidence of Massive-Scale Emotional Contagion Through Social Networks. Proceedings of the National Academy of Sciences, 111(24), 8788–8790.

Van Atteveldt, W., & Peng, T.-Q. (2018). When communication meets computation: Opportunities, challenges, and pitfalls in computational communication science. Communication Methods and Measures, 12(2-3), 81–92. https://doi.org/10.1080/19312458.2018.1458084

(available on Canvas)

References

Andrich, A., Bachl, M., & Domahidi, E. (2023). Goodbye, Gender Stereotypes? Trait Attributions to Politicians in 11 Years of News Coverage. Journalism & Mass Communication Quarterly, 100(3), 473–497. https://doi.org/10.1177/10776990221142248

Blumenstock, J. E., Cadamuro, G., & On, R. (2015). Predicting Poverty and Wealth from Mobile Phone Metadata. Science, 350(6264), 1073–1076. https://doi.org/10.1126/science.aac4420

Haim, M., Scherr, S., & Arendt, F. (2021). How search engines may help reduce drug-related suicides. Drug and Alcohol Dependence, 226, 108874. https://doi.org/10.1016/j.drugalcdep.2021.108874

Jacobi, C., van Atteveldt, W., & Welbers, K. (2016). Quantitative analysis of large amounts of journalistic texts using topic modelling. Digital Journalism, 4(1), 89–106. https://doi.org/10.1080/21670811.2015.1093271

Jürgens, P., Meltzer, C., & Scharkow, M. (2021, in press). Age and Gender Representation on German TV: A Longitudinal Computational Analysis. Computational Communication Research.

Kramer, A. D. I., Guillory, J. E., & Hancock, J. T. (2014). Experimental Evidence of Massive-Scale Emotional Contagion Through Social Networks. Proceedings of the National Academy of Sciences, 111(24), 8788–8790.

Parry, D. A., Davidson, B. I., Sewall, C. J., Fisher, J. T., Mieczkowski, H., & Quintana, D. S. (2021). A systematic review and meta-analysis of discrepancies between logged and self-reported digital media use. Nature Human Behaviour, 5(11), 1535-1547.

Salganik, M. J. (2018). Bit by Bit: Social Research in the Digital Age. Princeton University Press.

Scharkow, M. (2016). The accuracy of self-reported internet use—A validation study using client log data. Communication Methods and Measures, 10(1), 13-27.

Simon, M., Welbers, K., Kroon, A. C. & Trilling, D. (2022). Linked in the dark: A network approach to understanding information flows within the Dutch Telegramsphere. Information, Communication & Society, https://doi.org/10.1080/1369118X.2022.2133549


Thompson, T. (2010). Crime software may help police predict violent offences. The Observer. Retrieved from https://www.theguardian.com/uk/2010/jul/25/police-software-crime-prediction

Van Atteveldt, W., & Peng, T.-Q. (2018). When communication meets computation: Opportunities, challenges, and pitfalls in computational communication science. Communication Methods and Measures, 12(2-3), 81–92. https://doi.org/10.1080/19312458.2018.1458084

Wimmer, A., & Lewis, K. (2010). Beyond and Below Racial Homophily: ERG Models of a Friendship Network Documented on Facebook. American Journal of Sociology, 116(2), 583–642.

Example Exam Question (Multiple Choice)

Why is the “Facebook Manipulation Study” by Kramer et al. ethically problematic?

A. People did not know that they took part in a study (no informed consent).

B. It overtly manipulated people’s emotion.

C. Both A and B are true.

D. The study was not ethically problematic.


Example Exam Question (Open Format)

Name and explain two characteristics of big data.

(4 points, 2 points for correctly naming them and 2 points for correctly explaining them)

Big data are often “incomplete”: This means they do not have the information that you will want for your research. This is a common feature of data that were created for purposes other than research. For example, log data (e.g., browser history) includes all links a person has visited over time, but does not provide any additional information. Moreover, it may contain gaps where the software failed or the person purposefully hid their browsing behavior.

Big data are often “algorithmically confounded”: Behavior in big data systems is not natural; it is driven by the engineering goals of the systems. For example, what you see on a Facebook news feed depends on the algorithms that Facebook has built into its platform. Behavior of individuals is thus also driven by these system-immanent features.

Thank you for your attention!
