# Load data

library(tidyverse)

url <- "https://raw.githubusercontent.com/vanatteveldt/ecosent/master/data/intermediate/sentences_ml.csv"

d <- read_csv(url) |>

select(id, text = headline, lemmata, sentiment=value) |>

mutate(sentiment = factor(sentiment, levels = c(-1, 0, 1),

labels = c("negative", "neutral", "positive")))

head(d)

Word Embeddings, Transformers, and Large Language Models

Week 4: BERT, GPT, and Co

Dr. Philipp K. Masur

How we did things so far…

Remember the last practical session?

We dealt with the following data set of Dutch finance news headlines:

# A tibble: 6 × 4

id text lemmata sentiment

<dbl> <chr> <chr> <fct>

1 10007 Rabobank voorspelt flinke stijging hypotheekrente Raboban… neutral

2 10027 D66 wil reserves provincies aanspreken voor groei D66 wil… neutral

3 10037 UWV: dit jaar meer banen UWV dit… positive

4 10059 Proosten op geslaagde beursgang Bols proost … positive

5 10099 Helft werknemers gaat na 65ste met pensioen helft w… neutral

6 10101 Europa groeit voorzichtig dankzij lage energieprijzen Europa … positive

The classic machine learning approach

- A lot of different steps…

# Load packages for modelling and text preprocessing

library(tidymodels)

library(textrecipes)

# Create test and train data sets

split <- initial_split(d, prop = .50)

# Feature engineering

rec <- recipe(sentiment ~ lemmata, data = d) |>

step_tokenize(lemmata) |>

step_tf(all_predictors()) |>

step_normalize(all_predictors())

# Setup algorithm/model

mlp_spec <- mlp(epochs = 600, hidden_units = c(6),

penalty = 0.01, learn_rate = 0.2) |>

set_engine("brulee") |>

set_mode("classification")

# Create workflow

mlp_workflow <- workflow() |>

add_recipe(rec) |>

add_model(mlp_spec)

# Fit model

m_mlp <- fit(mlp_workflow, data = training(split))

What if things were easier?

- Wouldn’t it be great if we did not have to wrangle the data or preprocess the text, and could simply let the “computer” figure this out?

Content of this Lecture

Machine Learning vs. Deep Learning

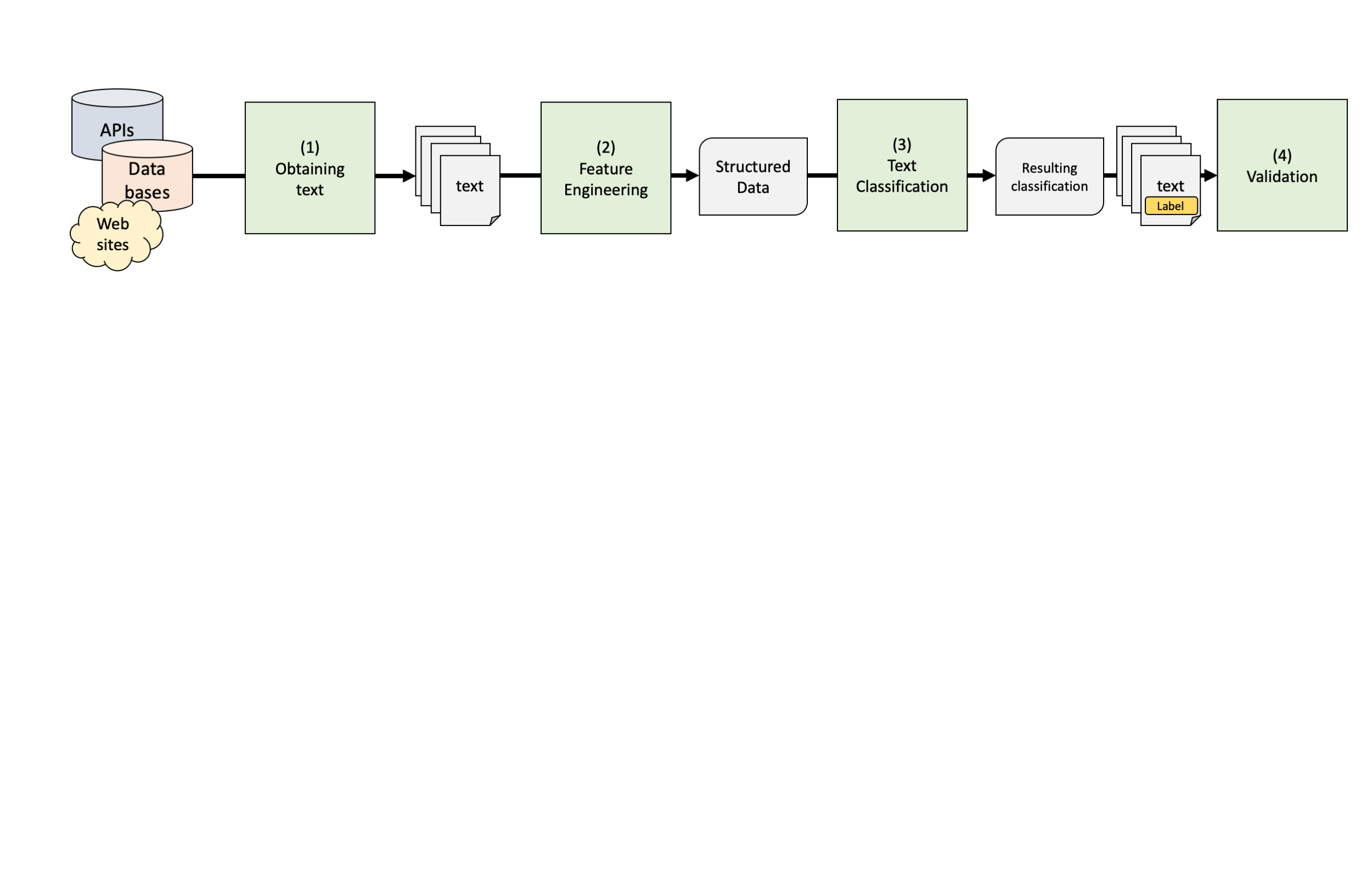

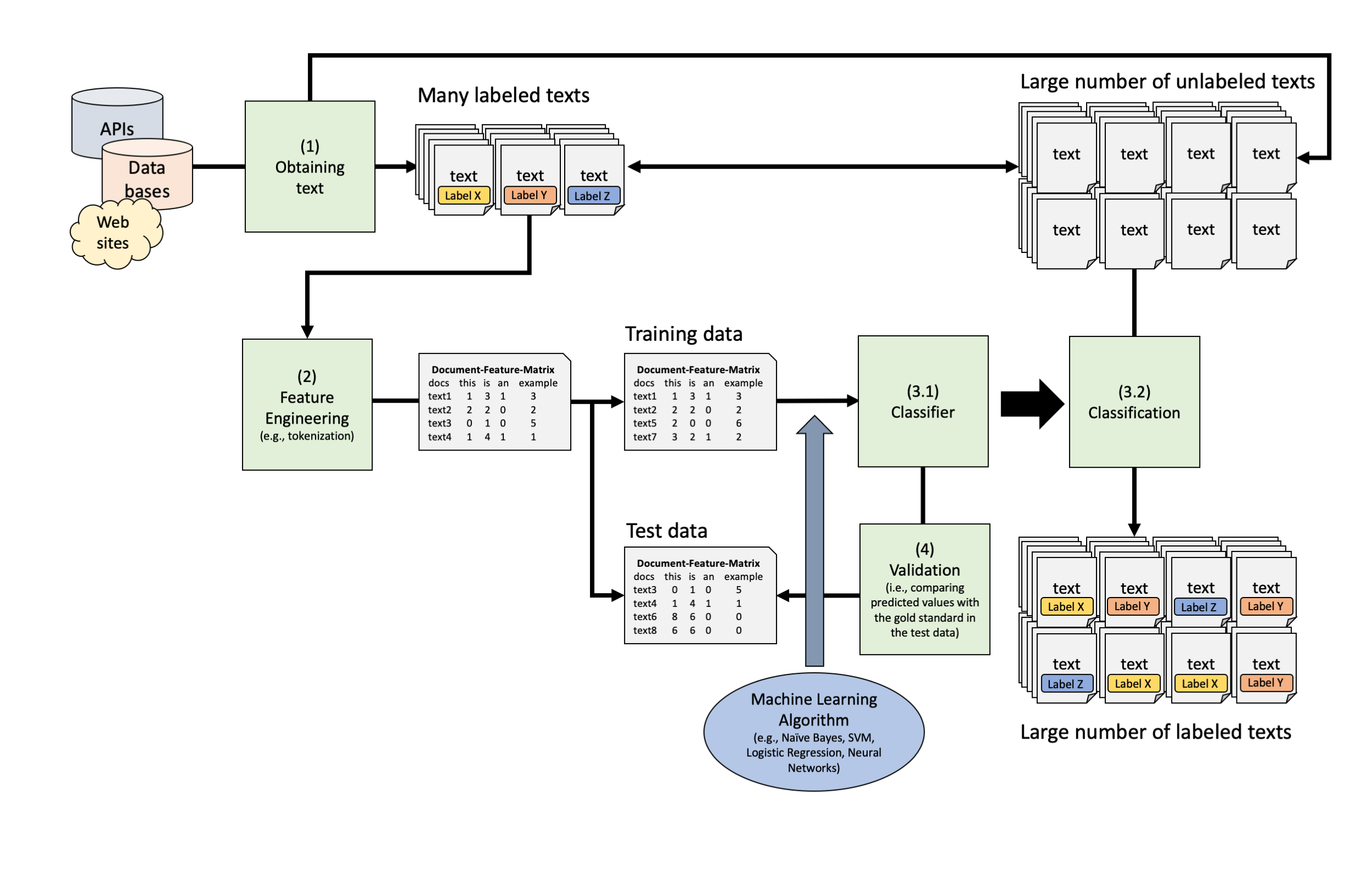

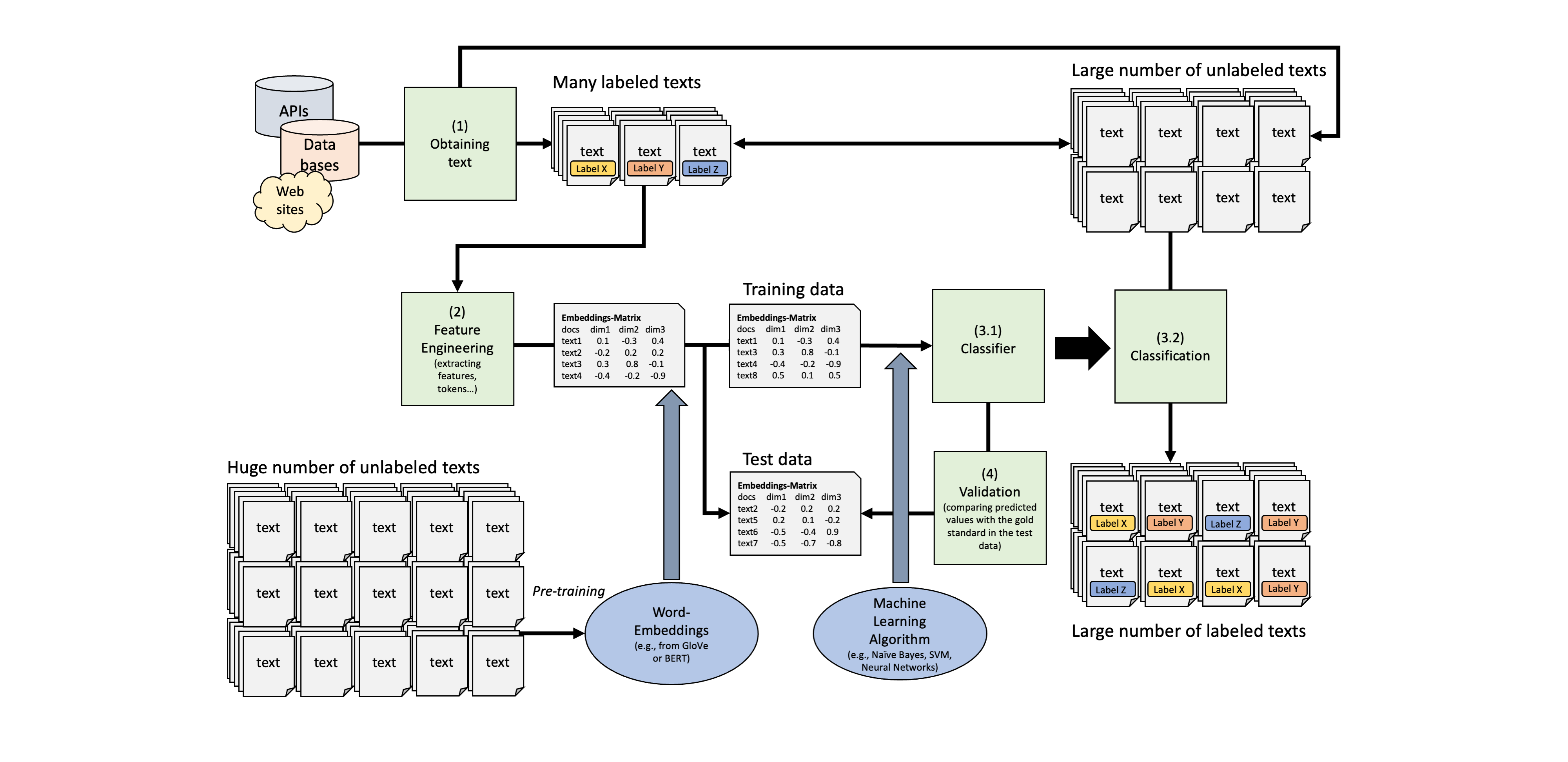

1.1. Reminder: Machine Learning Text classification Pipeline

1.2. How did the field move on?

From Term Frequencies to Word-Embeddings

2.1. Basic principles

2.2. Similarity of words and texts

2.3. Pre-trained Word-Embeddings

2.4. Text Classification Pipeline with Word-Embeddings

2.5. Examples in the literature

The Rise of Transformers and Transfer Learning

3.1. Overview and Principles

3.2. Architecture of the Transformer Model

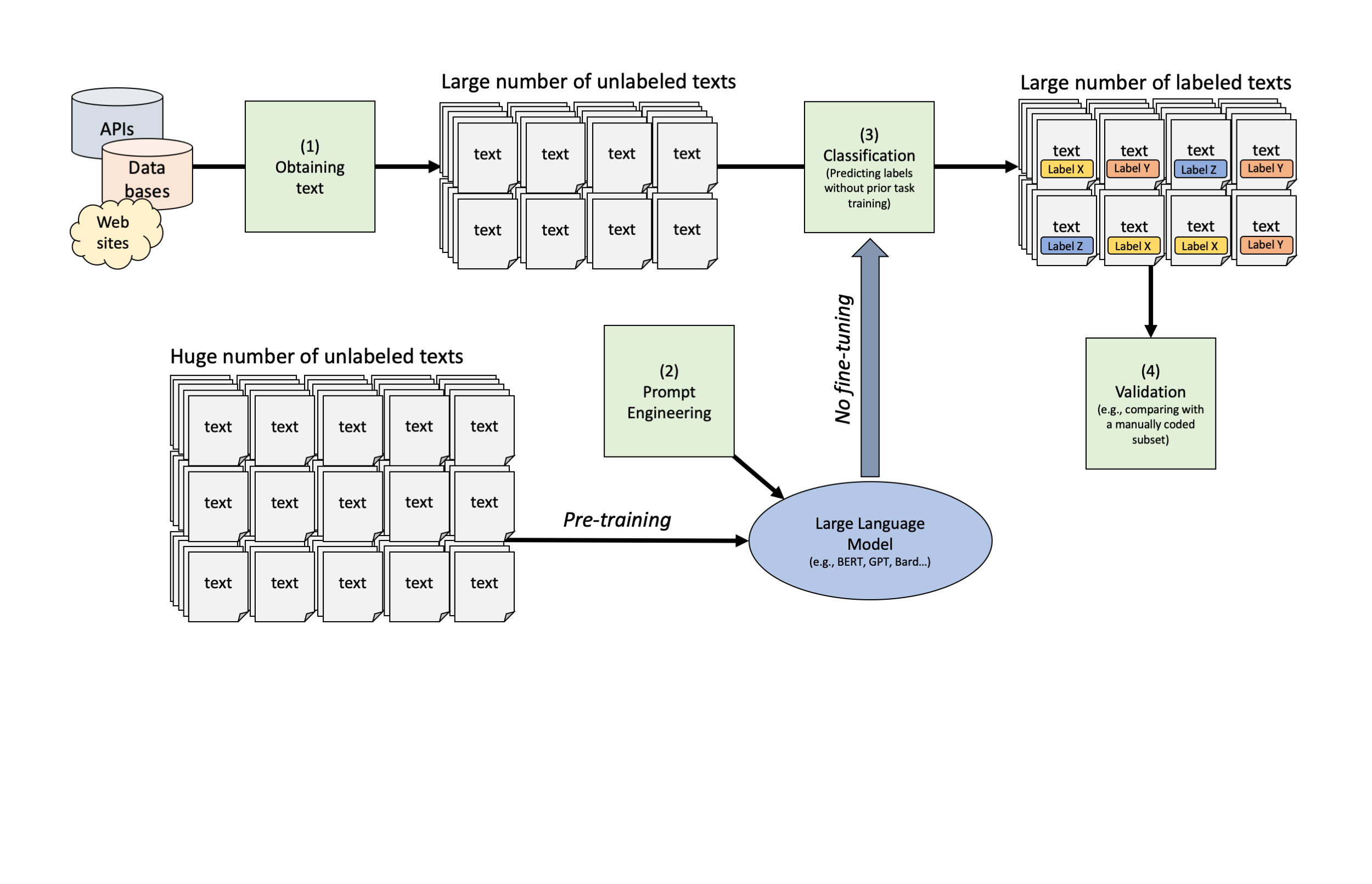

3.3. The Transformer/LLM Text Classification Pipeline

3.4. The Concept of Fine-Tuning

Large Language Models: BERT, GPT and the “AI Revolution”?

4.1. What are Large Language Models and Generative AI?

4.2. A Peek into the Architecture of Famous LLMs

4.3. Zero-Shot Text Classification Using BERT and GPT

4.4. Validation, validation, validation!

4.5. Examples in the Literature

Summary and conclusion

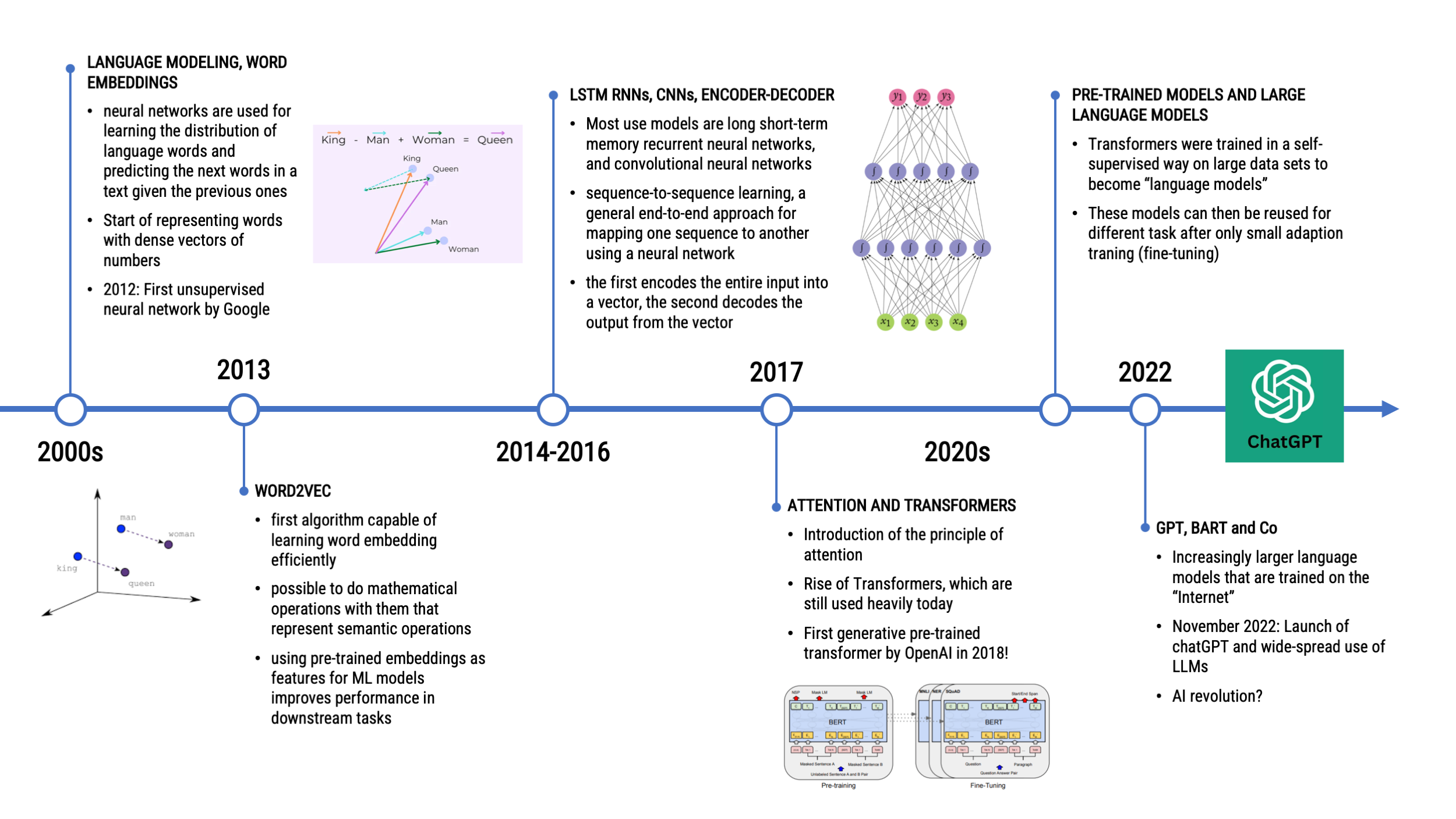

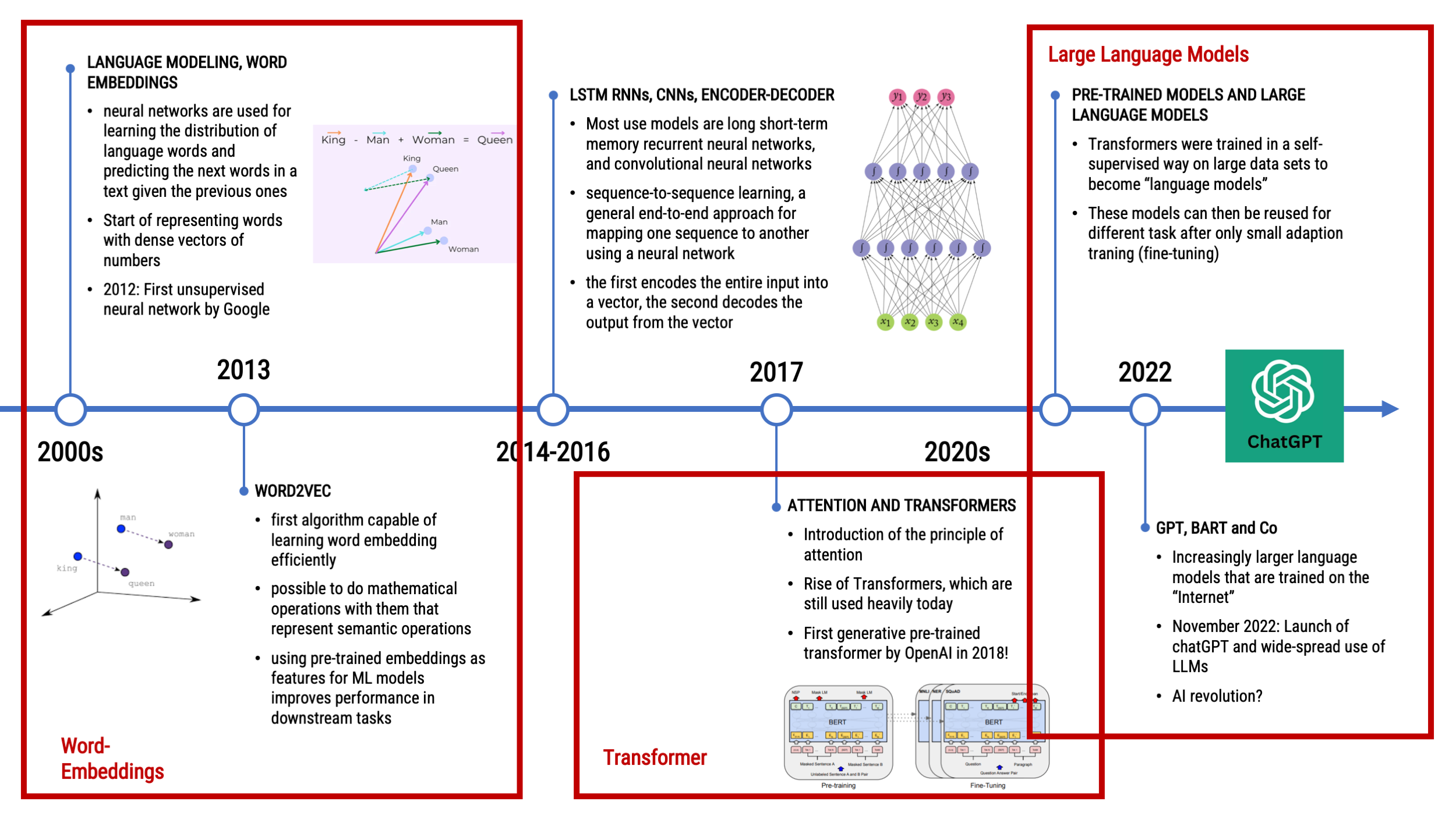

5.1. A Look Back at the Chronology of NLP

5.2. State-of-the-Art in Classification

5.3. Ethical considerations

5.4. Conclusion

Machine Learning vs. Deep Learning

Text Classification Pipeline

Machine Learning (1990-2010)

And now?

Massive advancements in recent years

Massive advancement in how text can be represented as numbers

- From simple word counts to word embeddings

- Static vs. contextual word embeddings

Pretraining and transfer learning

- Word embeddings can be trained on large-scale corpora

- Pretrained word embeddings can be fine-tuned (with less training data) and then used for downstream tasks

Transformers and Generative AI

- Larger and larger “language models”

- Ever more powerful in solving rather complex tasks

- Conversational frameworks (e.g., GPT)

From Term Frequencies to Word-Embeddings

More powerful and informative ways to represent text as numbers.

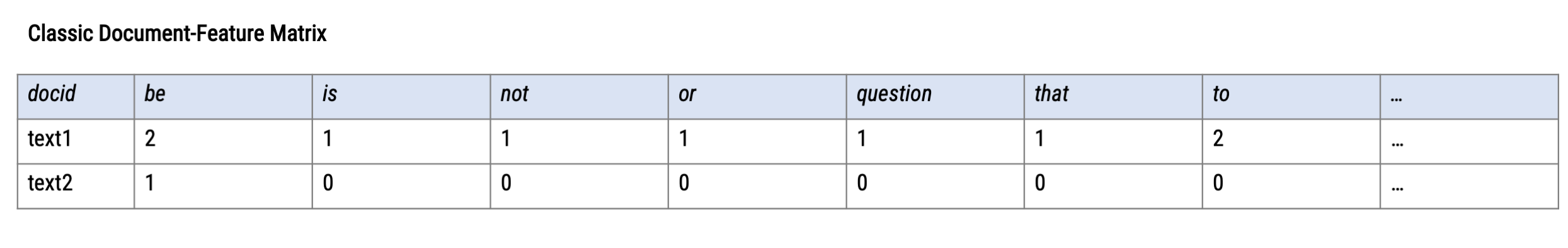

Remember: The initial problem of text analysis

Computers don’t read text; they can only deal with numbers

For this reason, so far, we tokenized our texts (e.g., in words) and summarized their frequency across texts to create a document-feature matrix within the bag-of-words model

Such a text representation has some issues:

- Treats words as equally important (→ requires removal of noise, stopwords…)

- Ignores word order and context

- Results in a sparse matrix (→ computationally expensive)
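The sparsity issue can be made concrete with a small sketch. The three texts below are invented for illustration, and the tokenization is a plain whitespace split rather than the preprocessing used in the practical session.

```r
# Toy corpus (made up for illustration)
texts <- c("the movie was great",
           "the film was fantastic",
           "i had pizza for lunch")

# Tokenize on whitespace and collect the vocabulary
tokens <- strsplit(texts, " ")
vocab  <- sort(unique(unlist(tokens)))

# Document-term matrix: one row per text, one column per unique word
dtm <- t(vapply(tokens,
                function(tok) as.integer(table(factor(tok, levels = vocab))),
                integer(length(vocab))))
dimnames(dtm) <- list(paste0("text", seq_along(texts)), vocab)

dtm

# Share of cells that are zero; this share grows with the vocabulary
sparsity <- mean(dtm == 0)
sparsity
```

Even with only three short texts, most cells are zero, and "movie" and "film" end up in unrelated columns although they mean nearly the same thing.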

Alternative: Map words into a vector space

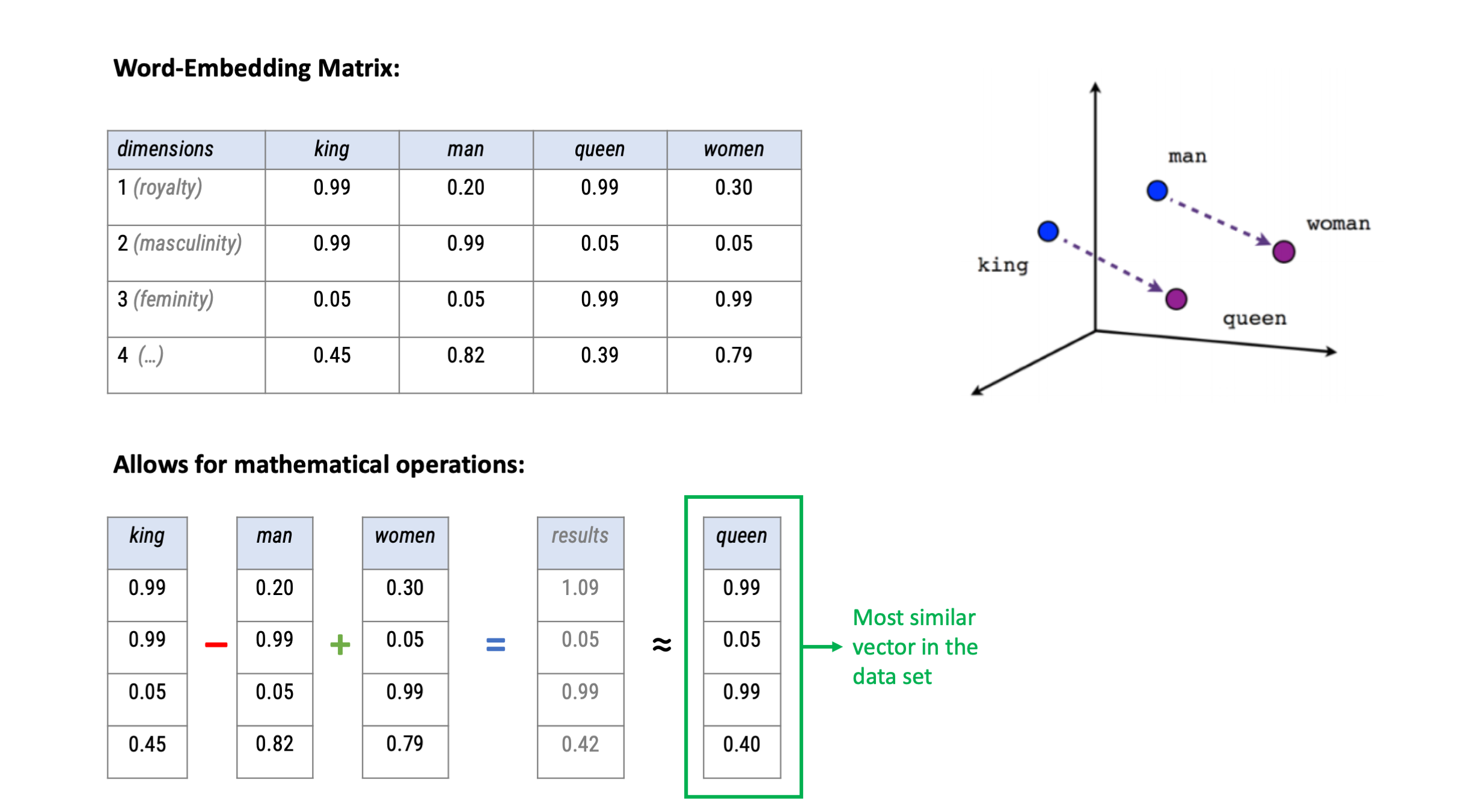

(Static) Word embeddings

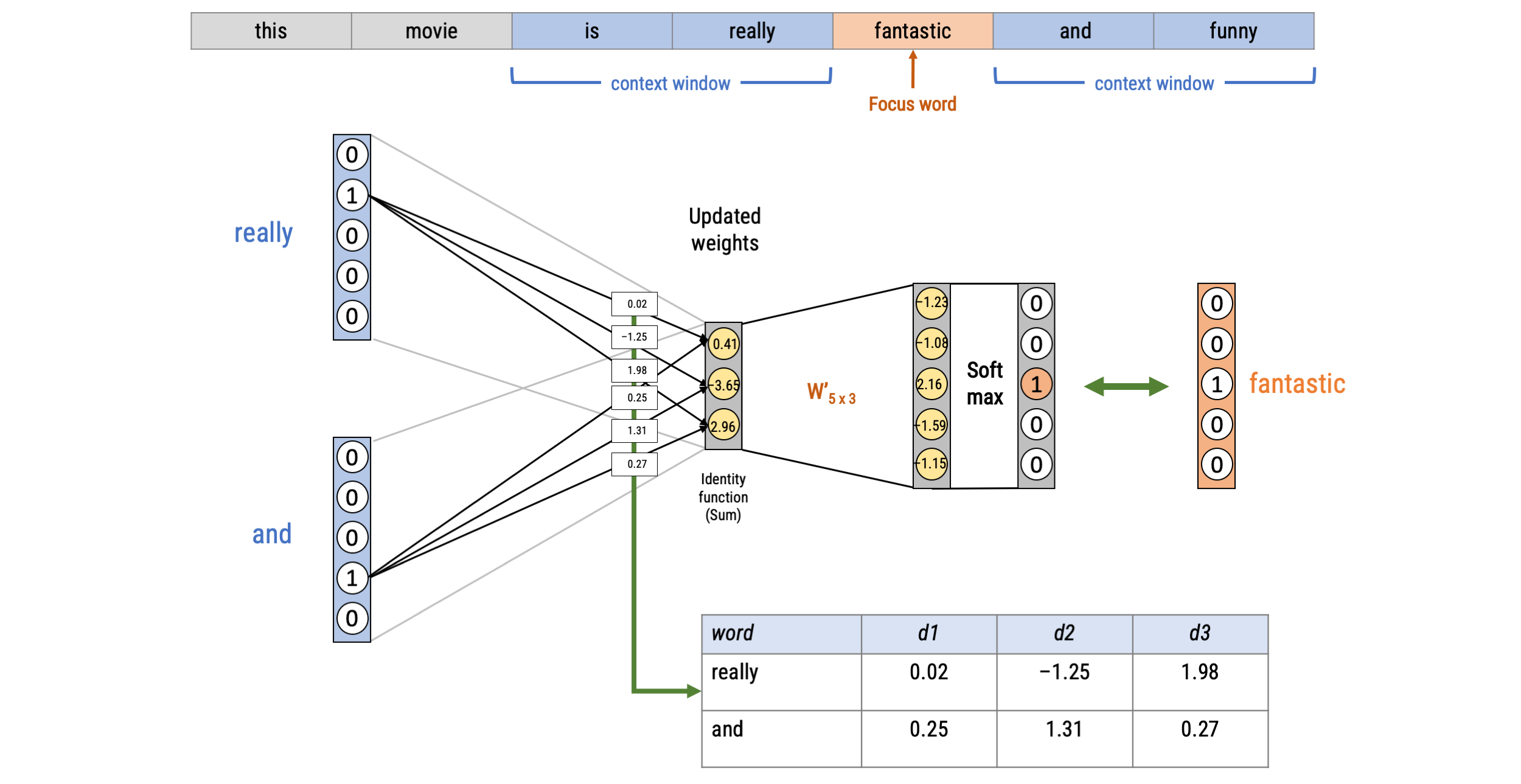

Word embeddings are a “learned” type of word representation that allows words with similar meaning to have a similar representation via a k-dimensional vector space

The first core idea behind word embeddings is that the meaning of a word can be expressed using a relatively small embedding vector, generally consisting of around 300 numbers which can be interpreted as dimensions of meaning.

The second core idea is that these embedding vectors can be derived by scanning the context of each word in millions and millions of documents.

This means that words that are used in similar ways in the training data result in similar representations, thereby capturing their similar meaning.

This can be contrasted with the crisp but rather limited representation of words in a bag of words model where different words have different representations, regardless of how they are used.
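A minimal sketch of this contrast, assuming three hypothetical 3-dimensional embeddings (real embeddings have far more dimensions, and these values are invented): words used in similar ways get a high cosine similarity, whereas one-hot bag-of-words vectors of different words are always unrelated.

```r
# Hypothetical 3-dimensional embeddings (values invented for illustration)
emb <- rbind(
  film  = c(0.9, 0.8, 0.1),
  movie = c(0.8, 0.9, 0.2),
  pizza = c(0.1, 0.2, 0.9)
)

# Cosine similarity between two vectors
cosine <- function(a, b) sum(a * b) / (sqrt(sum(a^2)) * sqrt(sum(b^2)))

sim_film_movie <- cosine(emb["film", ], emb["movie", ])  # close to 1
sim_film_pizza <- cosine(emb["film", ], emb["pizza", ])  # much lower

# In a bag-of-words model each word is its own one-hot vector,
# so any two different words have a similarity of exactly 0
onehot <- diag(3)
rownames(onehot) <- rownames(emb)
sim_onehot <- cosine(onehot["film", ], onehot["movie", ])

c(sim_film_movie, sim_film_pizza, sim_onehot)
```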

How do we get these “values” for each word?

All word embedding methods learn a real-valued vector representation for a predefined fixed sized vocabulary from a corpus of text.

There are many different ways to “learn” or use word embeddings:

Via an embedding layer in a neural network designed for a particular downstream task

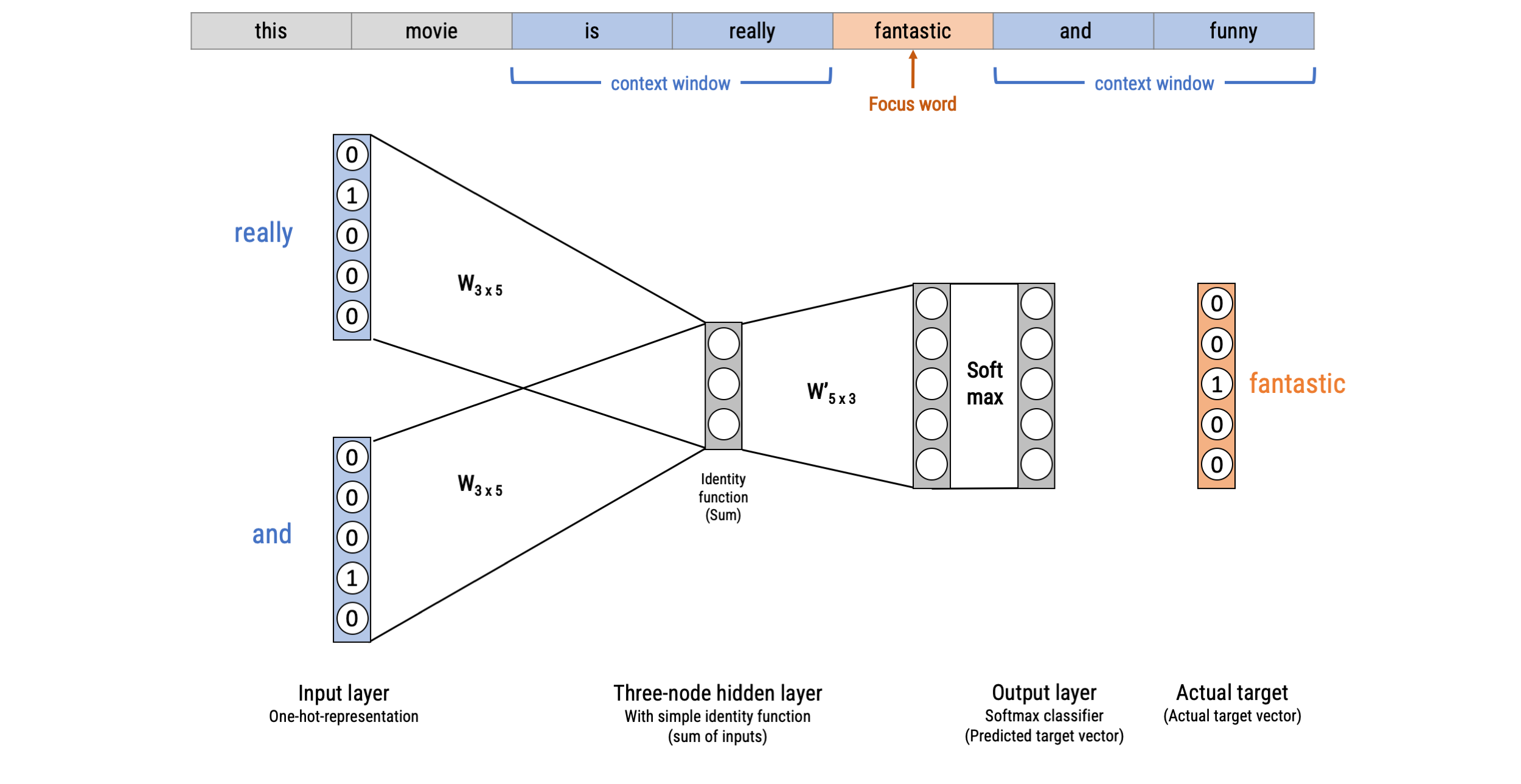

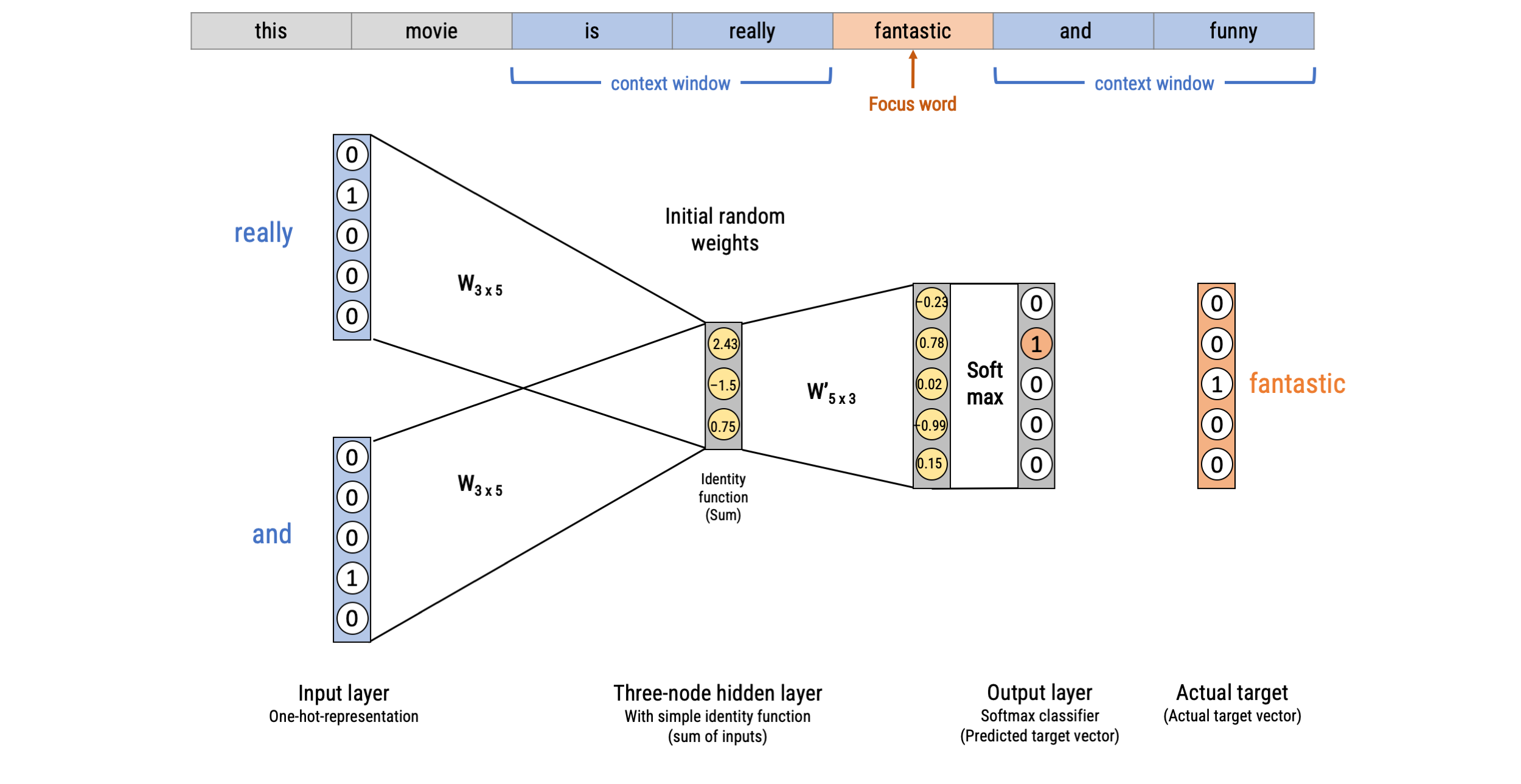

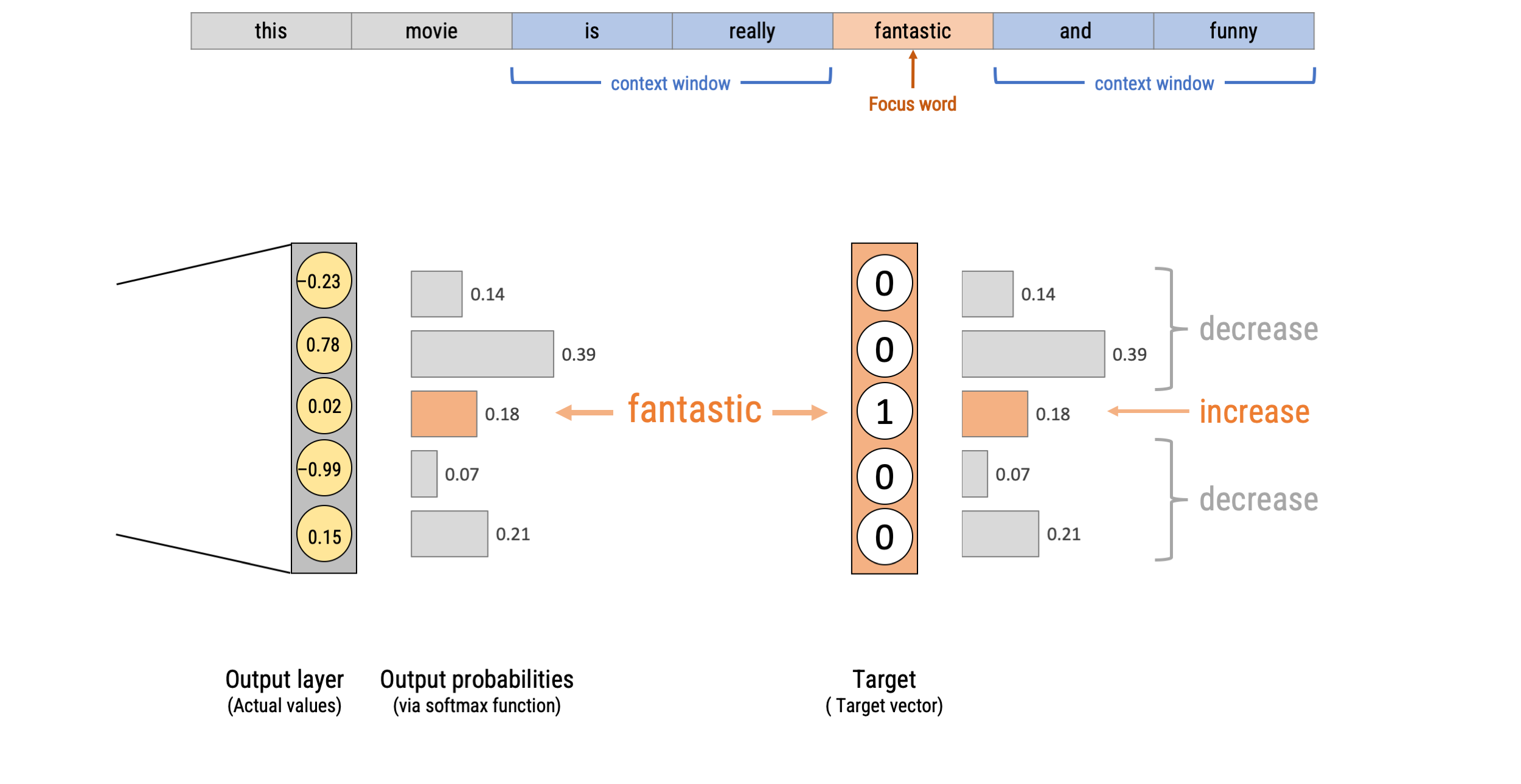

Learning word embeddings using a shallow neural network and context windows (e.g., word2vec)

Learning word embeddings by aggregating a global word-word co-occurrence matrix (e.g., GloVe)

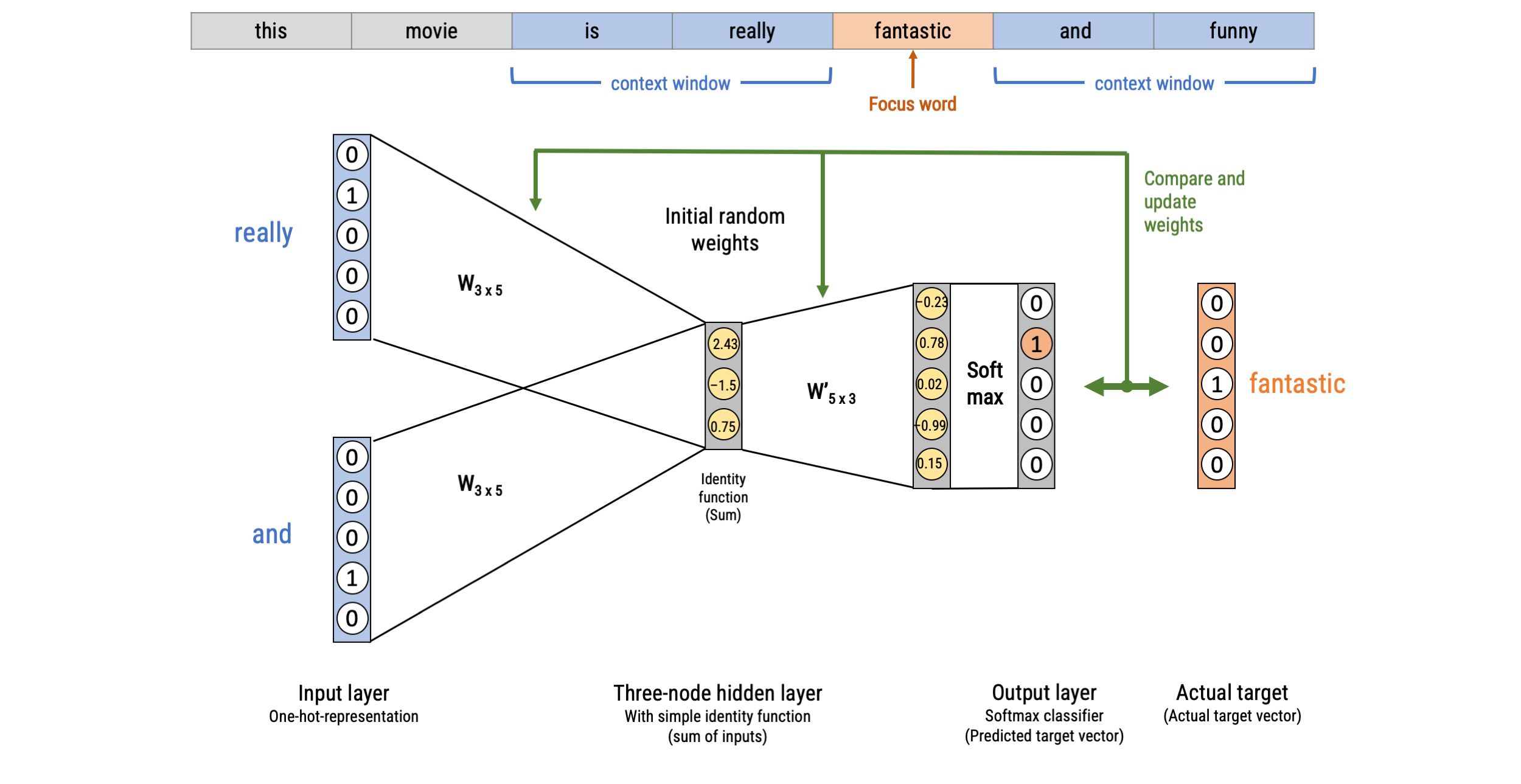

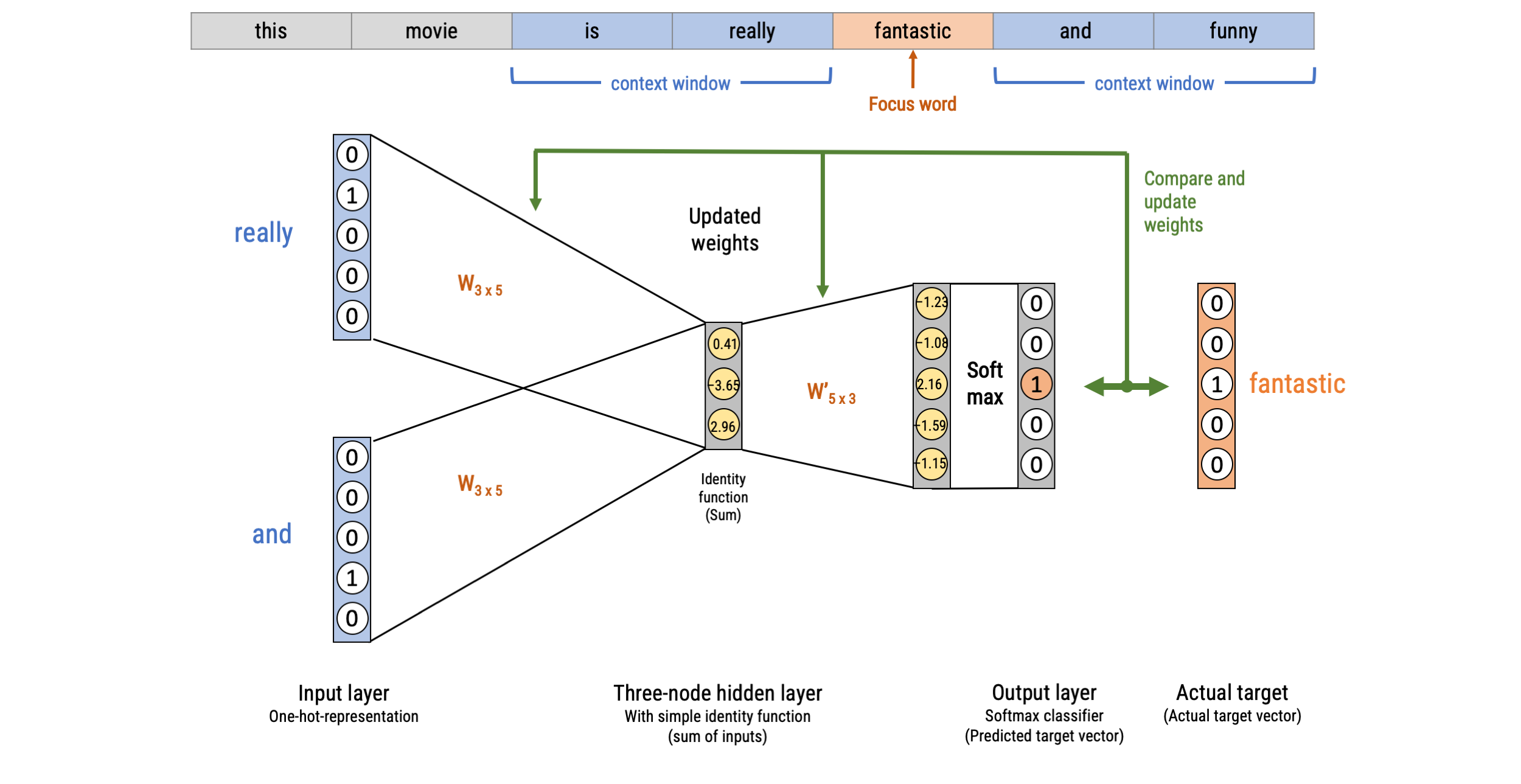

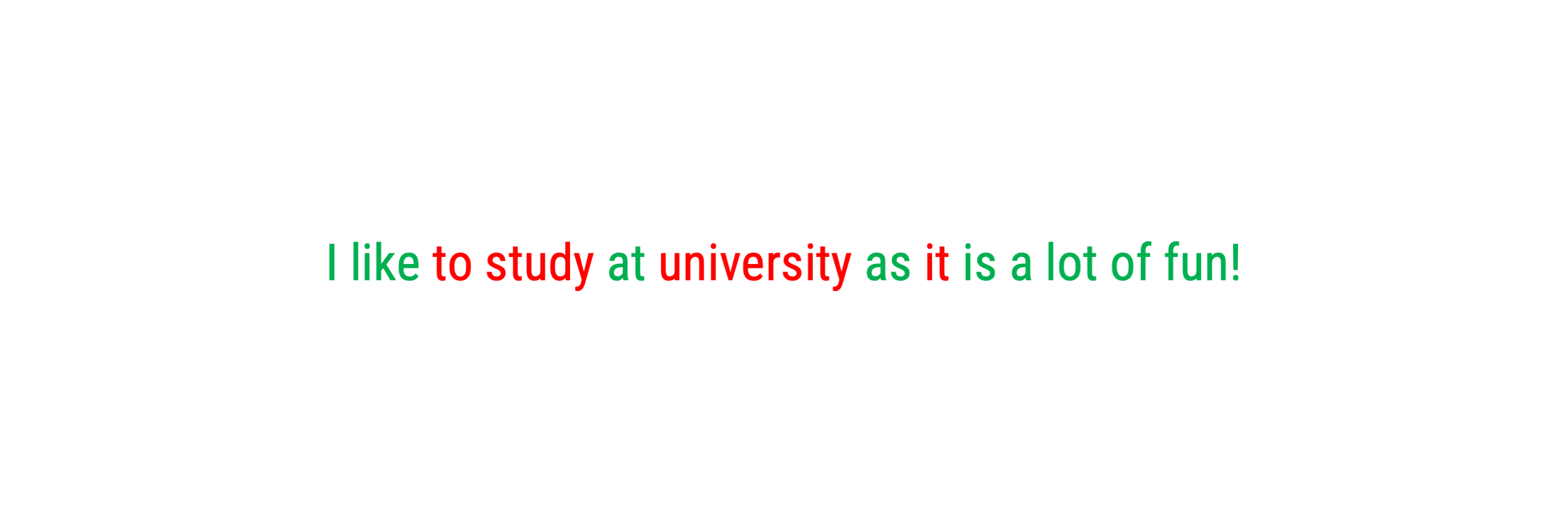

Word2Vec: Continuous Bag-of-words (CBOW)


Pre-trained Word embeddings: GloVe

glove_fn = "glove.6B.50d.10k.w2v.txt"

url = glue::glue("https://cssbook.net/d/{glove_fn}")

if (!file.exists(glove_fn))

download.file(url, glove_fn)

# Data wrangling

wv_tibble <- read_delim(glove_fn, skip=1, delim=" ", quote="",

col_names = c("word", paste0("d", 1:50)))

# 10 highest scoring words on dimension 1

wv_tibble |>

arrange(-d1) |>

select(1:10)

# A tibble: 10,000 × 10

word d1 d2 d3 d4 d5 d6 d7 d8 d9

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 airbus 2.60 -0.536 0.414 0.339 -0.0510 0.848 -0.722 -0.361 0.869

2 spacecraft 2.52 0.744 1.66 0.0591 -0.252 -0.243 -0.594 -0.417 0.460

3 fiat 2.29 -1.15 0.488 0.518 0.312 -0.132 0.0520 -0.661 -0.859

4 naples 2.27 -0.106 -1.27 -0.0932 -0.437 -1.18 -0.0858 0.469 -1.08

5 di 2.24 -0.603 -1.47 0.354 0.244 -1.09 0.357 -0.331 -0.564

6 planes 2.20 -0.831 1.34 -0.348 -0.209 -0.282 -0.824 -0.481 0.211

7 bombings 2.16 -0.222 0.383 0.0723 -0.305 0.632 0.125 -0.123 -0.171

8 flights 2.15 0.350 -0.0175 -0.0238 -1.39 -0.812 -0.742 0.204 1.23

9 orbit 2.14 1.08 1.66 -0.180 0.323 -0.394 0.0476 -0.0108 0.403

10 plane 2.11 -0.107 0.972 0.122 0.571 -0.136 -0.676 0.117 0.472

# ℹ 9,990 more rows

Similarities between words

As mentioned before, we can compute similarity scores for word pairs:

wv <- as.matrix(wv_tibble[-1])

rownames(wv) <- wv_tibble$word

wv <- wv / sqrt(rowSums(wv^2))

wvector <- function(wv, word) wv[word, , drop = FALSE]

wv_similar <- function(wv, target, n=5) {

similarities = wv %*% t(target)

similarities |>

as_tibble(rownames = "word") |>

rename(similarity=2) |>

arrange(-similarity) |>

head(n=n)

}

Similarity of entire sentences or texts

But we can also generalize word embeddings to entire sentences (or even texts):

library(ccsamsterdamR)

# Example sentences

movies <- tibble(sentences = c("This movie is great, I loved it.",

"The film was fantastic, a real treat!",

"I did not like this movie, it was not great.",

"Today, I went to the cinema and watched a movie",

"I had pizza for lunch."))

# Get embeddings from a sentence transformer

movie_embeddings <- hf_embeddings(txt = movies$sentences)

# Each text has now 384 values

movie_embeddings

# A tibble: 5 × 384

V1 V2 V3 V4 V5 V6 V7 V8 V9 V10 V11 V12 V13 V14

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 -0.0875 -0.0156 -0.0380 -0.0586 -0.0842 0.0164 -0.0222 0.0451 0.0449 -0.00546 -0.0115 -0.00166 0.00575 0.0362

2 -0.0544 0.0381 -0.0443 -0.0111 -0.0464 -0.00760 -0.0523 0.0173 -0.0725 -0.0432 0.0206 0.00903 0.0223 0.0208

3 -0.0838 0.0135 -0.0938 -0.0351 -0.0848 -0.0424 -0.00107 0.0537 0.0559 -0.0729 0.0153 0.0359 0.0396 0.0387

4 -0.0442 0.00655 0.0754 -0.0341 0.0637 0.00674 0.0993 -0.0369 0.0752 -0.0405 0.0222 0.0284 -0.0243 0.0916

5 -0.0257 0.0440 0.0407 0.00391 -0.0818 -0.0621 0.0836 -0.0138 -0.101 -0.0757 0.0882 -0.0530 0.0351 -0.0716

# ℹ 370 more variables: V15 <dbl>, V16 <dbl>, V17 <dbl>, V18 <dbl>, V19 <dbl>, V20 <dbl>, V21 <dbl>, V22 <dbl>,

# V23 <dbl>, V24 <dbl>, V25 <dbl>, V26 <dbl>, V27 <dbl>, V28 <dbl>, V29 <dbl>, V30 <dbl>, V31 <dbl>, V32 <dbl>,

# V33 <dbl>, V34 <dbl>, V35 <dbl>, V36 <dbl>, V37 <dbl>, V38 <dbl>, V39 <dbl>, V40 <dbl>, V41 <dbl>, V42 <dbl>,

# V43 <dbl>, V44 <dbl>, V45 <dbl>, V46 <dbl>, V47 <dbl>, V48 <dbl>, V49 <dbl>, V50 <dbl>, V51 <dbl>, V52 <dbl>,

# V53 <dbl>, V54 <dbl>, V55 <dbl>, V56 <dbl>, V57 <dbl>, V58 <dbl>, V59 <dbl>, V60 <dbl>, V61 <dbl>, V62 <dbl>,

# V63 <dbl>, V64 <dbl>, V65 <dbl>, V66 <dbl>, V67 <dbl>, V68 <dbl>, V69 <dbl>, V70 <dbl>, V71 <dbl>, V72 <dbl>,

# V73 <dbl>, V74 <dbl>, V75 <dbl>, V76 <dbl>, V77 <dbl>, V78 <dbl>, V79 <dbl>, V80 <dbl>, V81 <dbl>, V82 <dbl>, …

The subtle similarity of some texts in the example

We can see that text 2 is most similar to text 1: Both express a very similar sentiment, just with different words (“great” ≈ “fantastic”; “I loved it” ≈ “A real treat”)

Text 2 is still similar to text 3 (after all it is about movies), but less so compared to text 1 (“fantastic” is the opposite of “not great”)

Text 4 still shares similarities (the context is the cinema/watching movies), but text 5 is very different as it doesn’t contain similar words and is not about similar things (except “I”).
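Pairwise similarities like these can be read off a cosine similarity matrix. The sketch below uses an invented 5 × 4 matrix as a stand-in for the real 5 × 384 embeddings; the numbers are chosen only to mimic the pattern described above, and with real data one would presumably start from as.matrix(movie_embeddings).

```r
# Stand-in for the real sentence embeddings: 5 texts x 4 dimensions.
# These numbers are invented for illustration.
emb <- rbind(
  text1 = c( 0.8,  0.5,  0.1, 0.0),
  text2 = c( 0.7,  0.6,  0.2, 0.1),
  text3 = c( 0.6, -0.4,  0.3, 0.1),
  text4 = c( 0.3,  0.0,  0.8, 0.2),
  text5 = c(-0.1,  0.1,  0.1, 0.9)
)

# Normalize each row to unit length; the cross-product of the
# normalized matrix then holds all pairwise cosine similarities
emb_norm <- emb / sqrt(rowSums(emb^2))
sim <- emb_norm %*% t(emb_norm)

round(sim, 2)
```

Each row of the result shows how similar one text is to all others; the diagonal is 1 because every text is identical to itself.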

Word Embeddings as Input

Word, sentence, or text embedding vectors can then be used as features in further text analysis tasks

Think about the example we just investigated: The sentence embeddings did capture some difference between:

- “The film was fantastic, a real treat!” (positive)

- “I did not like this movie, it was not great.” (negative)

Approaches from the last lecture (classic machine learning) would have a hard time detecting the negation “not great”.

Yet, bear in mind: We do not know what the 100+ (often >300) dimensions actually mean (→ we cannot look under the hood later!)

From Sparse to Dense Matrix Representation

Using embedding vectors instead of word frequencies has the further advantage of strongly reducing the dimensionality of the DTM: instead of (tens of) thousands of columns, one for each unique word, we only need hundreds of columns for the embedding vectors (→ dense instead of sparse)

This means that further processing can be more efficient as fewer parameters need to be fit, or conversely that more complicated models can be used without blowing up the parameter space.
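One simple way to obtain such a dense representation is to average the word vectors of each text. This is only a sketch of that idea, with invented 3-dimensional vectors and a hypothetical helper doc_vector(); it is not necessarily how the sentence embeddings above were produced.

```r
# Hypothetical word vectors (3 dimensions, values invented for illustration)
wv <- rbind(
  great     = c(0.9, 0.1, 0.0),
  fantastic = c(0.8, 0.2, 0.1),
  movie     = c(0.1, 0.9, 0.0),
  film      = c(0.2, 0.8, 0.1),
  pizza     = c(0.0, 0.1, 0.9)
)

texts <- c("great movie", "fantastic film", "pizza")

# Average the vectors of all known words in a text
# (a text without any known word would yield NaN values)
doc_vector <- function(text, wv) {
  words <- strsplit(tolower(text), "\\s+")[[1]]
  words <- words[words %in% rownames(wv)]
  colMeans(wv[words, , drop = FALSE])
}

# One row per text, one column per embedding dimension
doc_emb <- t(sapply(texts, doc_vector, wv = wv))
doc_emb
```

The number of columns now depends on the embedding size rather than on the vocabulary size, which is what keeps the feature matrix dense and small.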

Text classification with Word-Embeddings

Doing analysis with word embeddings themselves

Based on vector-based computations, we can also analyse semantic relationships

This is by now a quite common approach in research on gender and other types of stereotypes

Source: https://developers.google.com

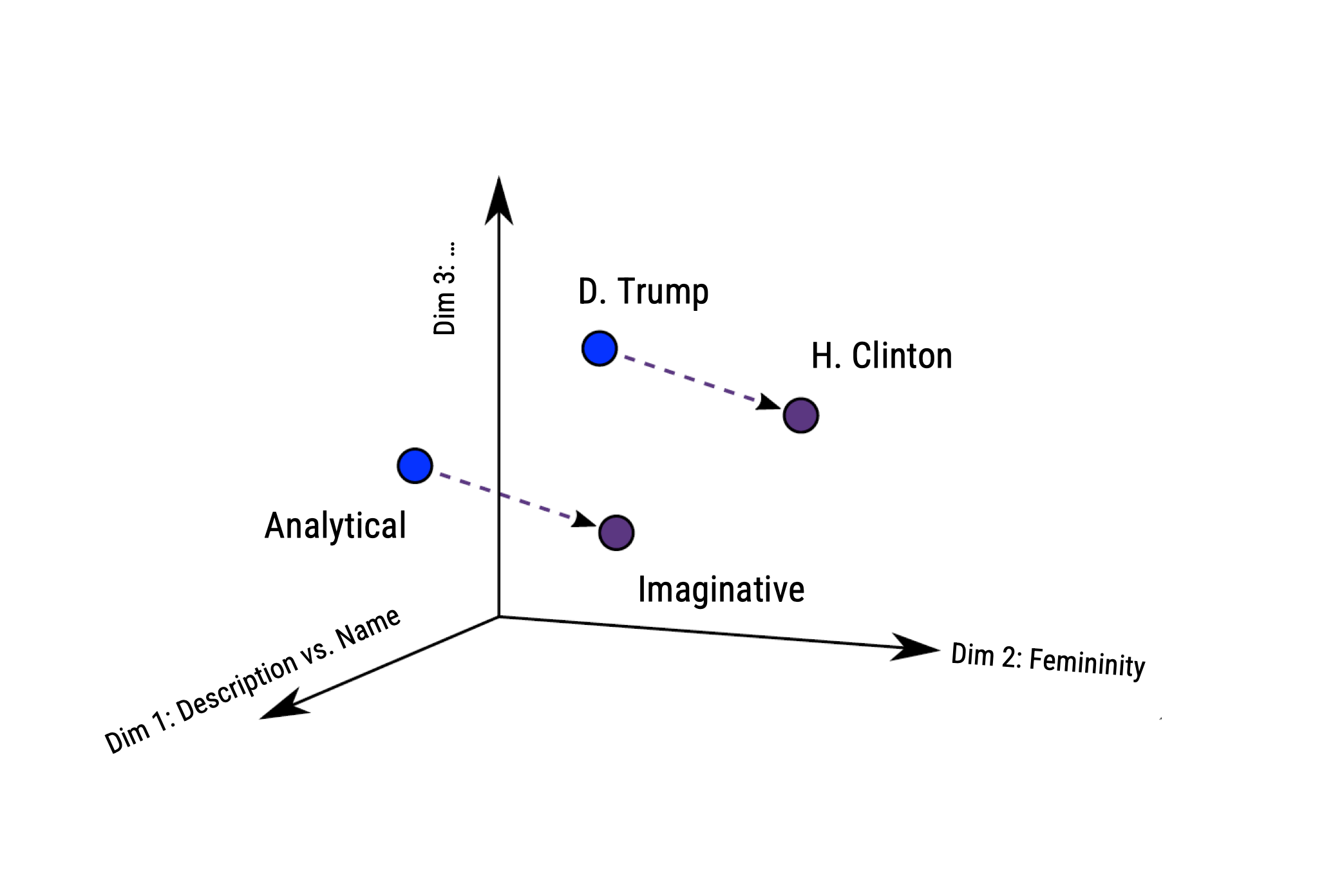

Example from the literature: Gender-Stereotypes

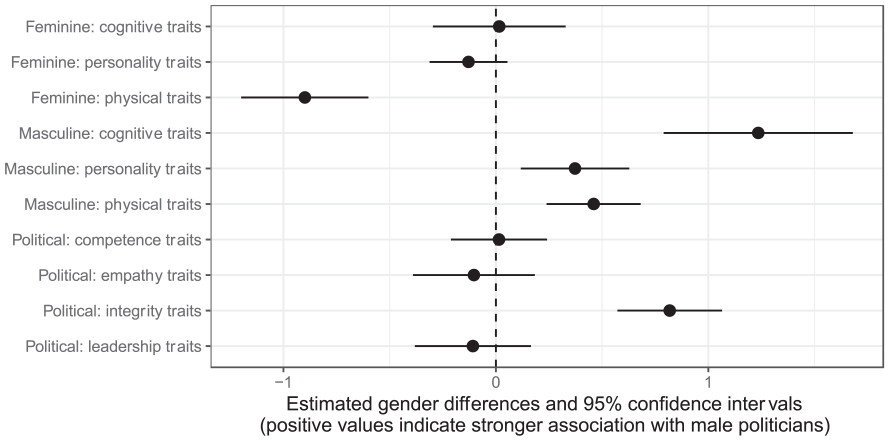

Andrich et al. (2023) examine stereotypical traits in portrayals of 1,095 U.S. politicians.

They analyzed 5 million U.S. news stories published from 2010 to 2020 to study gender-linked (feminine, masculine) and political (leadership, competence, integrity, empathy) traits

Methodologically, they estimated word embeddings using the Continuous Bag of Words (CBOW) model, meaning that a target word (e.g., honest) is predicted from its context (e.g., Who thinks President Trump is [target word]?)

Bias can thus be identified if e.g., gender-neutral words (e.g., competent) are closer to words that represent one gender (e.g., donald_trump) than to words that represent the opposite gender (e.g., hillary_clinton).
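The logic of such a comparison can be sketched as a difference between two cosine similarities. The vectors, the anchor names, and the helper association_gap() below are hypothetical and only illustrate the principle; this is not the exact procedure of Andrich et al. (2023).

```r
cosine <- function(a, b) sum(a * b) / sqrt(sum(a^2) * sum(b^2))

# Hypothetical embeddings, invented for illustration only
emb <- rbind(
  competent     = c(0.7, 0.6, 0.1),
  male_anchor   = c(0.8, 0.5, 0.2),
  female_anchor = c(0.3, 0.6, 0.7)
)

# Positive values: the trait word sits closer to the male anchor;
# negative values: it sits closer to the female anchor
association_gap <- function(trait, emb) {
  cosine(emb[trait, ], emb["male_anchor", ]) -
    cosine(emb[trait, ], emb["female_anchor", ])
}

gap <- association_gap("competent", emb)
gap
```

A gap near zero would indicate that the trait word is about equally close to both anchors in the embedding space.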

Results

All three masculine traits were more strongly associated with male politicians.

In contrast, only the feminine physical traits were more strongly associated with female politicians.

Differences remained stable across time.

The Rise of Transformers and Transfer Learning

Origin of Transformer Models

Until 2017, the state-of-the-art for natural language processing was using a deep neural network (e.g., recurrent neural networks, long short-term memory and gated recurrent neural networks)

In a preprint called “Attention is all you need”, published in 2017 and cited more than 95,000 times, a team at Google Brain introduced the so-called Transformer, a neural network architecture that learns context and thus meaning by tracking relationships in sequential data like the words in this sentence.

Transformer models apply an evolving set of mathematical techniques, called attention or self-attention, to detect subtle ways even distant data elements in a series influence and depend on each other.

The proposed network structure had notable characteristics:

- No need for recurrent or convolutional network structures

- Based solely on attention mechanism (stacked on top of one another)

- Requires less training time (can be parallelized)

- Outperformed prior state-of-the-art models in a variety of tasks

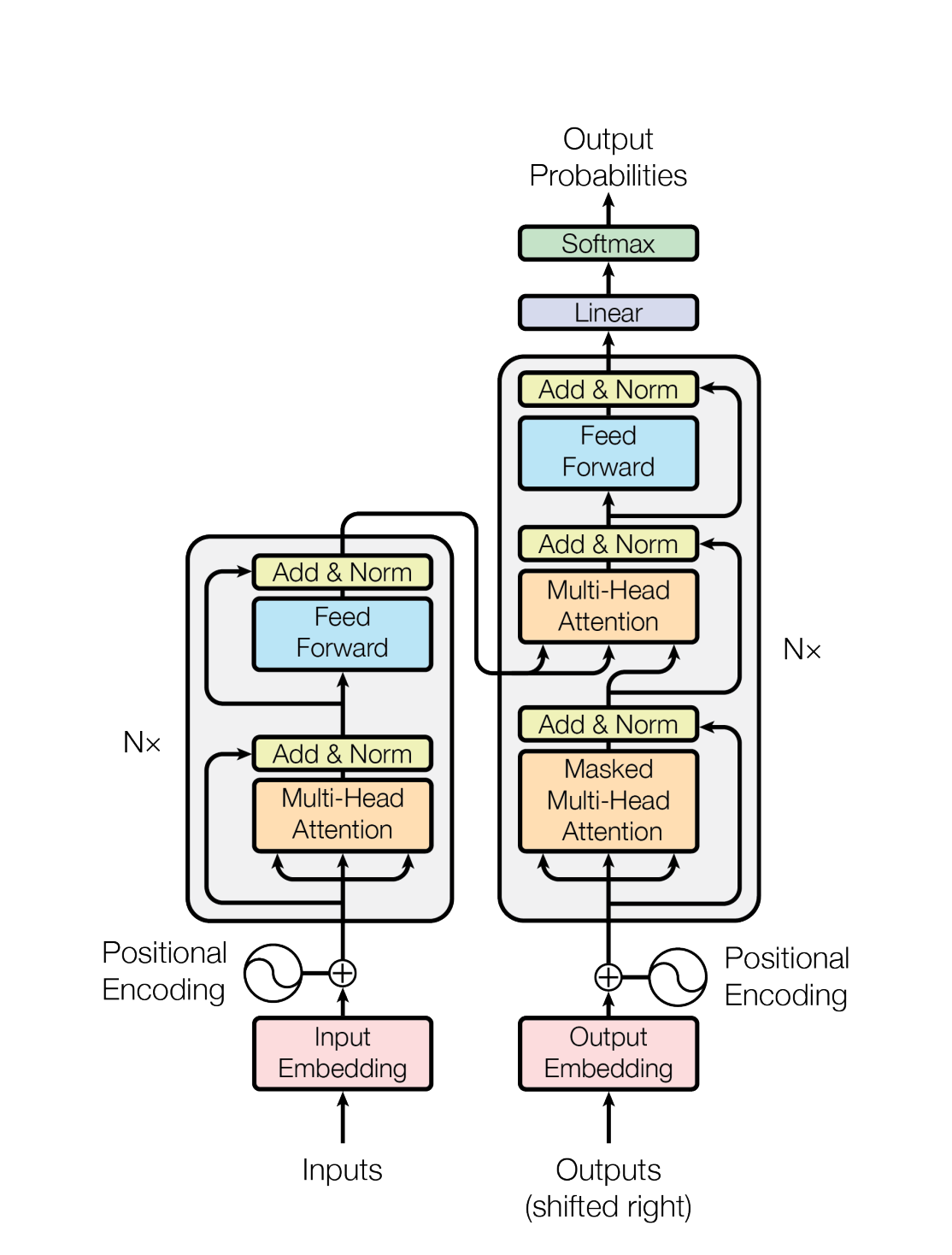

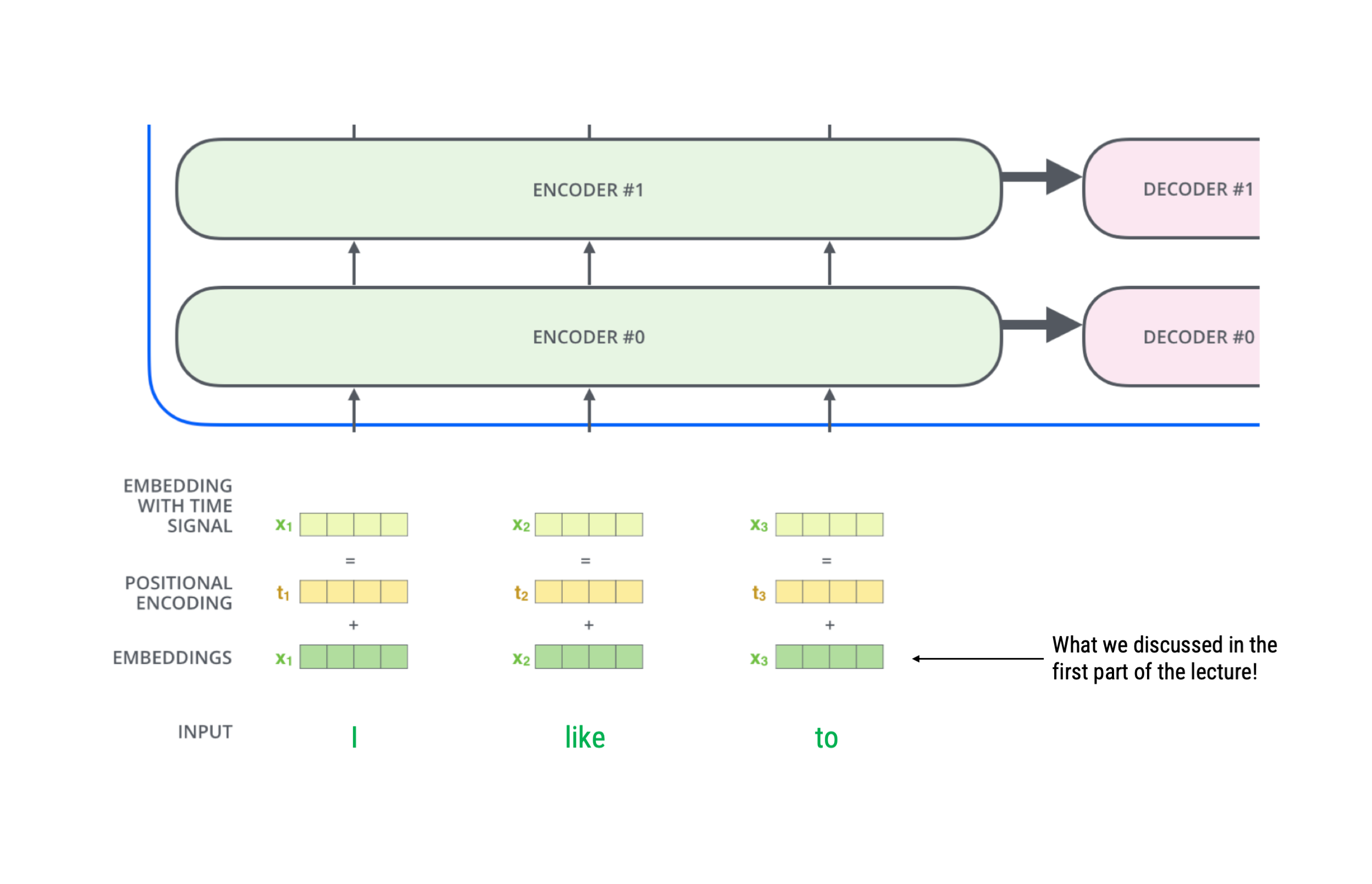

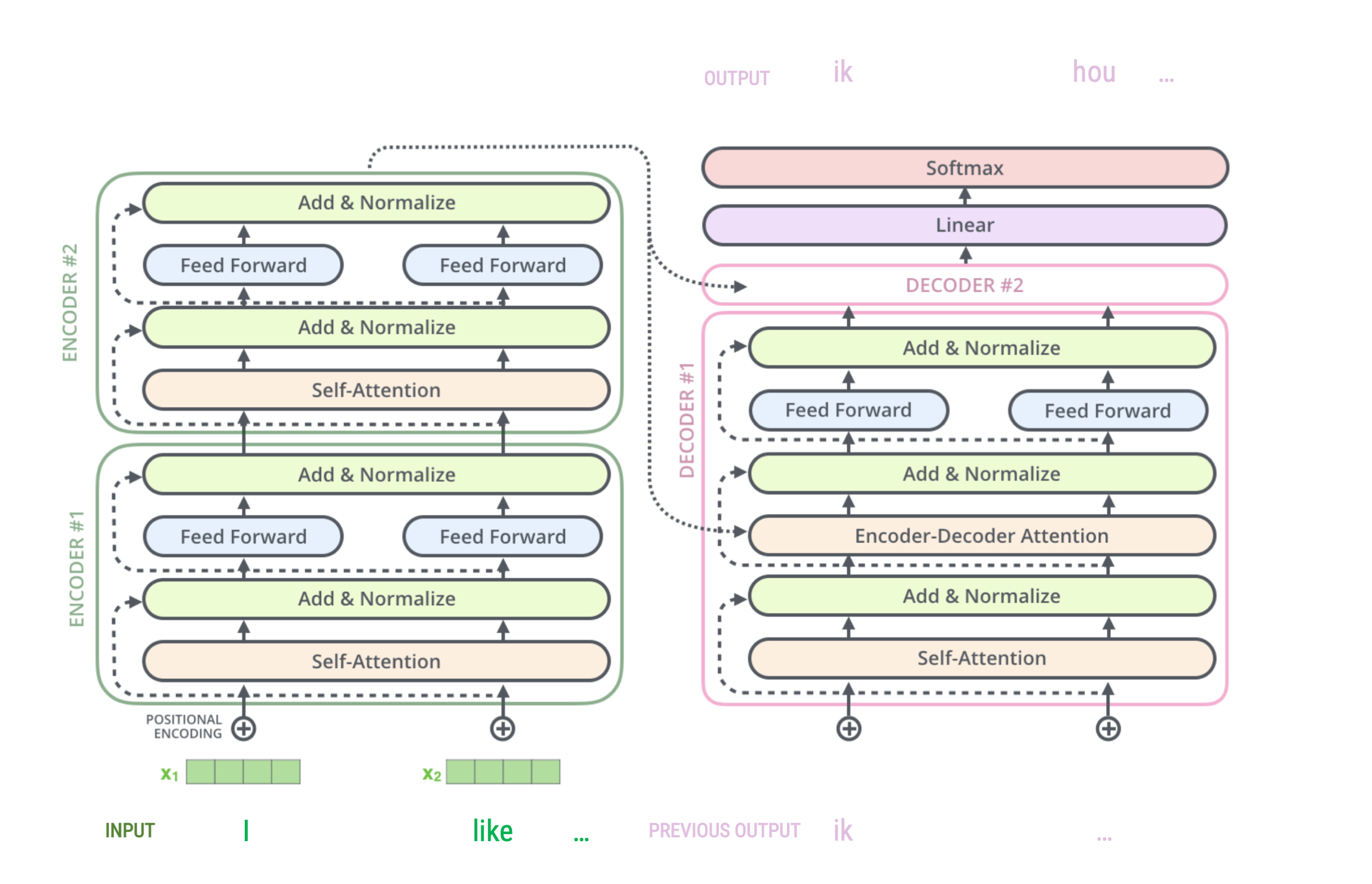

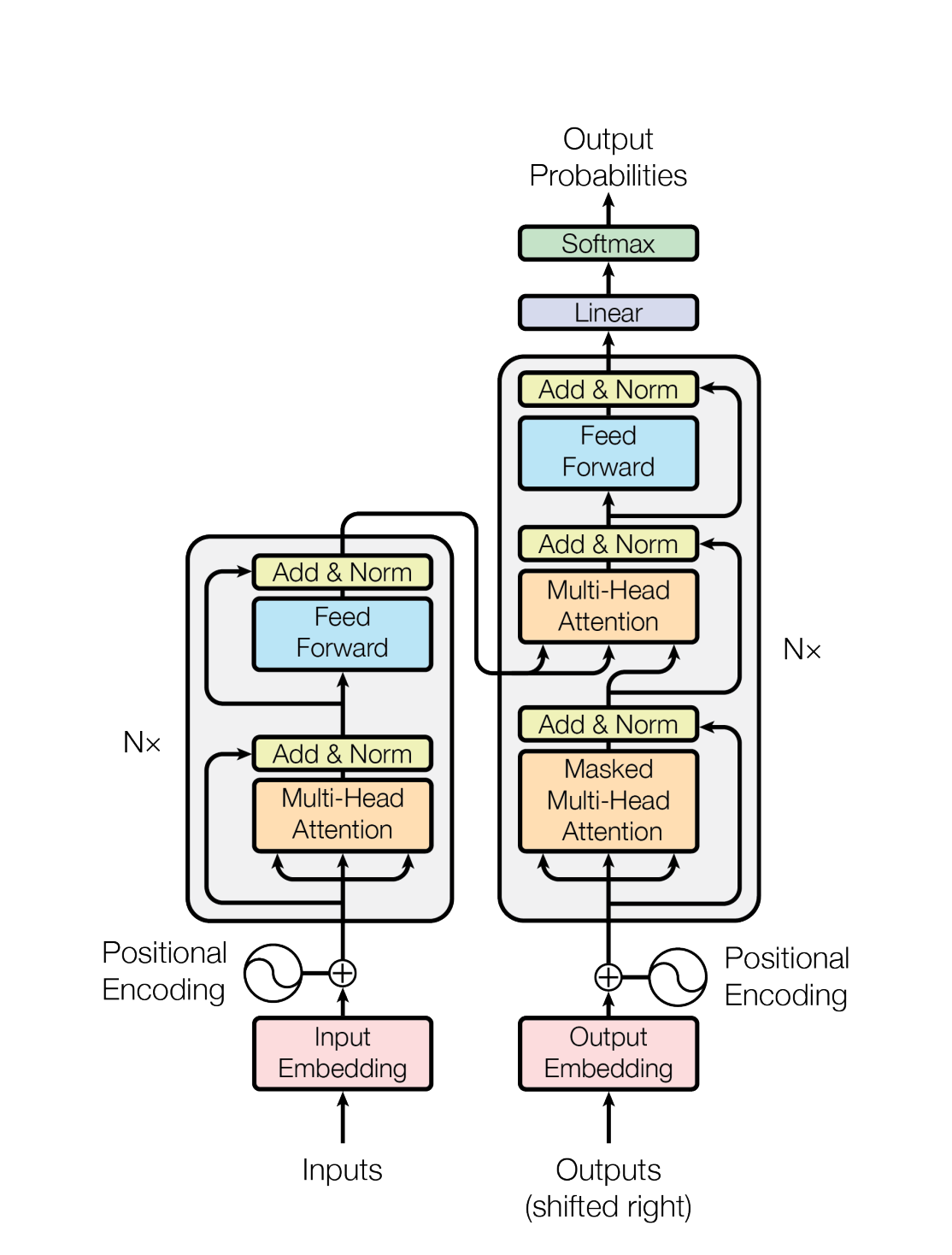

Overview of the architecture

The figure on the right represents an abstract overview of a transformer’s architecture

It can be used for sequence-to-sequence predictions

- classic example is translation: e.g., English-to-Dutch

- but also: question-to-answer, text-to-summary, sentence-to-next-sentence…

Although models can differ, they generally include:

- An encoder-decoder framework

- Word embeddings + positional embedding

- Attention and self-attention modules

We won’t cover any of these in much detail and just aim for a high-level understanding

Vaswani et al. 2017

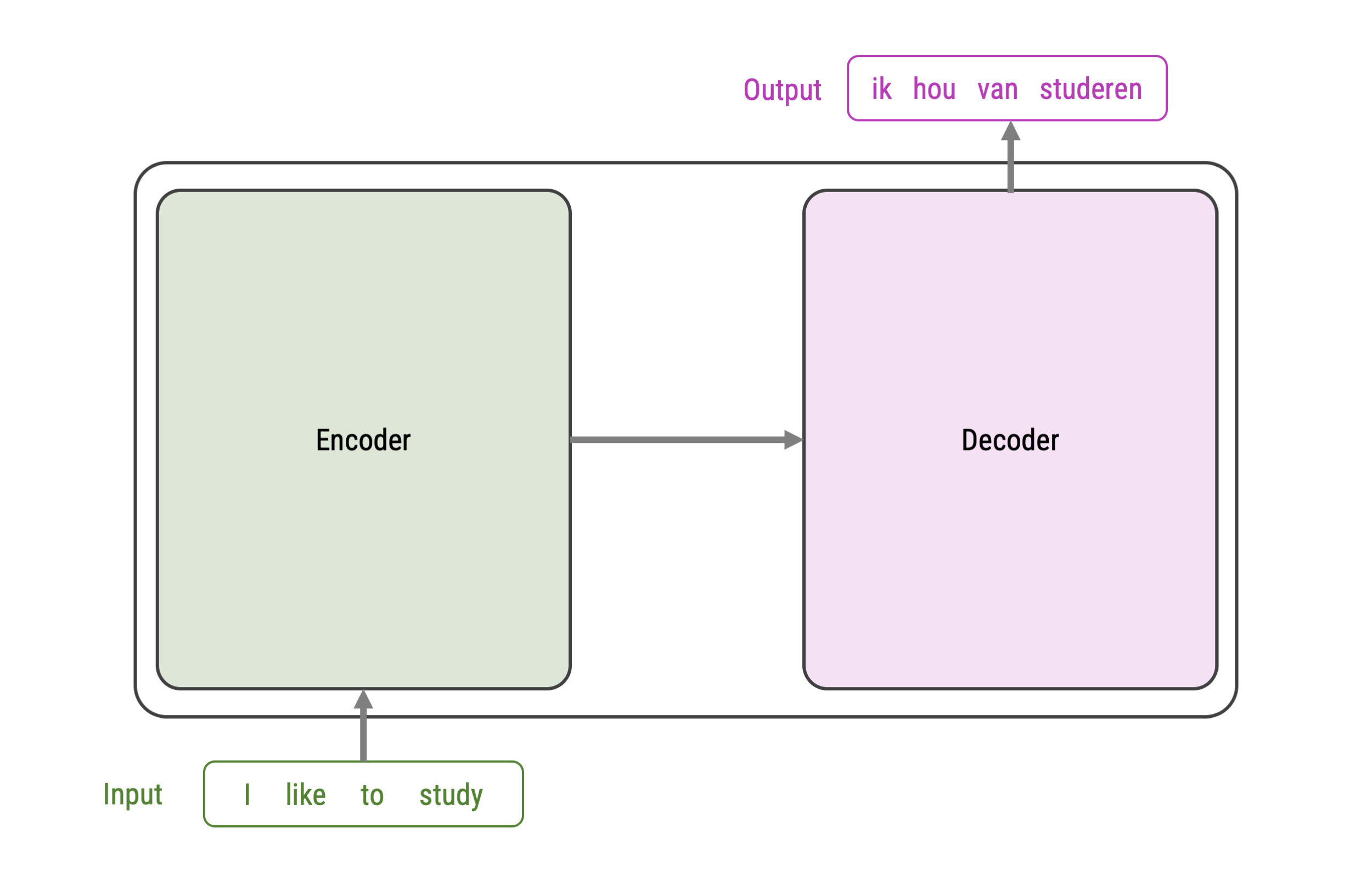

Basic Encoder-Decoder Framework (for Translation)

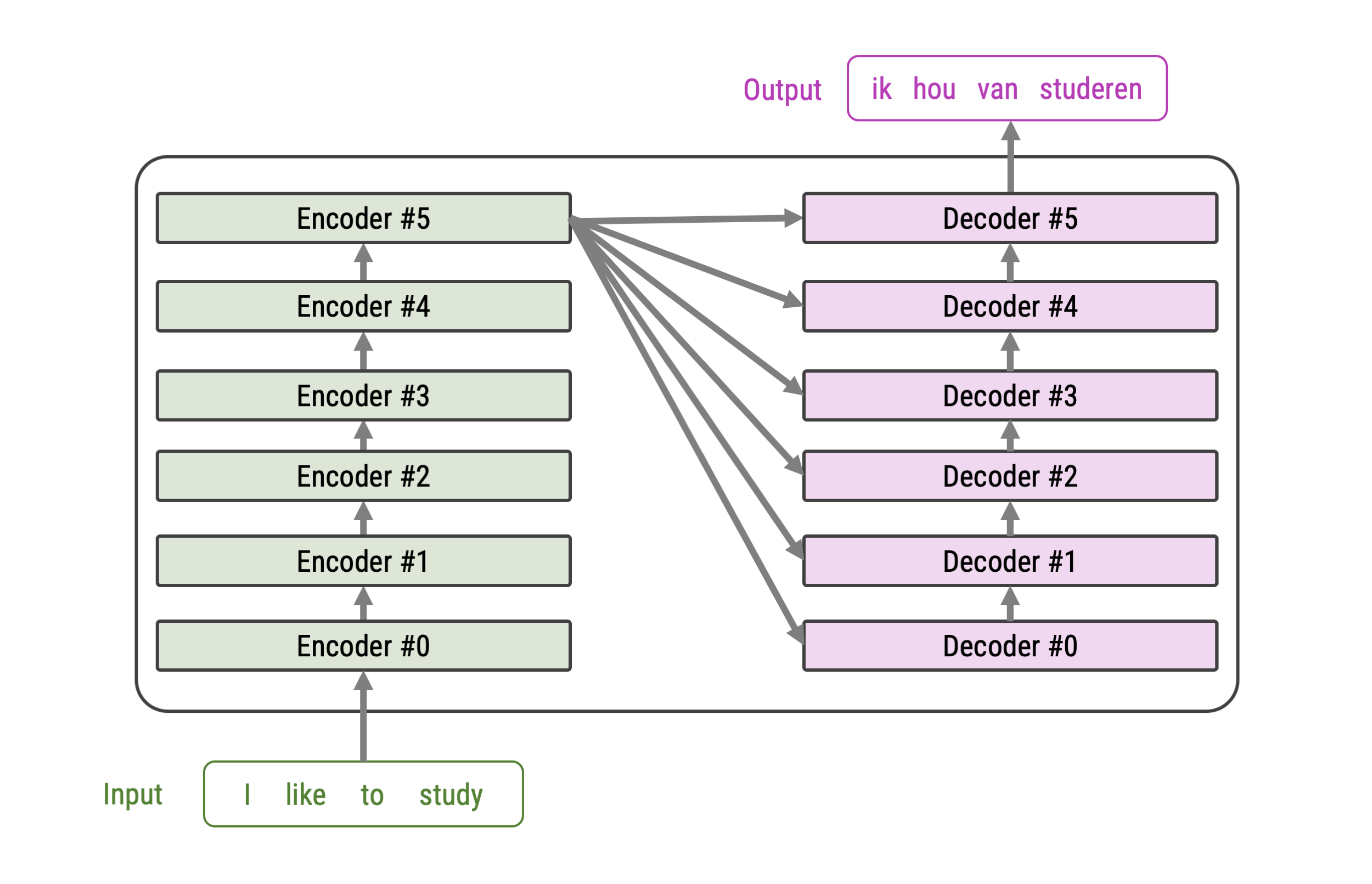

Stacked Encoders and Decoders

More elaborate encoding of words

Source: Alammar, 2018

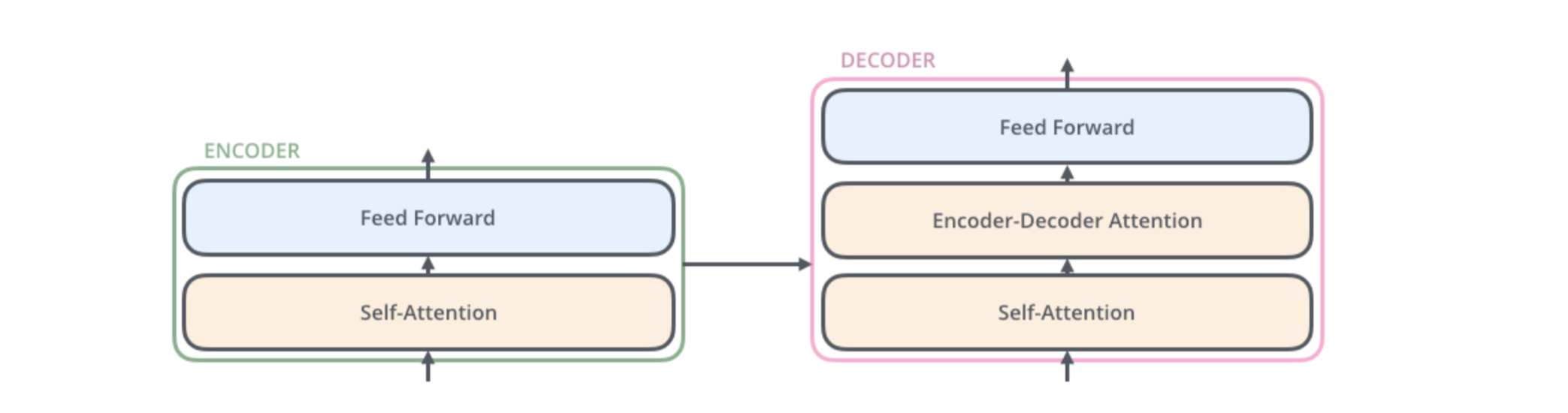

Inside of an encoder and a decoder

The word, position, and time signal embeddings are passed to the first encoder

Here, they flow through a self-attention layer, which further refines the encoding by “looking at other words” as it encodes a specific word

The outputs of the self-attention layer are fed to a feed-forward neural network.

The decoder likewise has both layers, but also an extra attention layer that helps it focus on different parts of the input (i.e., the encoder’s outputs)

Source: Alammar, 2018

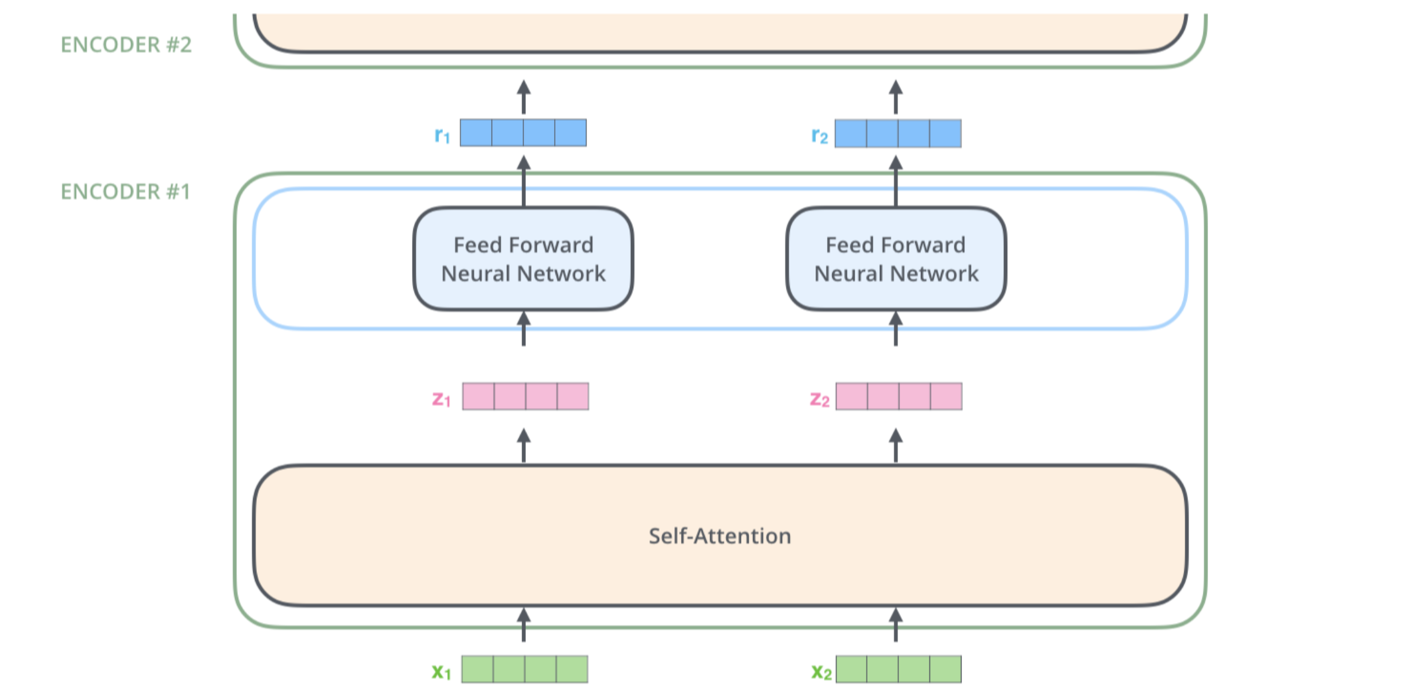

Encoding pipeline

The embedding only happens in the bottom-most encoder.

In other encoders, it would be the output of the encoder that’s directly below.

Source: Alammar, 2018

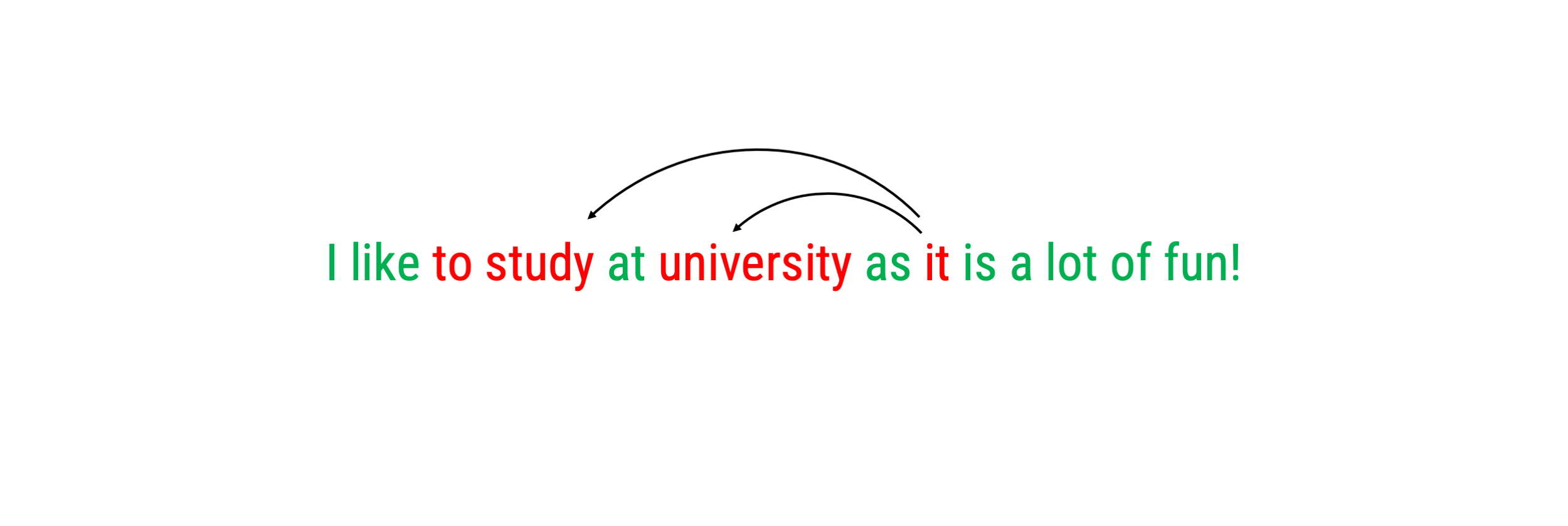

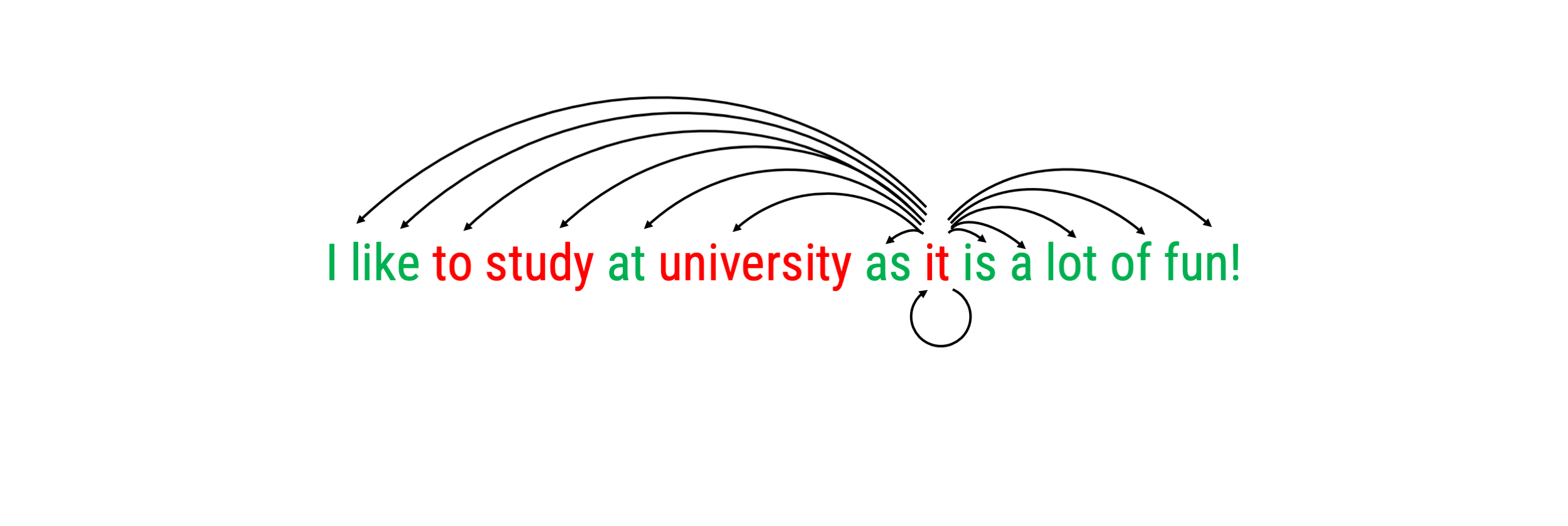

Self-Attention

In general terms, self-attention encodes how similar each word is to all the words in the sentence, including itself.

Once the similarities are calculated, they are used to determine how the transformer encodes each word.
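A heavily simplified sketch of this idea in base R: the embeddings are invented, and queries, keys, and values are taken to be the embeddings themselves, whereas a real transformer first multiplies them by three learned weight matrices.

```r
# Row-wise softmax: turns each row of scores into weights that sum to 1
softmax_rows <- function(x) {
  e <- exp(x - apply(x, 1, max))
  e / rowSums(e)
}

# Toy input: 3 words, each with a 4-dimensional embedding (invented values)
X <- rbind(
  the = c(0.1, 0.0, 0.2, 0.1),
  cat = c(0.9, 0.3, 0.1, 0.4),
  sat = c(0.2, 0.8, 0.5, 0.1)
)

# Simplification: queries, keys, and values are the embeddings themselves
scores  <- X %*% t(X) / sqrt(ncol(X))  # similarity of each word to every word
weights <- softmax_rows(scores)        # attention weights, one row per word
encoded <- weights %*% X               # each word becomes a weighted mix

round(weights, 2)
```

Each row of weights states how much every word in the sentence contributes to the new encoding of one word.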


Self-Attention at a High Level

As the encoder processes each word (each position in the input sequence), self-attention allows it to look at other positions in the input sequence for clues that can help lead to a better encoding for this word.

On the decoder side, the self-attention layer is only allowed to attend to earlier positions in the output sequence. This is done by masking future positions (setting them to -inf) before the softmax step in the self-attention calculation.
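The masking step can be sketched as follows. The attention scores are random numbers used purely for illustration; only the masking and the softmax correspond to what is described above.

```r
# Row-wise softmax: turns each row of scores into weights that sum to 1
softmax_rows <- function(x) {
  e <- exp(x - apply(x, 1, max))
  e / rowSums(e)
}

# Toy attention scores for a 4-token output sequence (random, for illustration)
set.seed(1)
scores <- matrix(rnorm(16), nrow = 4)

# Mask future positions: position i may only attend to positions 1..i
masked <- scores
masked[upper.tri(masked)] <- -Inf

weights <- softmax_rows(masked)
round(weights, 2)  # entries above the diagonal are exactly 0
```

Because exp(-Inf) is 0, masked positions receive no weight at all, so the first token can only attend to itself.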

The actual architecture of this step is incredibly complex, so we keep it at that for now.

Putting it all together

Source: Alammar, 2018

High-level process

The transformer starts by creating word embeddings (combinations of similarity, position, time signal)

The encoders start by processing the input sequence (embeddings).

The output of the top encoder is then transformed into a set of attention vectors which are used by each decoder in its “encoder-decoder attention” layer which helps the decoder focus on appropriate places in the input sequence

The decoder spits out a first output (e.g., the word “I”), which then becomes the input for the decoder in follow-up steps

The decoder repeats these steps until a special symbol (marking the “end of sentence”) is reached.

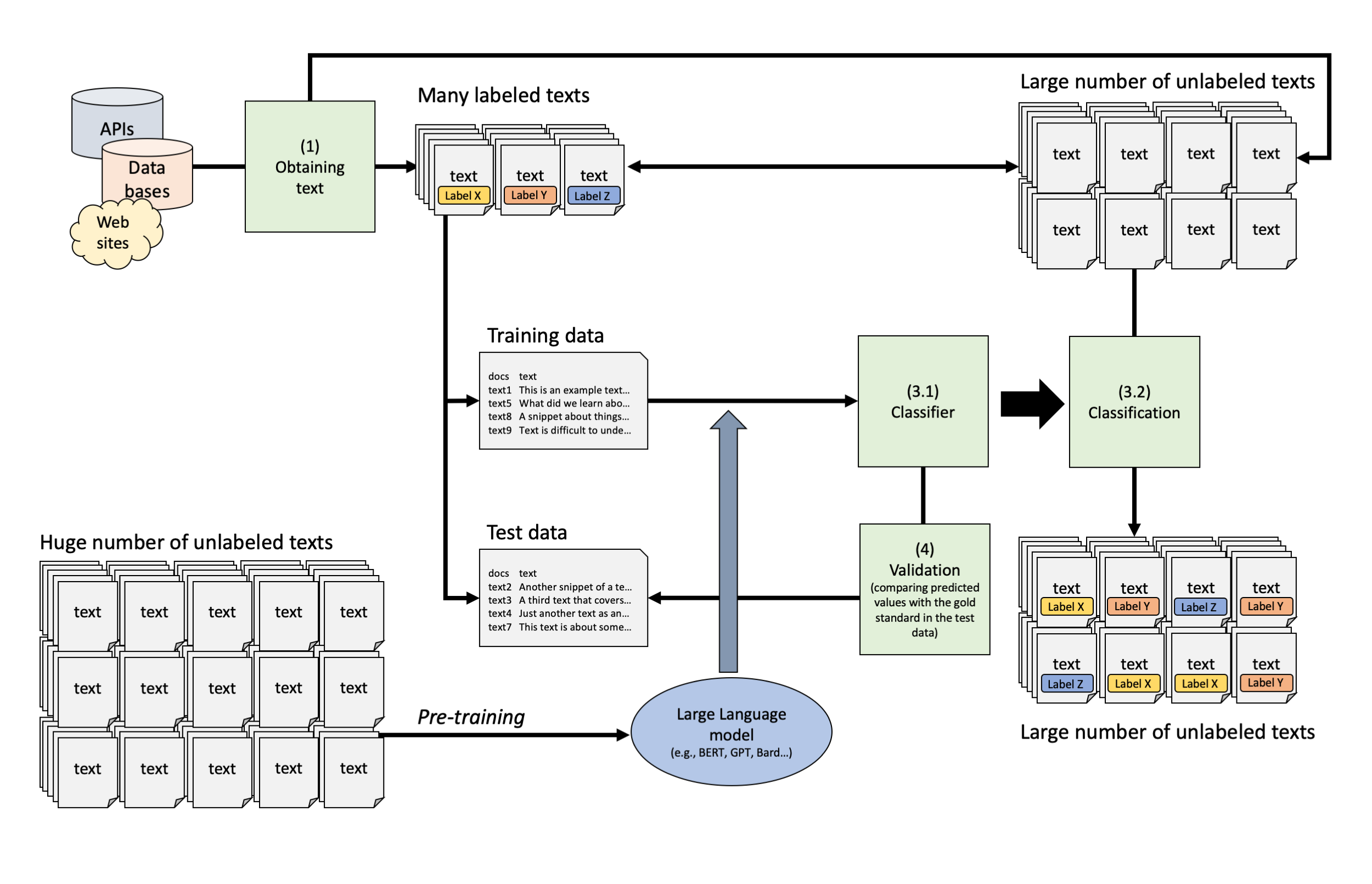

Text Classification Pipeline Using Transformers

Pre-training and transfer learning

Generally, transformer models are pre-trained using specific natural language processing tasks

It has been shown that this (often self-supervised or unsupervised) training is often sufficient to let the model perform well on many downstream tasks

However, the general idea would be to use a pre-trained model and then “fine-tune” it on the specific tasks it is supposed to perform (e.g., annotating text with topics or sentiment)

Although the transformer’s architecture has made training more efficient (due to the ability to parallelize), it nonetheless requires significant computing power to fine-tune a model

Luckily, transformers are the backbone of today’s large language models, which, due to their immense training, are able to perform very well on most tasks without any specific fine-tuning (called zero-shot learning).

As pre-training often involves tasks that differ from what we want the model to do, this is often denoted as “transfer learning”: a type of learning that transfers to other tasks as well

Large Language Models: BERT, GPT and the “AI Revolution”?

Source: Christian Behler on Medium

What are large language models

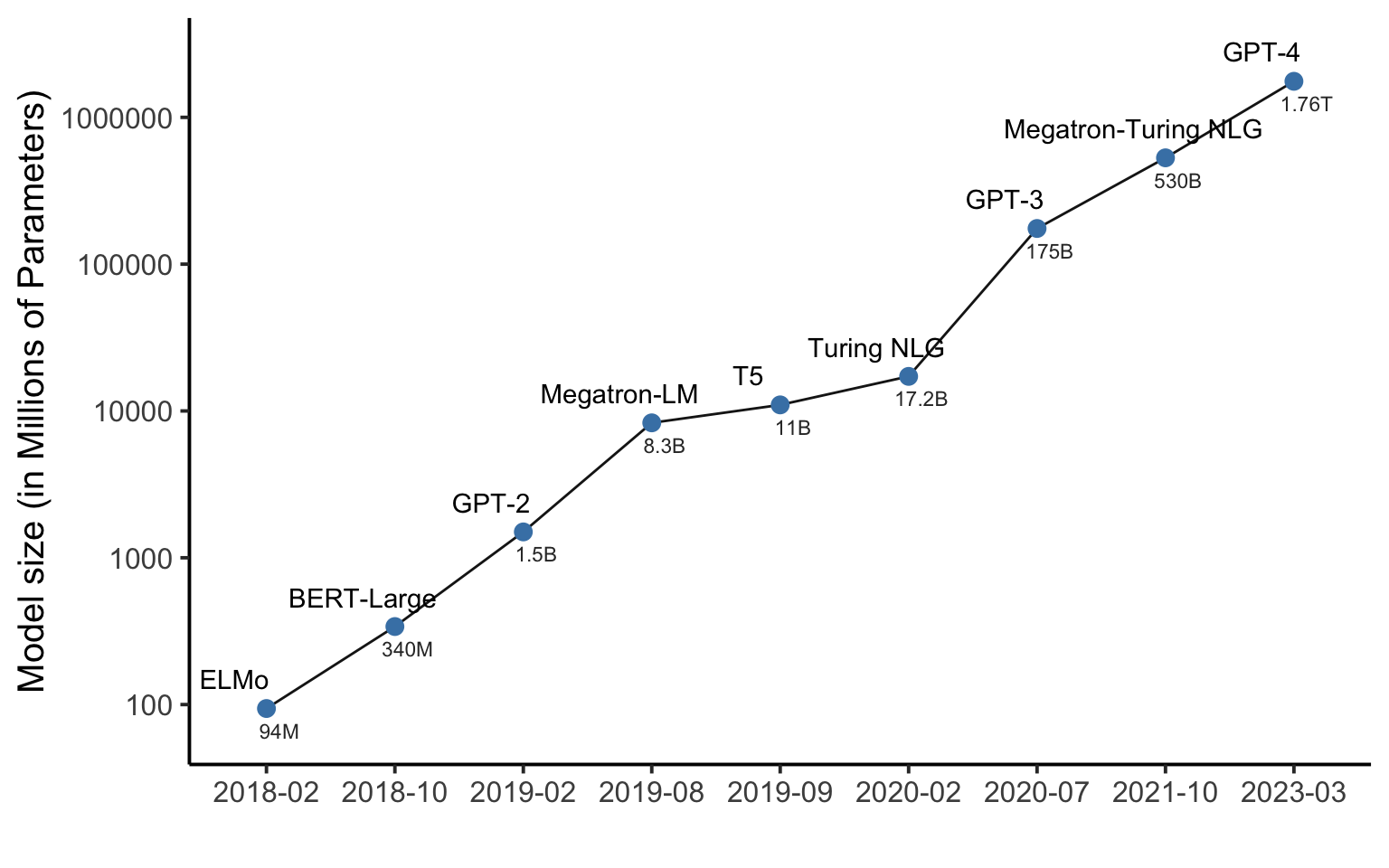

A large language model (LLM) is a type of language model notable for its ability to achieve general-purpose language understanding and generation.

LLMs acquire these abilities by using massive amounts of data to learn billions of parameters during training and consuming large computational resources during their training and operation.

LLMs are still just a type of artificial neural network (mainly transformers!) and are (pre-)trained using self-supervised learning and semi-supervised learning.

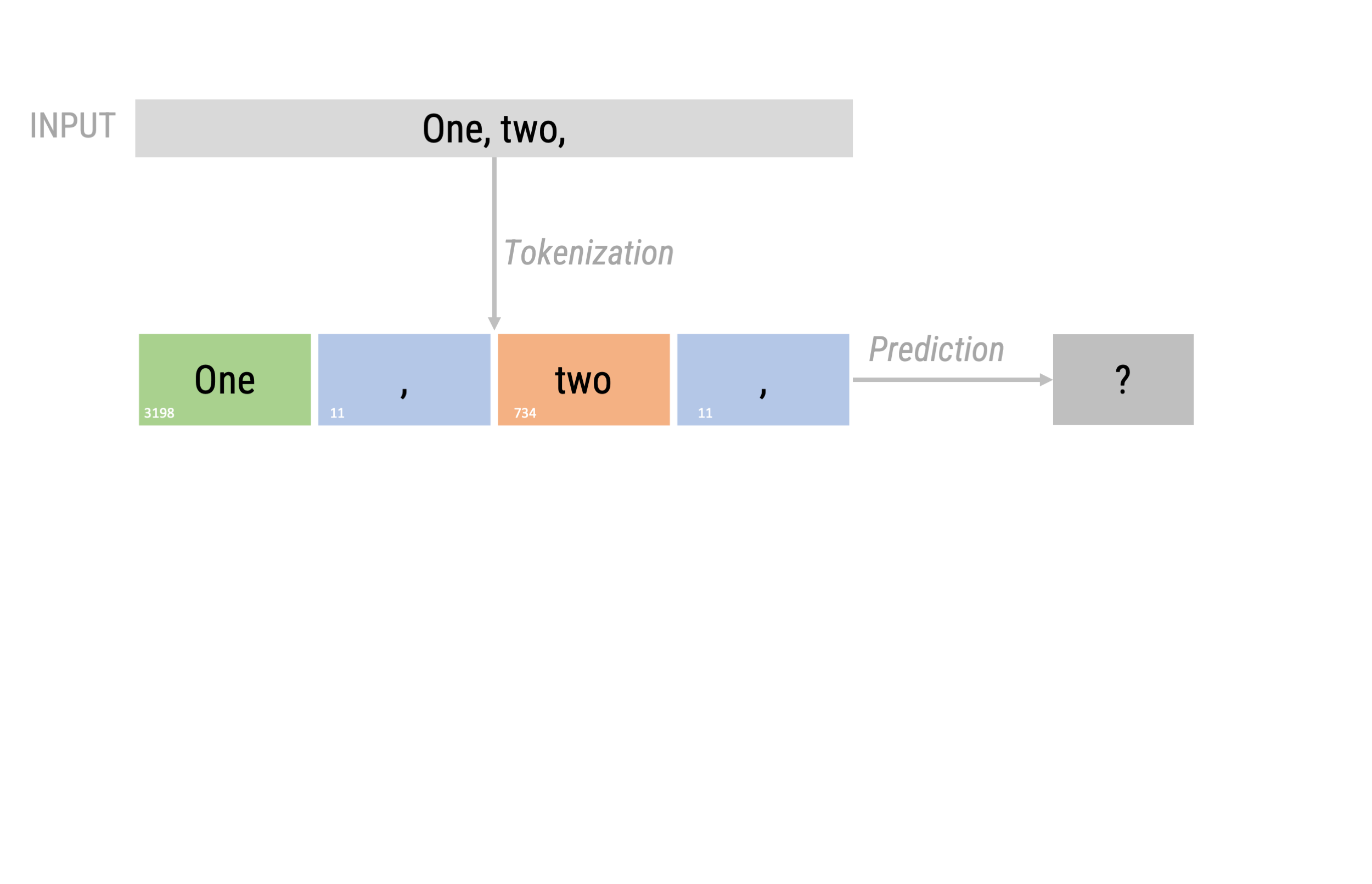

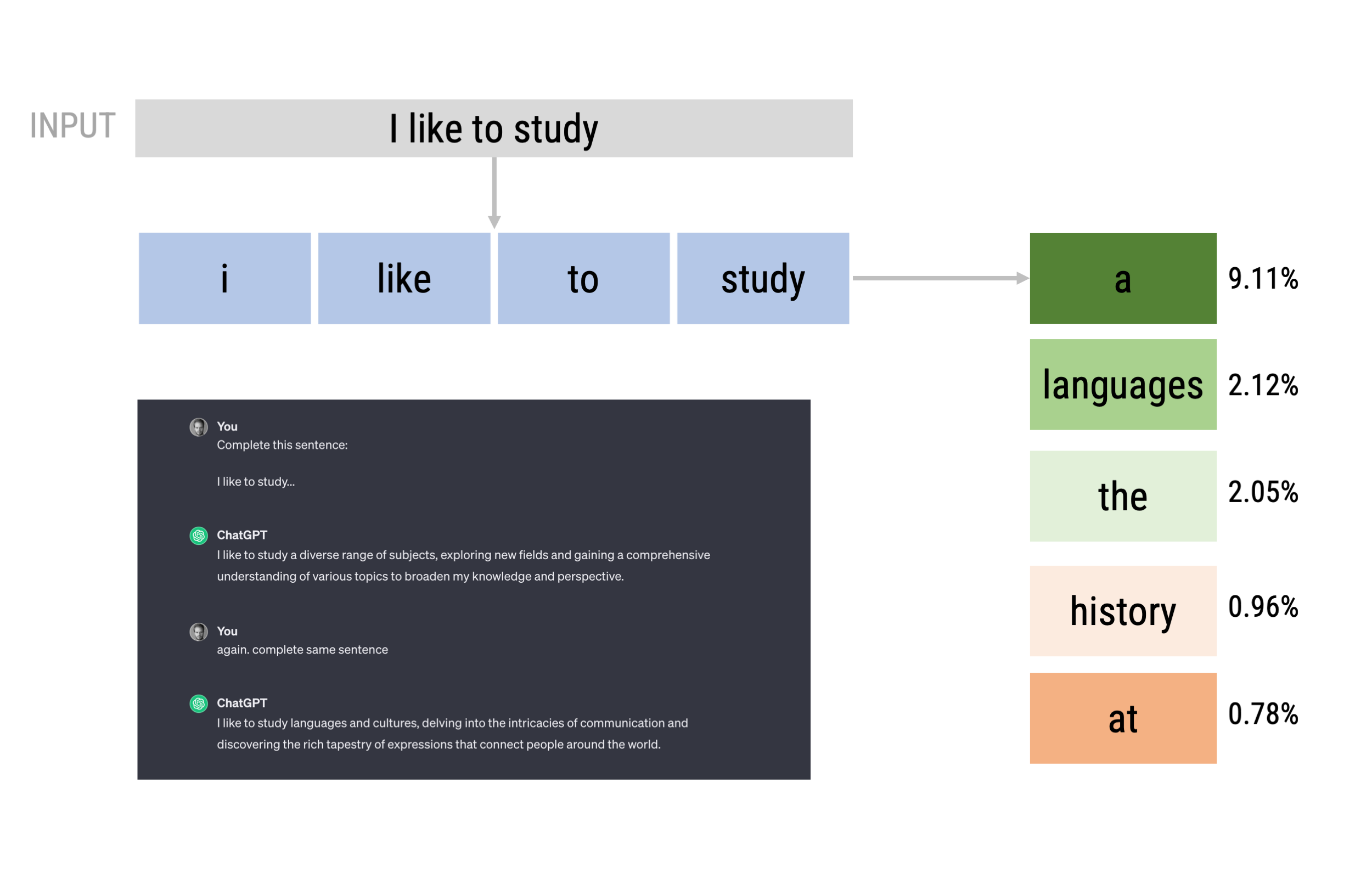

As so-called autoregressive language models, they take an input text and repeatedly predict the next token or word.
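The loop behind this can be sketched with a tiny lookup table. The table, the tokens "<start>" and "<end>", and the function generate() are all hypothetical; a real LLM conditions on the entire preceding sequence, not only on the last token, and usually samples instead of always picking the most likely token.

```r
# Hypothetical next-token probabilities (a tiny lookup table, not a real model)
next_token_probs <- list(
  "<start>" = c(I = 0.9, pizza = 0.1),
  "I"       = c(like = 0.8, "<end>" = 0.2),
  "like"    = c(pizza = 0.7, "<end>" = 0.3),
  "pizza"   = c("<end>" = 0.9, like = 0.1)
)

generate <- function(probs, max_tokens = 10) {
  out <- character(0)
  current <- "<start>"
  for (i in seq_len(max_tokens)) {
    p <- probs[[current]]
    current <- names(p)[which.max(p)]  # greedy: pick the most likely token
    if (current == "<end>") break      # stop at the end symbol
    out <- c(out, current)             # the prediction becomes the next input
  }
  paste(out, collapse = " ")
}

generated <- generate(next_token_probs)
generated
```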

Fine-tuning vs. zero-shot learning

Up to 2020, fine-tuning was the only way a model could be adapted to accomplish specific tasks.

Larger sized models (since BERT and GPT-2 and 3), however, can be prompt-engineered to achieve similar results.

They are thought to acquire embodied knowledge about syntax, semantics and “ontology” inherent in human language corpora, but also inaccuracies and biases present in the corpora.

Notable examples include:

- OpenAI’s GPT models (e.g., GPT-3.5 and GPT-4, used in ChatGPT)

- Google’s BERT and PaLM (used in Bard)

- Meta’s LLaMA

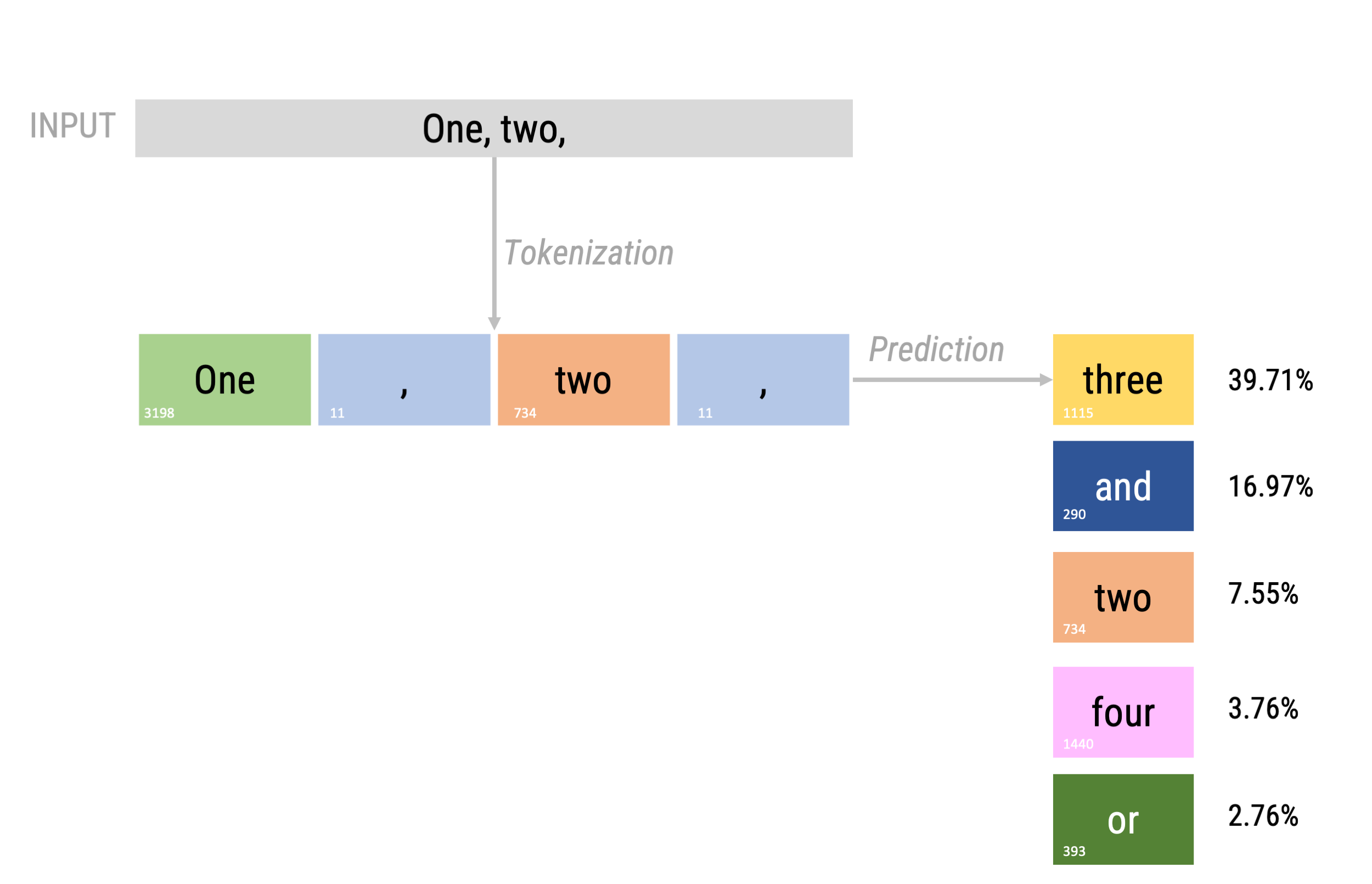

Next token prediction (as in GPT-2)


Different architectures

Encoder-Decoder Transformers:

- BART (Lewis et al., 2019): language generation, translation, comprehension,…

Encoder-Only Transformer:

- BERT (Devlin et al., 2019): Question answering, language inference,…

Decoder-Only Transformer:

- GPT-series (OpenAI)

In the following, we will focus on BERT and GPT

Note: Whereas many large language models are open source and free to use (e.g., BERT), others are free to use only to a limited extent and remain essentially a black box with regard to their architecture, training procedure, and training data (e.g., GPT-3 or GPT-4).

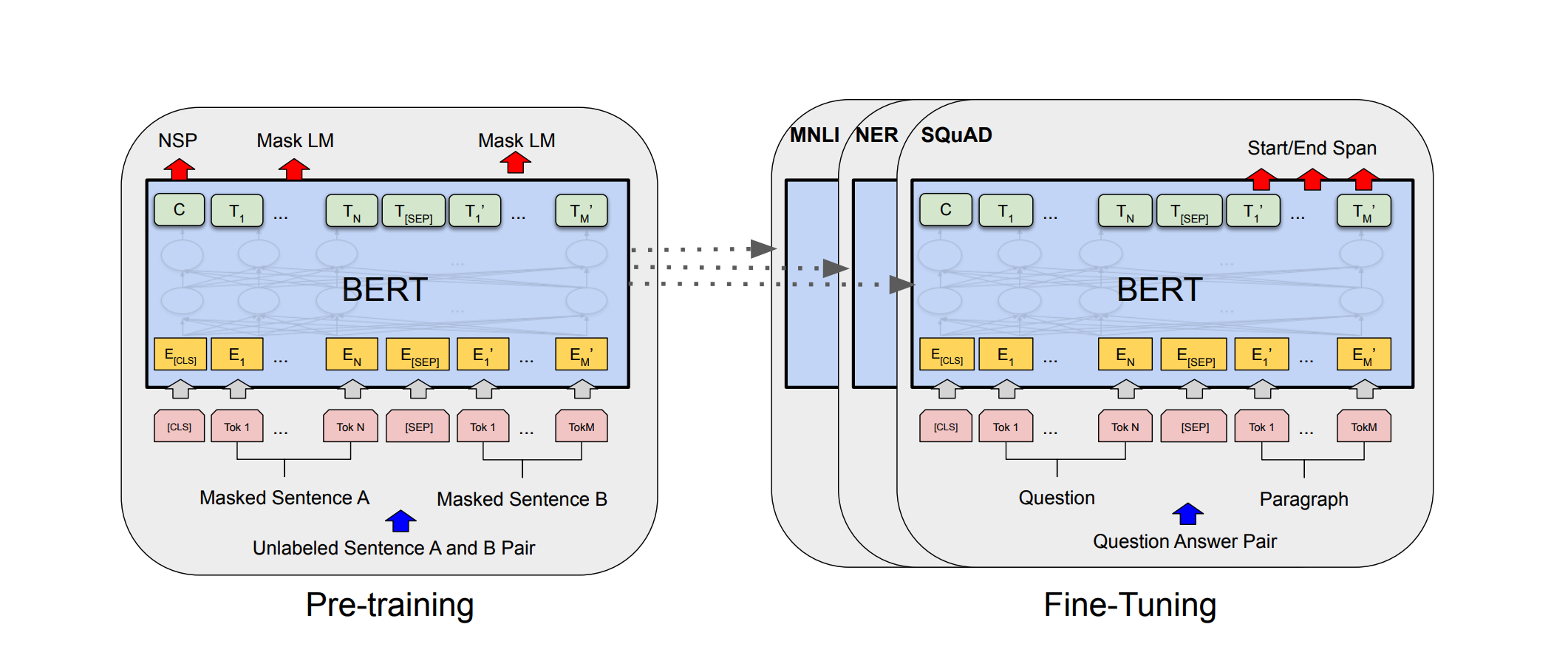

BERT

Bidirectional Encoder Representations from Transformers (BERT) is a family of language models introduced in October 2018 by researchers at Google.

BERT is an “encoder-only” transformer architecture.

Generally speaking, BERT consists of three modules:

- Embedding. This module converts an array of one-hot encoded tokens into an array of vectors representing the tokens.

- Stack of encoders. These encoders are the Transformer encoders (BERT-base = 12, BERT-large = 24). They perform transformations over the array of representation vectors.

- Un-embedding. This module converts the final representation vectors into one-hot encoded tokens again.

Devlin et al. 2018

Why un-embedding?

The un-embedding module is necessary for pretraining, but it is often unnecessary for downstream tasks.

Here, one would take the representation vectors output at the end of the stack of encoders, and use those as a vector representation of the text input, and train a smaller model on top of that (technically anything we covered last lecture!)

Training and Fine-Tuning of BERT

Pretrained on two tasks:

- Masked Language Modelling (MLM): simply mask some percentage of the input tokens at random, and then predict those masked tokens

- Next sentence prediction (NSP): predict whether sentence B actually follows sentence A, to model relationships between sentences
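The masking step of the first task can be sketched in a few lines. The example sentence is invented, and the procedure is simplified: Devlin et al. select about 15% of the tokens, but replace only most of them with the mask symbol (the rest are swapped for a random token or left unchanged).

```r
set.seed(123)

tokens <- c("the", "senate", "passes", "a", "stopgap", "bill",
            "to", "avert", "a", "government", "shutdown")

# Pick roughly 15% of positions at random (the share reported for BERT)
n_mask   <- max(1, round(0.15 * length(tokens)))
mask_pos <- sample(seq_along(tokens), n_mask)

# The model sees the masked input and must recover the hidden targets
masked_input <- replace(tokens, mask_pos, "[MASK]")
targets      <- tokens[mask_pos]

paste(masked_input, collapse = " ")
targets
```

No manual annotation is needed for this task, because the training labels (targets) come from the text itself.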

This pre-training led to great performances in downstream tasks

Source: Devlin et al., 2018

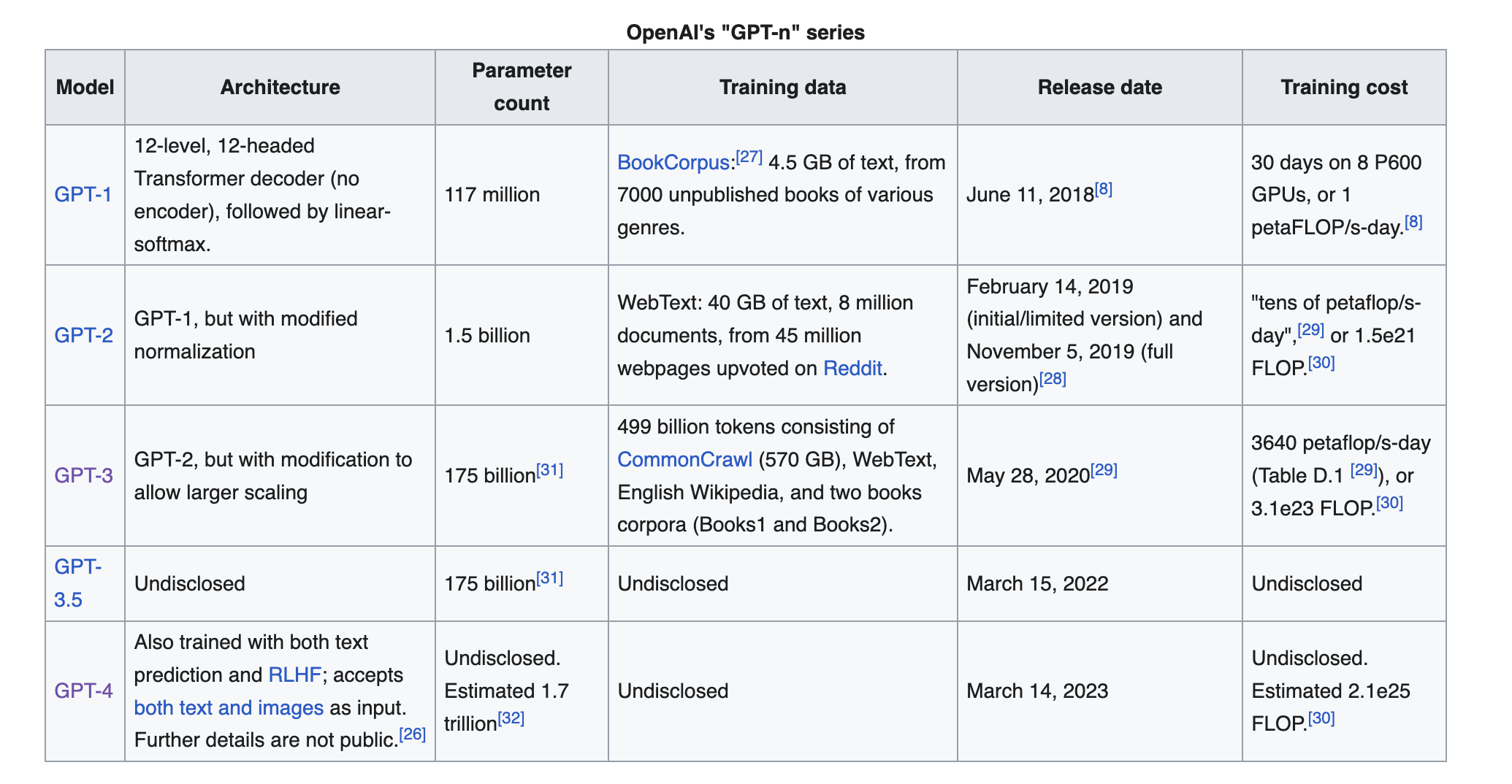

GPT-Series by OpenAI

Generative Pre-trained Transformer (GPT) is a family of state-of-the-art large language models developed by OpenAI.

Particularly GPT-3.5, released publicly in November 2022 together with a chat interface (ChatGPT), attracted a lot of public attention.

Millions of users in a very short amount of time (faster than Facebook, Instagram, TikTok, etc.), now 1.5 billion users


High-level architecture and training of GPT

- GPT models are decoder-only models

Source: Wikipedia

Zero-shot Learning

Zero-shot learning refers to the ability of a model to perform a task or make predictions on a set of classes or concepts that it has never seen or been explicitly trained on.

In other words, the model can generalize its pre-trained knowledge to new, unseen tasks without specific examples or training data for those tasks.

Classic machine learning models (last lecture) are typically trained on a specific set of classes, and their performance is evaluated on the same set of classes during testing.

Zero-shot learning extends this capability by allowing the model to handle tasks or categories that were not part of its training set.

This works, because of the model’s ability to capture and generalize information from the vast and varied data it has been exposed to during training.

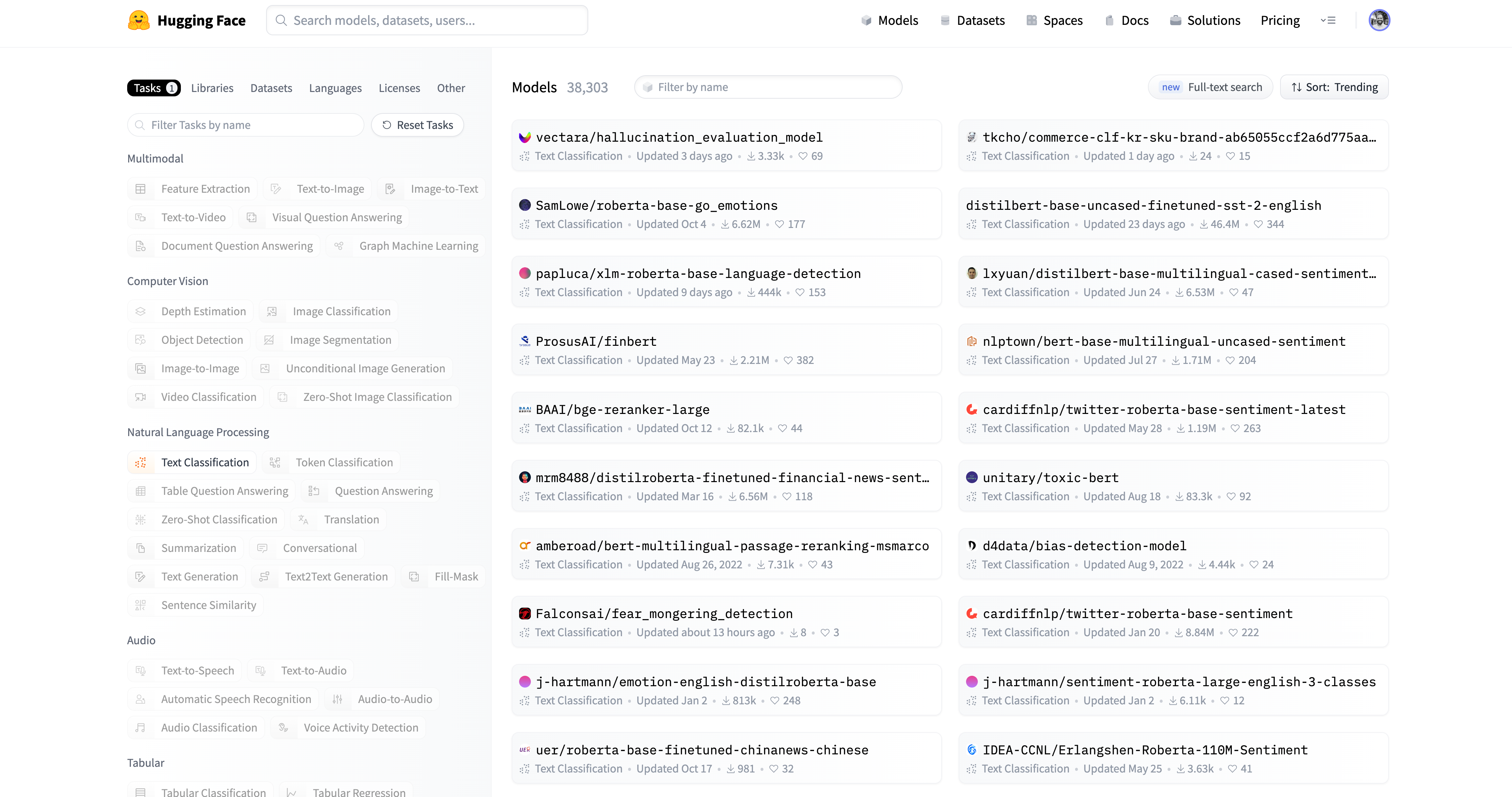

Zero-Shot Text Classification with LLMs

Many LLMs are available at huggingface.co

Workflow

There are generally two ways in which we can work with these models:

- Access the model via the Hugging Face API (only a limited number of requests per minute, but still useful)

- Download and use the model on our own computer/GPU (requires Python, can be computationally intensive)

In this course, we are going to “play around” with BERT models via the Hugging Face API

- We created a set of simple functions that allow you to use different types of models for different purposes

- To use them, it makes sense to create an account on Hugging Face and create an access token (homework!)

For the project phase, I will provide a more elaborate tutorial on how to use such models on your computer (but you don’t have to, if you don’t want to)

- This will require installing Python and using it via R

- Generally a bit more complicated

Using a BERT model to zero-shot topics

library(ccsamsterdamR)

example_corpus <- tibble(

id = c(1:6),

text = c("To be, or not to be: that is the question.",

"An atom is a particle that consists of a nucleus of protons and neutrons.",

"Senate passes stopgap bill to avert government shutdown.",

"S&P 500 ends Friday slightly higher, major averages cruise to third week of gains: Live updates",

"Joe Burrow out for season: League to investigate Bengals over injury, looking whether team violated NFL policy",

"Joe Biden attended the final NFL game together with French president Emmanuel Macron."))

output <- hf_zeroshot(txt = example_corpus$text,

labels = c("Poetry", "Politics", "Physics", "Finance", "Sport" ),

url = "https://api-inference.huggingface.co/models/MoritzLaurer/deberta-v3-large-zeroshot-v1")

results <- output |>

unnest(cols = c("labels", "scores")) %>%

as_tibble %>%

spread(labels, scores)

results

# A tibble: 6 × 6

sequence Finance Physics Poetry Politics Sport

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 An atom is a particle that consists of… 0.0003 0.999 0.0003 0.0003 0.0003

2 Joe Biden attended the final NFL game … 0.0003 0.0002 0.0002 0.116 0.884

3 Joe Burrow out for season: League to i… 0 0 0 0 1.00

4 S&P 500 ends Friday slightly higher, m… 1.00 0.0001 0.0001 0.0001 0.0001

5 Senate passes stopgap bill to avert go… 0.0003 0.0001 0.0001 0.999 0.0001

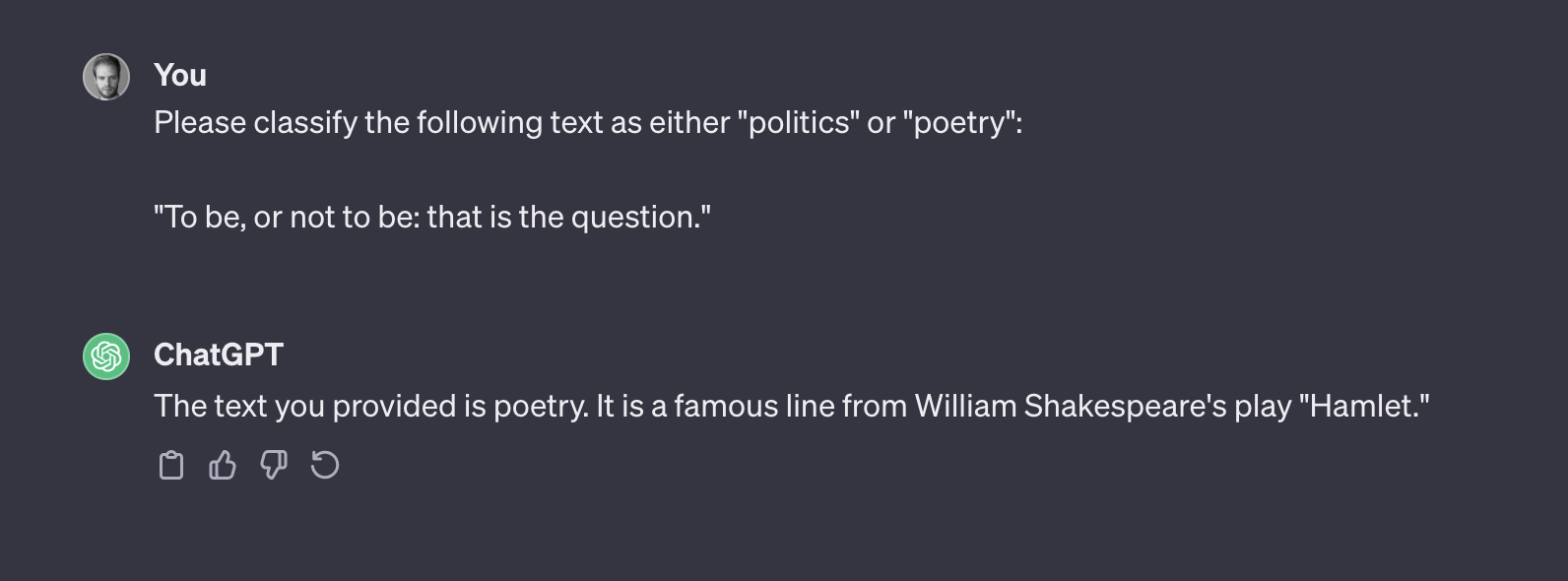

6 To be, or not to be: that is the quest… 0.0625 0.101 0.687 0.0829 0.0665Using GPT for text classification

- The simplest way to use GPT is to simply paste text into the chat and ask for the relevant topics (in fact, let’s try this now!)

Yet, for larger text corpora this is not feasible

To use GPT for text analysis purposes, we need to access the models via the OpenAI API

- We created functions that allow you to use the GPT models similarly to how we used the Hugging Face models

- This requires paying per token (luckily not too expensive, we will provide some accounts)

Using GPT-3.5-turbo for a simple topic classification

We simply pass the corpus to the function gpt_zeroshot(), which is included in the package ccsamsterdamR.

We further pass a vector of labels that we want GPT to classify the text with.

# A tibble: 6 × 4

id text labels justification

<dbl> <chr> <chr> <chr>

1 1 To be, or not to be: that is the question. Poetry Contains famous line from Shakespeare's Hamlet.

2 2 An atom is a particle that consists of a nucleus of protons and neutrons. Physics Describes basic concept of an atom.

3 3 Senate passes stopgap bill to avert government shutdown. Politics Refers to a specific legislative action in the Senate.

4 4 S&P 500 ends Friday slightly higher, major averages cruise to third week of gains: Live updates Finance Covers stock market performance and S&P 500.

5 5 Joe Burrow out for season: League to investigate Bengals over injury, looking whether team violated NFL policy Sport Reports on injury of football player Joe Burrow.

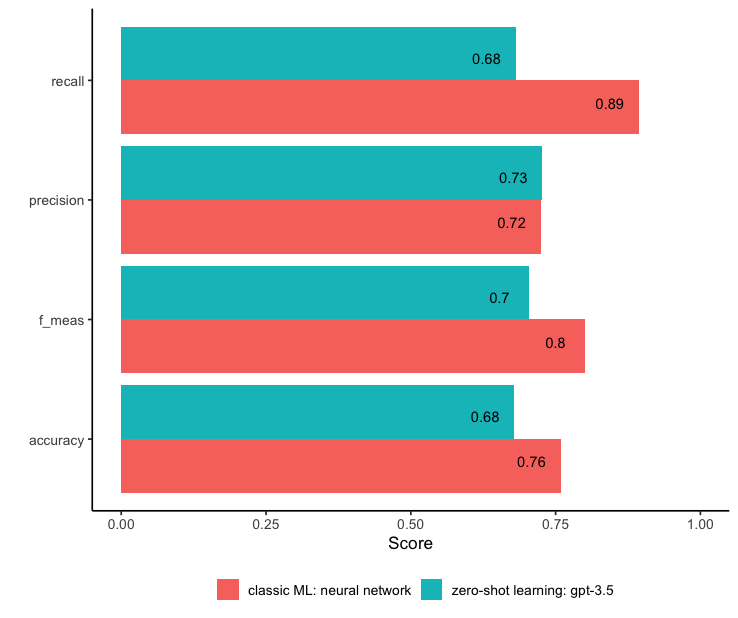

6 6 Joe Biden attended the final NFL game together with French president Emmanuel Macron. Politics Describes diplomatic engagement between Joe Biden and Emmanuel Macron at NFL game.Comparison with classic machine learning

Let’s compare the zero-shot performance of GPT-3.5 against our best neural network classifier from last week in predicting genre from music lyrics.

To make things easier, we only use 87 songs from the lyrics data set.

library(tidyverse)

library(textrecipes)

library(tidymodels)

# Set seed

set.seed(42)

# Read data

lyrics_data <- read_csv("data/lyrics-data-prep.csv") |>

mutate(binary_genre = factor(ifelse(Genre =="Rock","rock", "other"),

levels = c("rock", "other") )) |>

sample_frac(size = .001) |>

select(doc_id = SLink, Artist, Song = SName,

Genre, binary_genre, text = Lyric)

head(lyrics_data)

# A tibble: 6 × 6

doc_id Artist Song Genre binary_genre text

<chr> <chr> <chr> <chr> <fct> <chr>

1 /snoop-dogg/213-tha-gangsta-clicc.html Snoop Dogg 213 Tha Gangsta Clicc Hip … other "[Sn…

2 /far-east-movement/jello-feat-rye-rye.html Far East Movement Jello (feat. Rye Rye) Pop other "Jel…

3 /janet-jackson/got-till-its-gone.html Janet Jackson Got 'til It's Gone (feat. Q-Tip… Pop other "Wha…

4 /tori-amos/yo-george.html Tori Amos Yo George Rock rock "I s…

5 /van-morrison/a-sense-of-wonder.html Van Morrison A Sense of Wonder Rock rock "I w…

6 /heart/together-now.html Heart Together Now Rock rock "Dee…

Predict genre with the neural network classifier

Because I saved the classifier from last week, I can now simply use it to predict the genre in the new subset

Remember, it was a multilayer perceptron with 1 hidden layer (6 nodes), trained on 10,445 songs.

# Setting relevant metrics

class_metrics = metric_set(accuracy, precision, recall, f_meas)

# Loading the model from last week

load("results/m_ann.Rdata")

# Predicting the new subset of the sample (the 87 songs!)

predict_ann <- predict(m_ann, new_data=lyrics_data) |>

bind_cols(select(lyrics_data, binary_genre)) |>

rename(predicted=.pred_class, actual=binary_genre)

Predicting genre with GPT-3.5

To predict the data with GPT (I am choosing the GPT-3.5 Turbo version here), we don’t have to engage in elaborate preprocessing. We simply create an id-variable (helps to later combine predictions and actual gold standard).

However, the GPT API allows only a certain number of tokens per minute (~10,000) and also only a certain number of tokens per prompt (for this model at least 32,000)

This requires us to get creative and do a few things:

- split the sample in smaller chunks so that we can prompt one after the other

- map across those chunks and capture prediction in each step

- add a delay (Sys.sleep(10) = 10 seconds break) in between prompts


- First, we have to create an ID variable and split the data into small chunks:
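The code for this step is not preserved in this text, so the following is only a sketch of one way to do it in base R. The toy data frame, the name lyrics_toy, and the chunk size are assumptions; the format that gpt_zeroshot() expects for each chunk is also not shown here, so the chunks may need to be adapted.

```r
# Toy stand-in for lyrics_data (invented rows, for illustration only)
lyrics_toy <- data.frame(
  text = paste("lyrics of song", 1:11),
  stringsAsFactors = FALSE
)

# Add an id variable so predictions can be matched to the gold standard later
lyrics_toy$id <- seq_len(nrow(lyrics_toy))

# Split into chunks of at most `chunk_size` rows
chunk_size  <- 4
chunk_index <- ceiling(lyrics_toy$id / chunk_size)
splits <- split(lyrics_toy, chunk_index)

length(splits)        # number of chunks
sapply(splits, nrow)  # rows per chunk
```

Smaller chunks keep each prompt below the per-prompt token limit, at the price of more API calls and therefore more waiting time between them.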

# Actual prompting via the API

map_results <- map_df(splits, function(x) {

output <- gpt_zeroshot(

txt = x,

expertise = "You are an expert in classifying the genre of a song based on its lyrics.",

labels = c("rock", "other"),

model = "gpt-3.5-turbo-1106")

Sys.sleep(10) # Adding 10 seconds delay between prompts to avoid token-per-minute rate limit

output

})

- Finally, we can join the resulting predictions with the original data:
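The join itself is not preserved in this text either. As a sketch with invented toy data: the column names id, labels, and binary_genre follow the output shown earlier and the comparison code further down, but the object names gold, predictions, and predict_toy are hypothetical.

```r
# Toy stand-ins (invented values): gold standard and model output
gold <- data.frame(
  id = 1:4,
  binary_genre = factor(c("rock", "other", "rock", "other"),
                        levels = c("rock", "other"))
)
predictions <- data.frame(
  id = c(3, 1, 4, 2),
  labels = c("rock", "rock", "other", "rock")
)

# Join on the id and give the predictions the same factor levels as the
# truth, which yardstick-style metric functions expect
predict_toy <- merge(gold, predictions, by = "id")
predict_toy$labels <- factor(predict_toy$labels,
                             levels = levels(predict_toy$binary_genre))

predict_toy

# Share of correct predictions (accuracy)
toy_accuracy <- mean(predict_toy$labels == predict_toy$binary_genre)
toy_accuracy
```

Joining on the id rather than relying on row order matters here, because the chunked API calls do not guarantee that predictions come back in the original order.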

Comparison between neural network and GPT-3.5

We can see that the neural network does better overall, but bear in mind that it was trained on a lot of data (10,445 songs!)

GPT-3.5 yields a quite astonishing performance, given that it was not trained to do this task at all!

bind_rows(
  predict_ann |>
    class_metrics(truth = actual, estimate = predicted) |>
    mutate(approach = "classic ML: neural network"),
  predict_gpt |>
    class_metrics(truth = binary_genre, estimate = labels) |>
    mutate(approach = "zero-shot learning: gpt-3.5")
) |>
  ggplot(aes(x = .metric, y = .estimate, fill = approach)) +
  geom_col(position = position_dodge()) +
  geom_text(aes(y = .estimate - .05, label = round(.estimate, 2)),
            position = position_dodge(width = .75)) +
  ylim(0, 1) +
  coord_flip() +
  theme(legend.position = "bottom") +
  labs(x = "", y = "Score", fill = "")

Examples in the literature

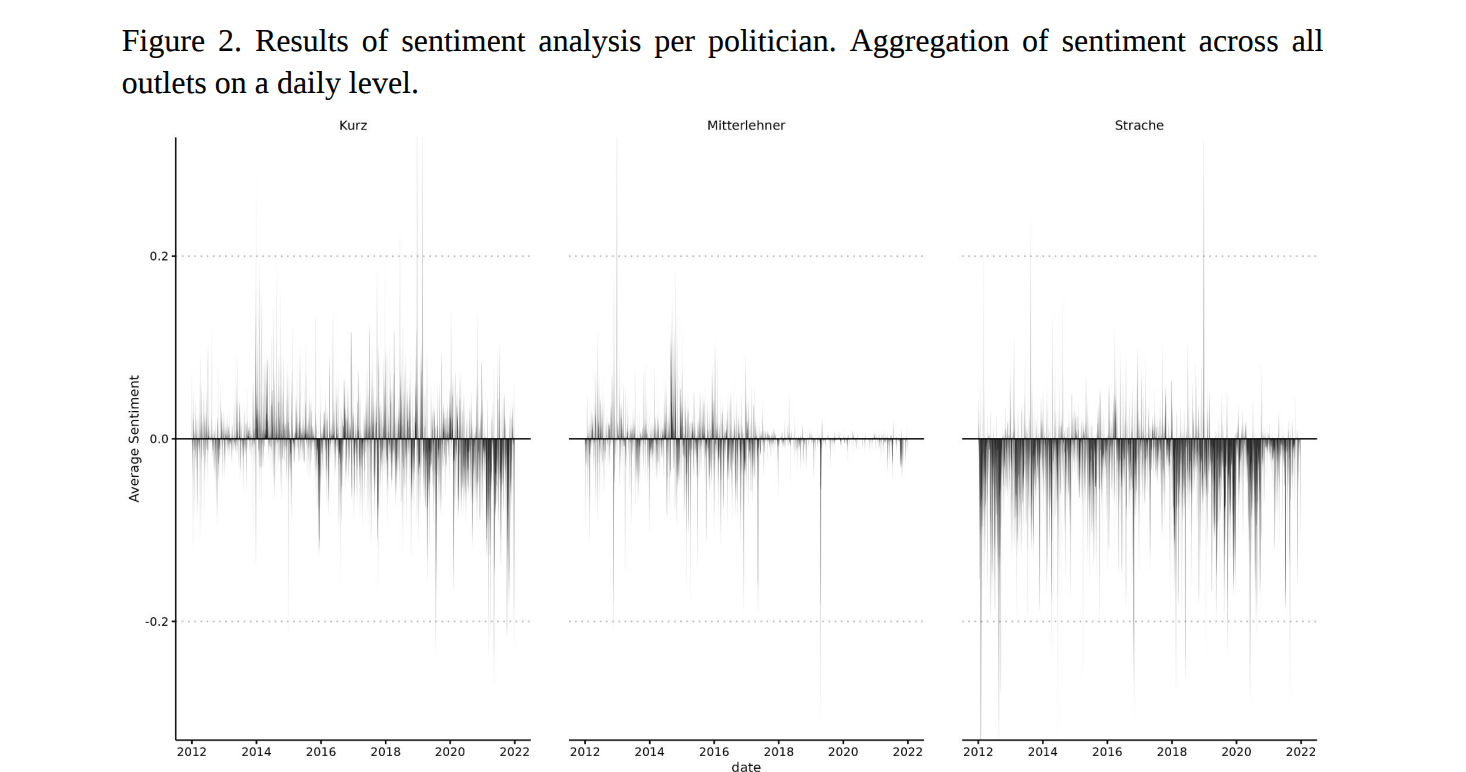

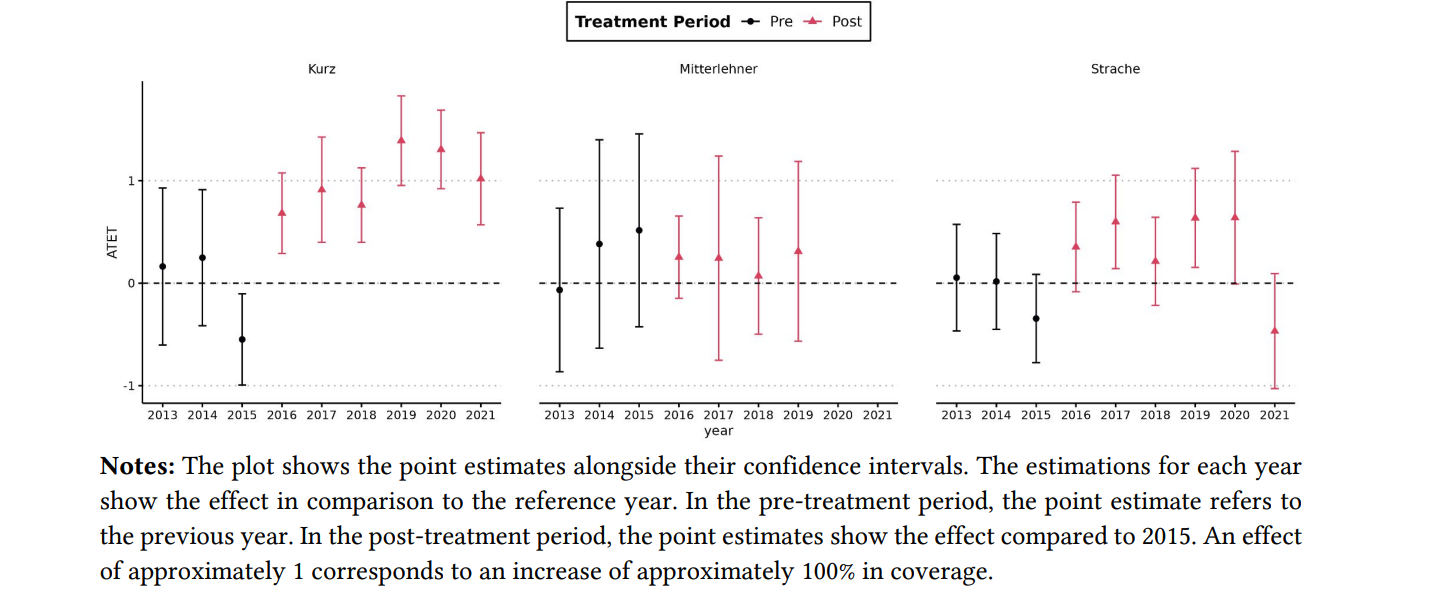

Balluff et al. (2023) investigated a recent case of media capture, a mutually corrupting relationship between political actors and media organizations.

This case involves the former Austrian chancellor, who allegedly colluded with a tabloid newspaper to receive better news coverage in exchange for increased ad placements by government institutions.

They implemented automated content analysis (using BERT) of political news articles from six prominent Austrian news outlets spanning 2012 to 2021 (n = 188,203) and adopted a difference-in-differences approach to scrutinize political actors’ visibility and favorability in news coverage for patterns indicative of the alleged serious breach of professional political and journalistic norms.

Methods

They used a German-language GottBERT model (Scheible et al., 2020) that they further fine-tuned for the task using publicly available data from the AUTNES Manual Content Analysis of the Media Coverage 2017 and 2019 (Galyga et al., 2022; Litvyak et al., 2022c).

The task was comparatively difficult, but the model reached a satisfactory F1 score of 0.77 (precision = 0.77, recall = 0.77).
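For reference, F1 is the harmonic mean of precision and recall, so equal precision and recall yield an F1 of the same value. A short sketch with a hypothetical helper (f1_score is not taken from the paper or from any package):

```r
# Hypothetical helper: harmonic mean of precision and recall
f1_score <- function(precision, recall) {
  2 * precision * recall / (precision + recall)
}

f1_score(0.77, 0.77)  # equal precision and recall give the same F1
f1_score(0.90, 0.50)  # F1 is pulled toward the lower of the two values
```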

Findings

The findings indicate a substantial increase in the news coverage of the former Austrian chancellor within the news outlet that is alleged to have received bribes.

In contrast, several other political actors did not experience similar shifts in visibility, nor were similar patterns identified in other media outlets.

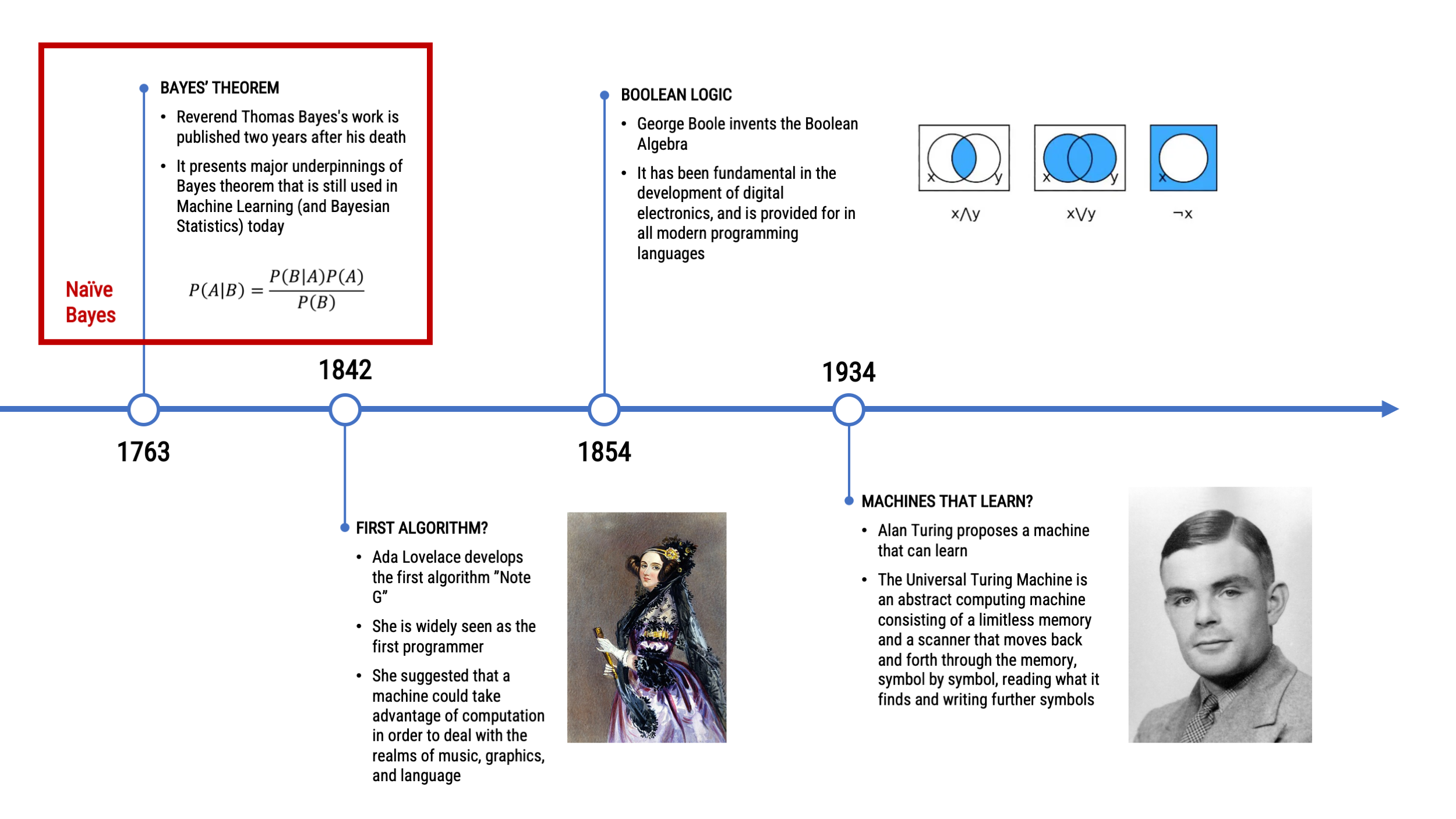

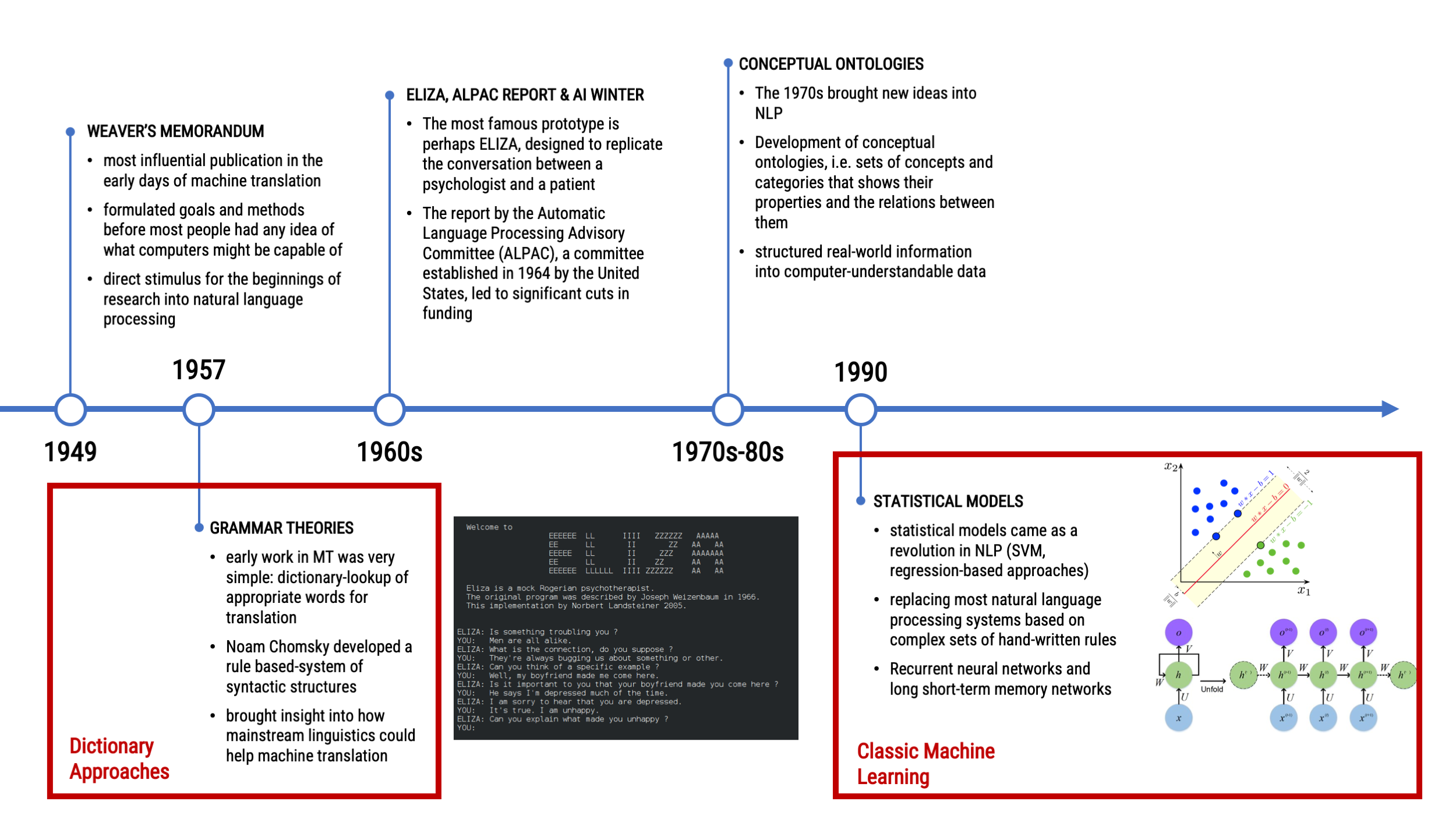

Summary and conclusion

A Look Back at the Chronology of NLP


Explosion in model size?

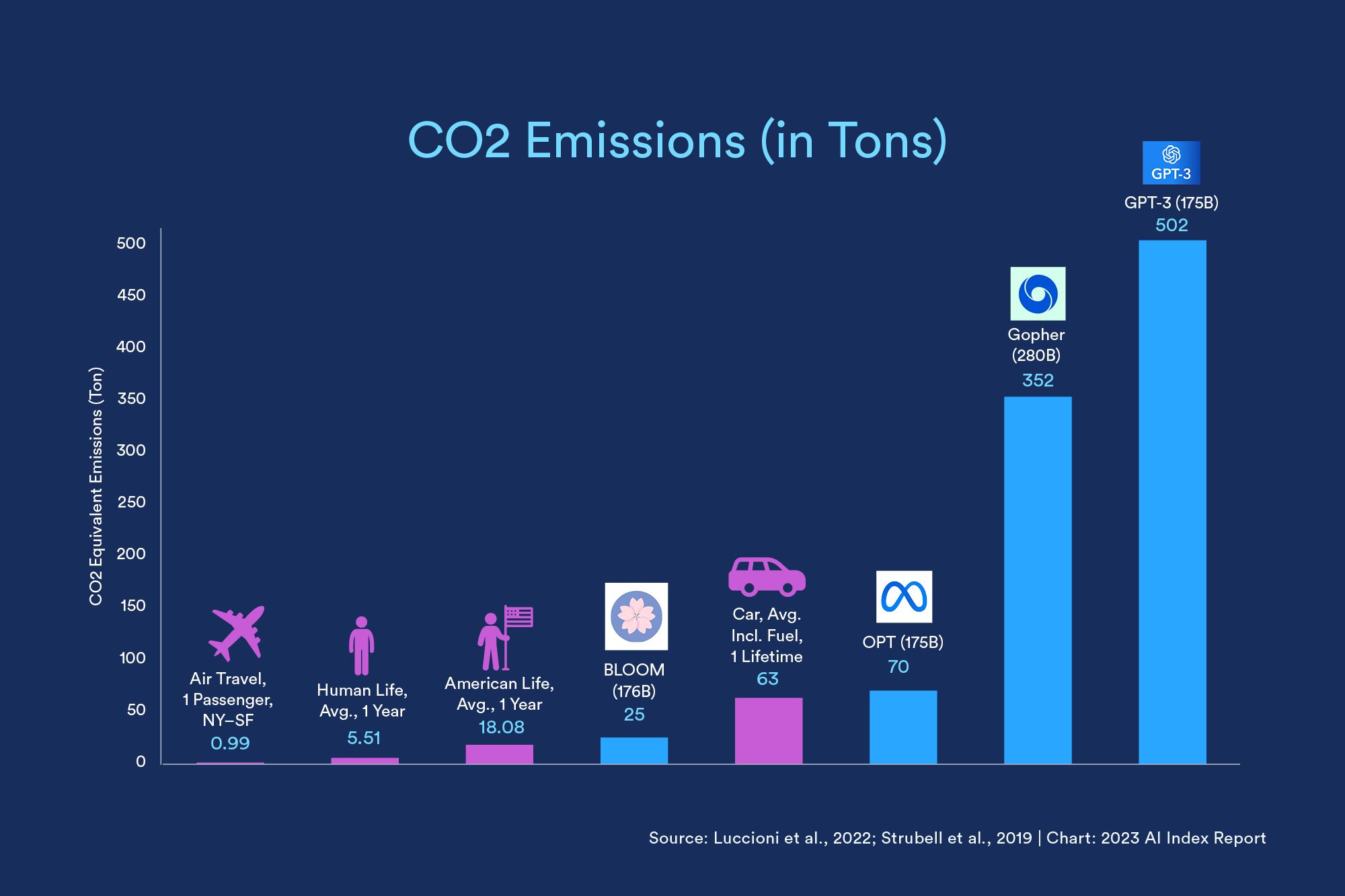

Environmental Impact

Ethical considerations

Training large language models requires significant computational resources, contributing to a substantial carbon footprint. Ethical considerations involve assessing the environmental impact of developing and deploying such models.

LLMs can inherit and perpetuate biases present in their training data.

This can result in the generation of biased or unfair content, reflecting and potentially amplifying societal biases and stereotypes.

Developers and users must be aware of the potential for bias and take steps to mitigate it during model training and deployment.

The fact that some LLMs are developed, trained, and employed behind closed doors causes yet another ethical dilemma in using them!

Conclusion

Advancements in NLP and AI are fast-paced and difficult to keep up with

LLMs hold immense potential for communication research

Yet, large language models can contain biases or even hallucinate!

- Validation, validation, validation!

Thank you for your attention!

Required Reading

Kroon, A., Welbers, K., Trilling, D., & van Atteveldt, W. (2023). Advancing Automated Content Analysis for a New Era of Media Effects Research: The Key Role of Transfer Learning. Communication Methods and Measures, 1-21

(available on Canvas)

References

Alammar, J. (2018). The illustrated Transformer. Retrieved from: https://jalammar.github.io/illustrated-transformer/

Andrich, A., Bachl, M., & Domahidi, E. (2023). Goodbye, Gender Stereotypes? Trait Attributions to Politicians in 11 Years of News Coverage. Journalism & Mass Communication Quarterly, 100(3), 473-497. https://doi.org/10.1177/10776990221142248

Balluff, P., Eberl, J., Oberhänsli, S. J., Bernhard, J., Boomgaarden, H. G., Fahr, A., & Huber, M. (2023, September 15). The Austrian Political Advertisement Scandal: Searching for Patterns of “Journalism for Sale”. https://doi.org/10.31235/osf.io/m5qx4

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

Kroon, A., Welbers, K., Trilling, D., & van Atteveldt, W. (2023). Advancing Automated Content Analysis for a New Era of Media Effects Research: The Key Role of Transfer Learning. Communication Methods and Measures, 1-21.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

Example Exam Question (Multiple Choice)

How are word embeddings learned?

A. By assigning random numerical values to each word

B. By analyzing the pronunciation of words

C. By scanning the context of each word in a large corpus of documents

D. By counting the frequency of words in a given text


Example Exam Question (Open Format)

What does zero-shot learning refer to in the context of large language models?

In the context of large language models, zero-shot learning refers to the ability of a model to perform a task or make predictions on a set of classes or concepts that it has never seen or been explicitly trained on. Essentially, the model can generalize its knowledge to new, unseen tasks without specific examples or training data for those tasks.

In traditional machine learning, models are typically trained on a specific set of classes, and their performance is evaluated on the same set of classes during testing. Zero-shot learning extends this capability by allowing the model to handle tasks or categories that were not part of its training set.

In the case of large language models like GPT-3, which is trained on a diverse range of internet text, zero-shot learning means the model can understand and generate relevant responses for queries or prompts related to concepts it hasn’t been explicitly trained on. This is achieved through the model’s ability to capture and generalize information from the vast and varied data it has been exposed to during training.

Computational Analysis of Digital Communication

Andrich et al. (2023) examine stereotypical traits in portrayals of 1,095 U.S. politicians.
