# Get data

science_data <- read_csv("data/science.csv") |> filter(label != "biology" & label != "finance" & label != "mathematics")

# Create test and train data sets

split <- initial_split(science_data, prop = .50)

# Feature engineering

rec <- recipe(sentiment ~ lemmata, data = science_data) |>

step_tokenize(lemmata) |>

step_tf(all_predictors()) |>

step_normalize(all_predictors())

# Setup algorithm/model

mlp_spec <- mlp(epochs = 600, hidden_units = c(6),

penalty = 0.01, learn_rate = 0.2) |>

set_engine("brulee") |>

set_mode("classification")

# Create workflow

mlp_workflow <- workflow() |>

add_recipe(rec) |>

add_model(mlp_spec)

# Fit model

m_mlp <- fit(mlp_workflow, data = training(split))

Transformers and Large Language Models

Week 4: BERT, Llama, GPT, and Co

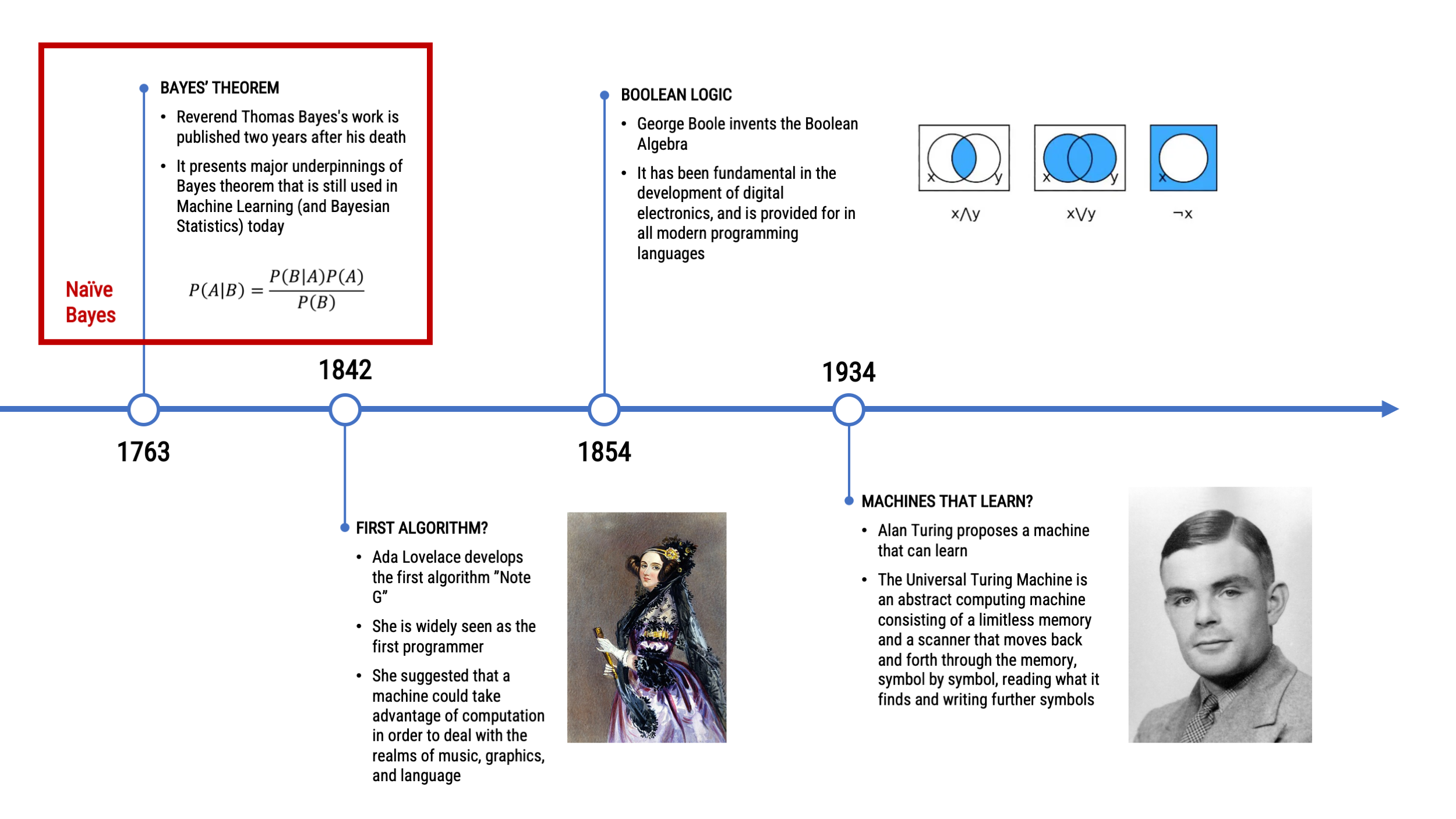

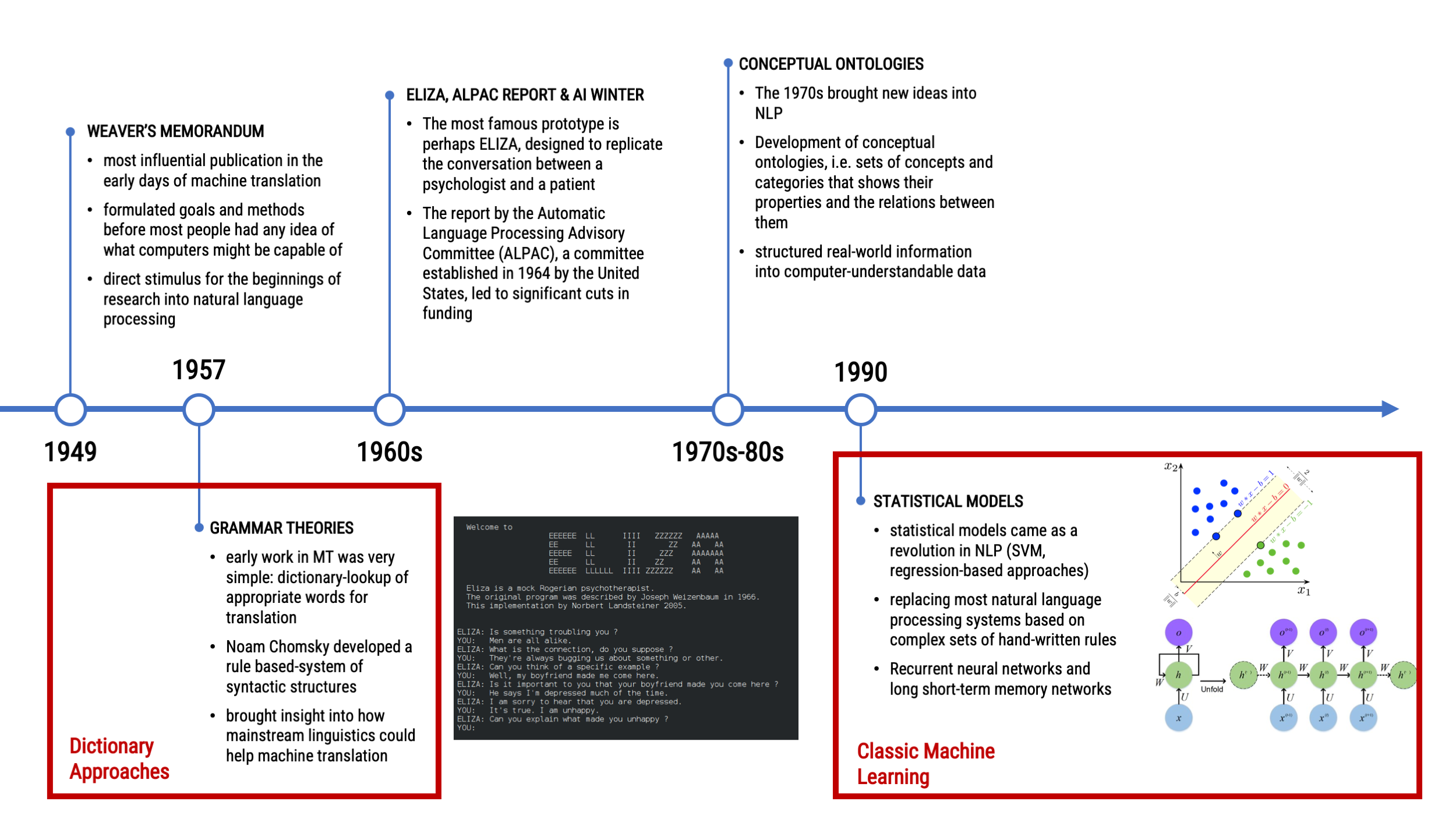

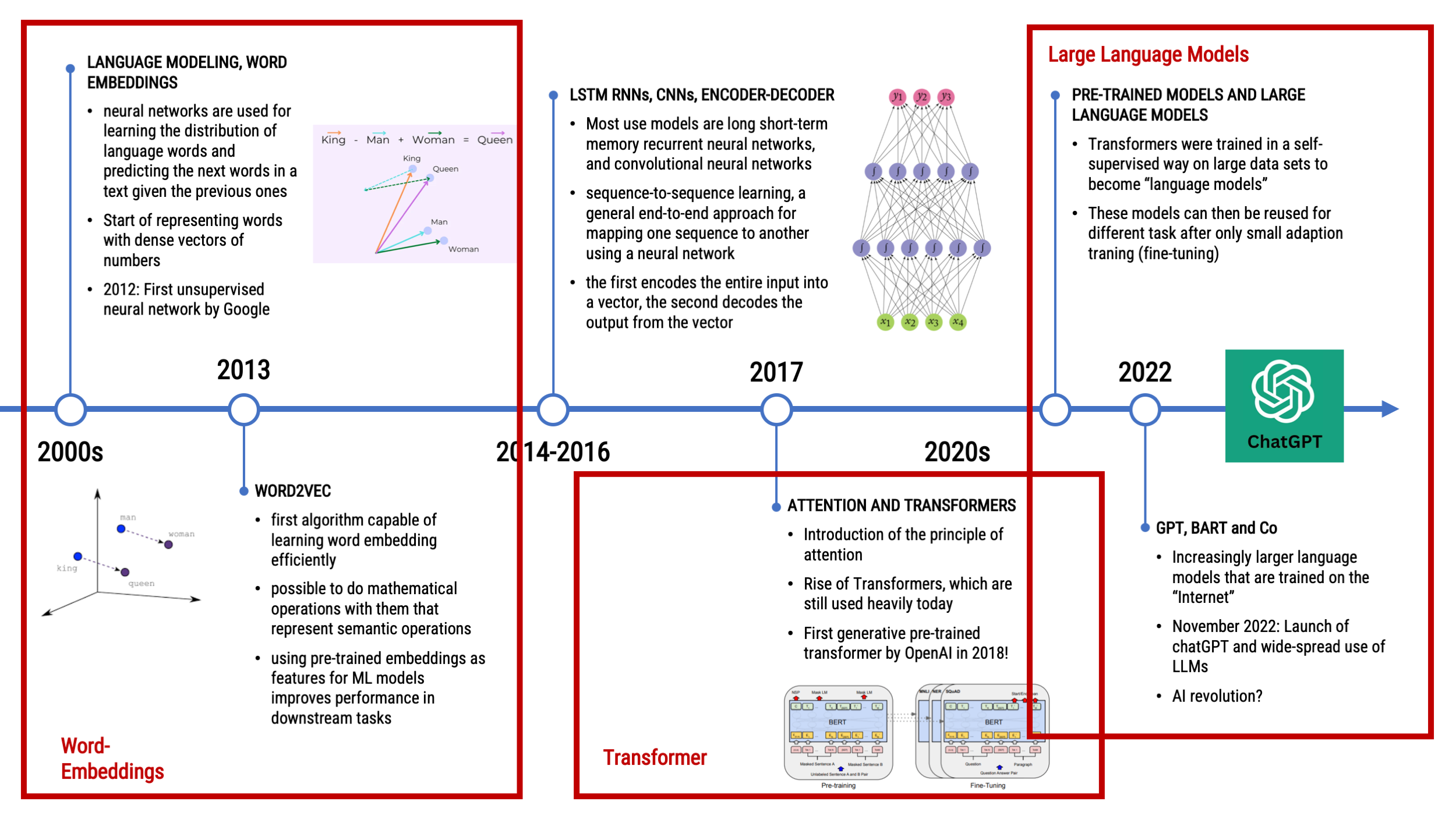

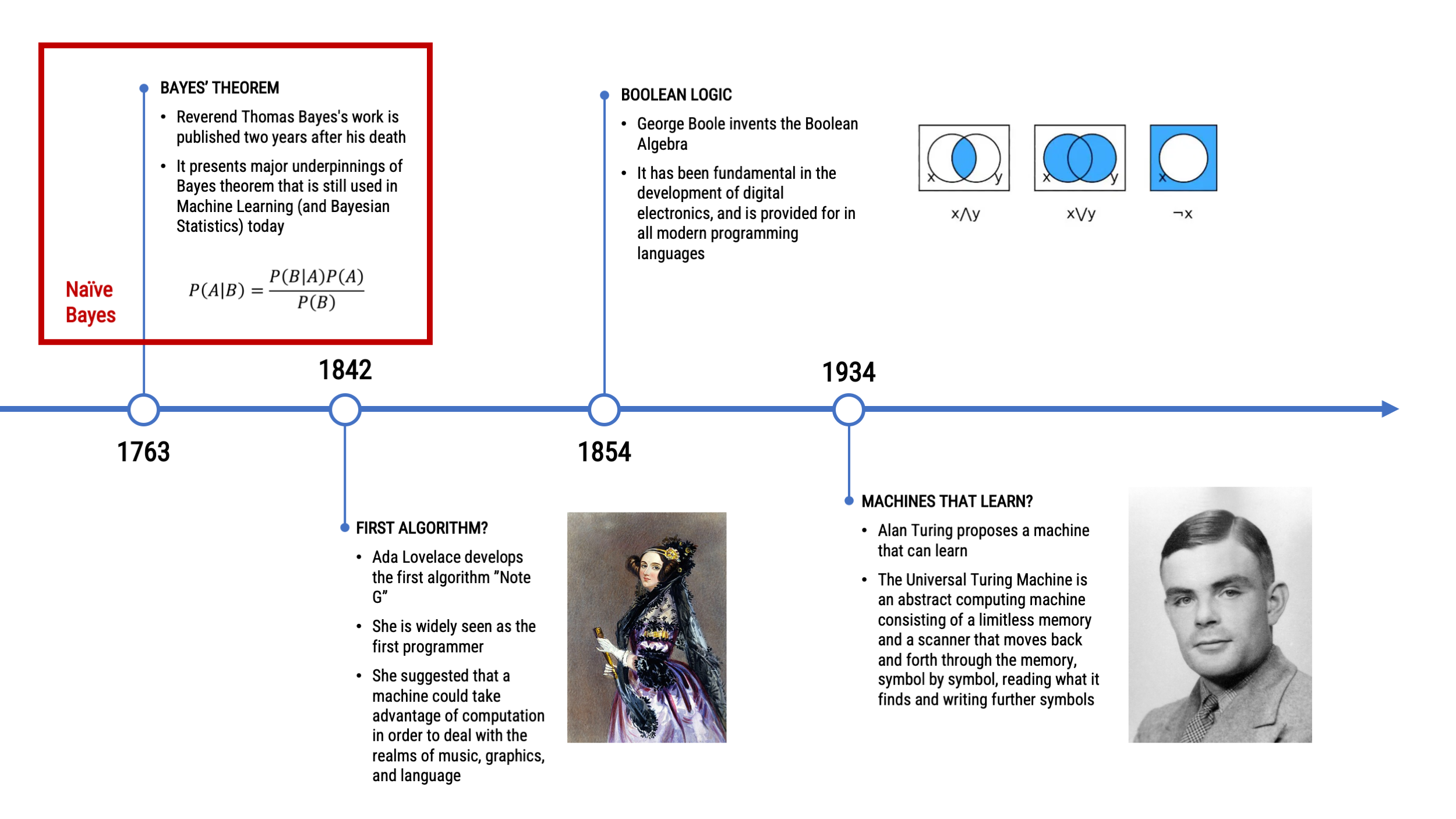

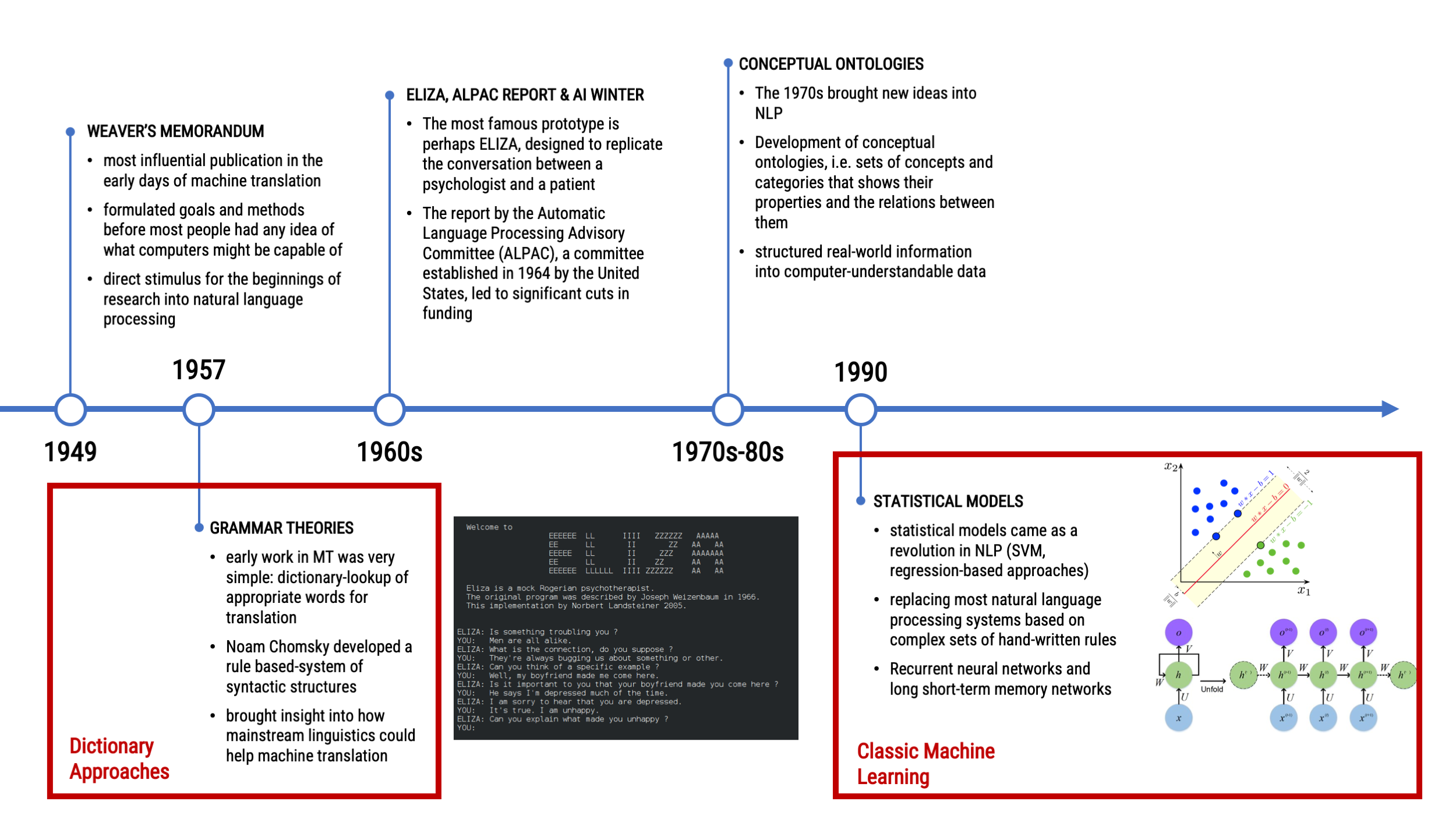

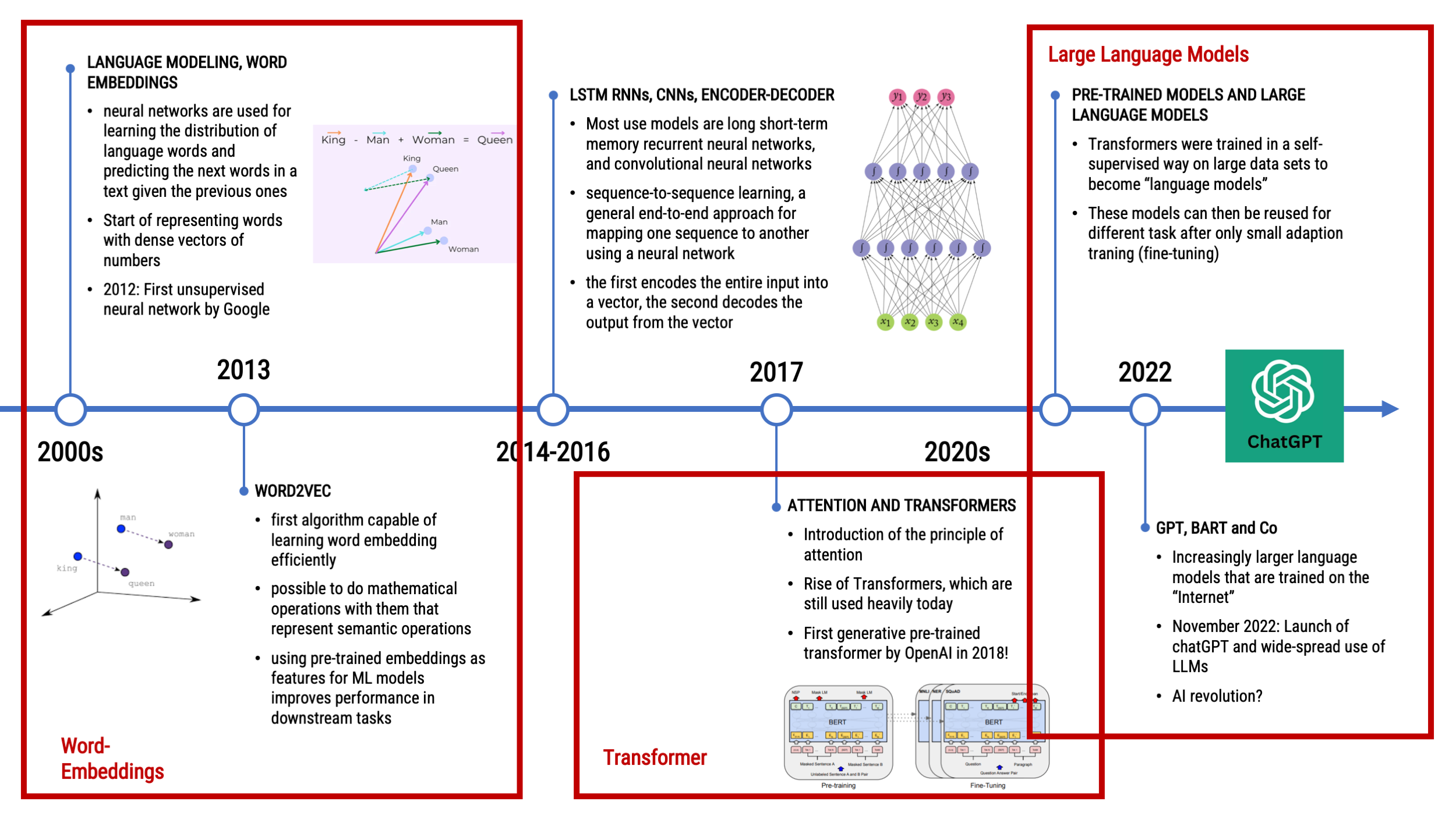

A Look Back at the Chronology of NLP


What we focus on today…

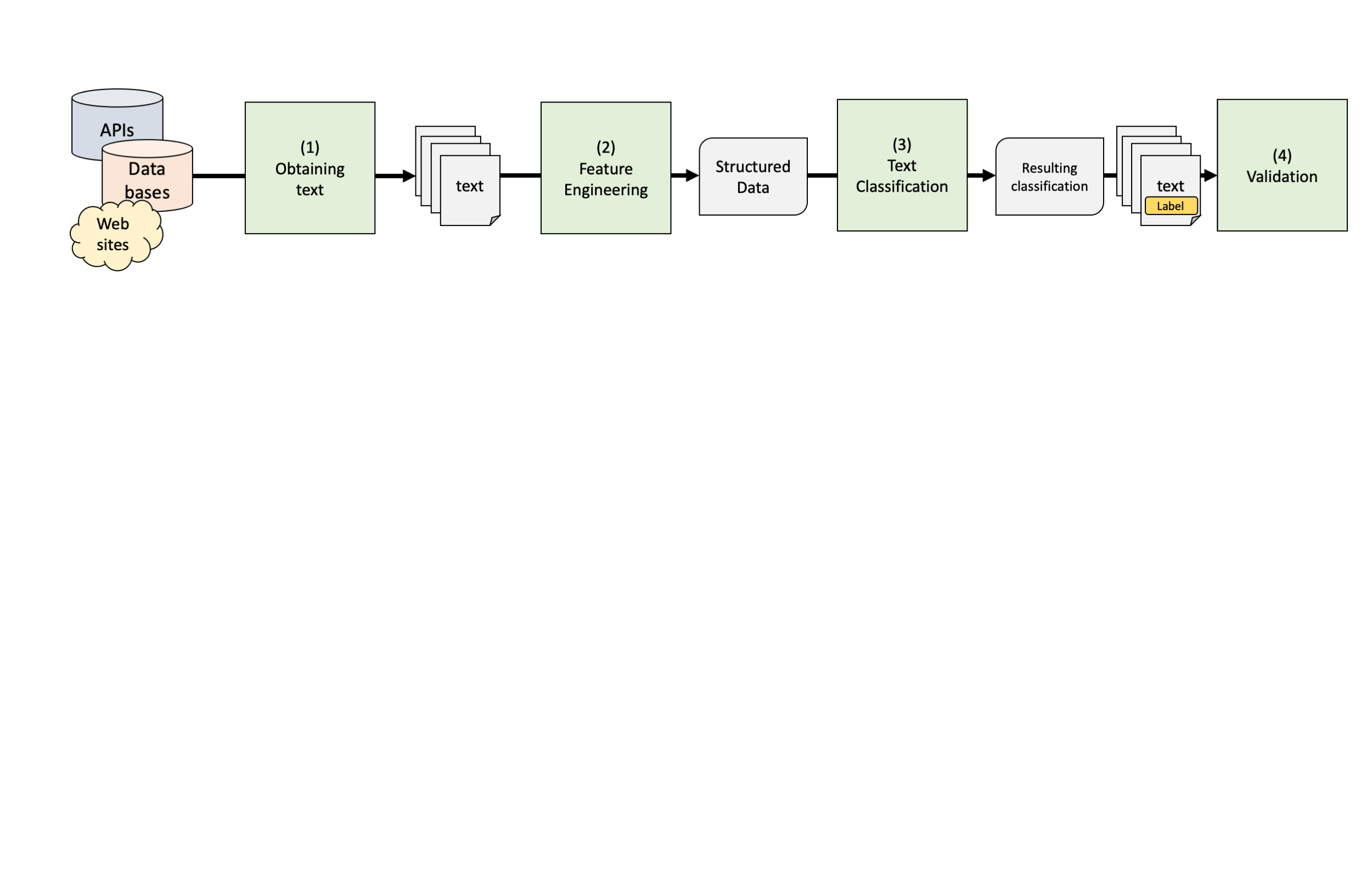

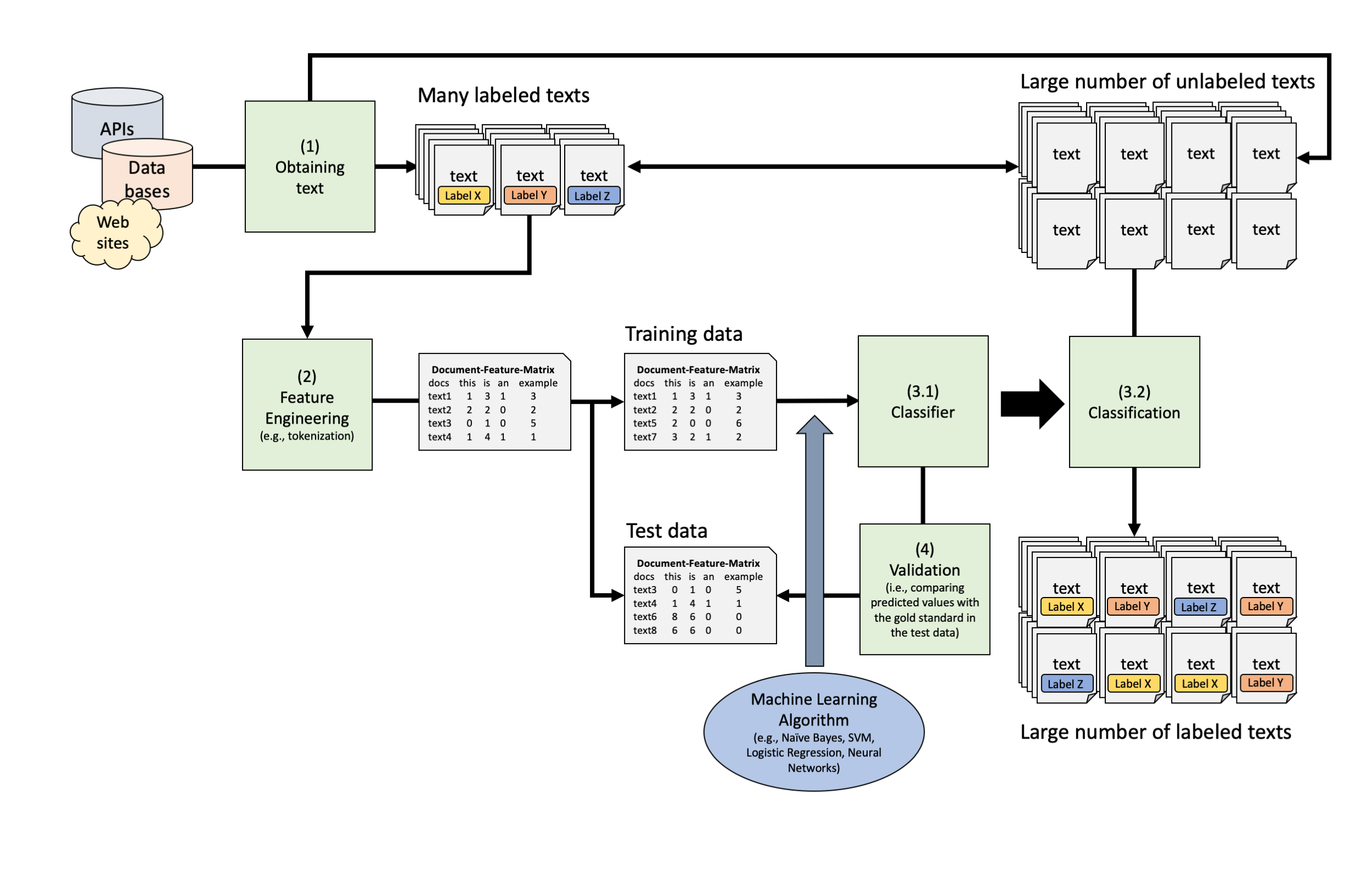

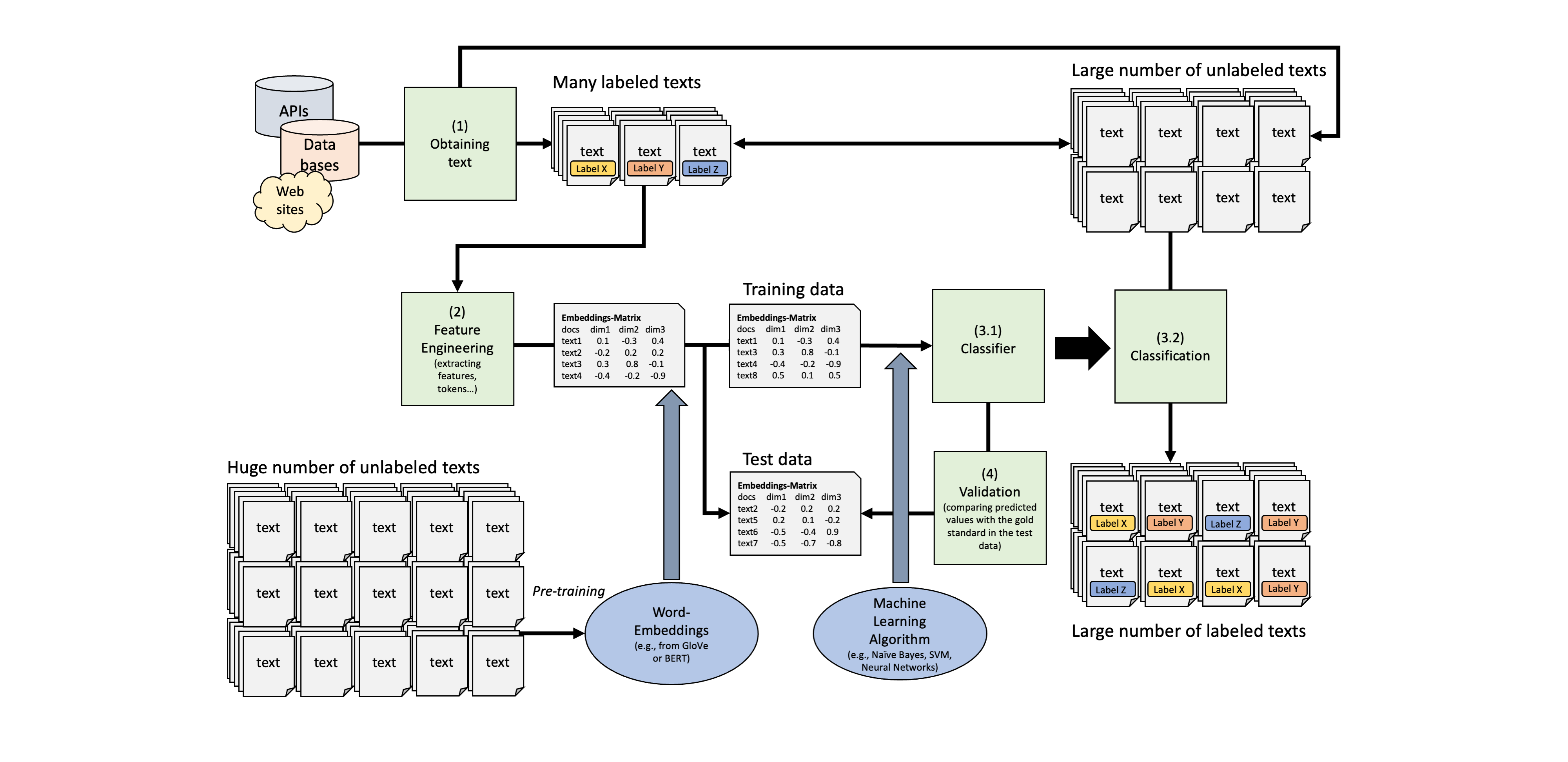

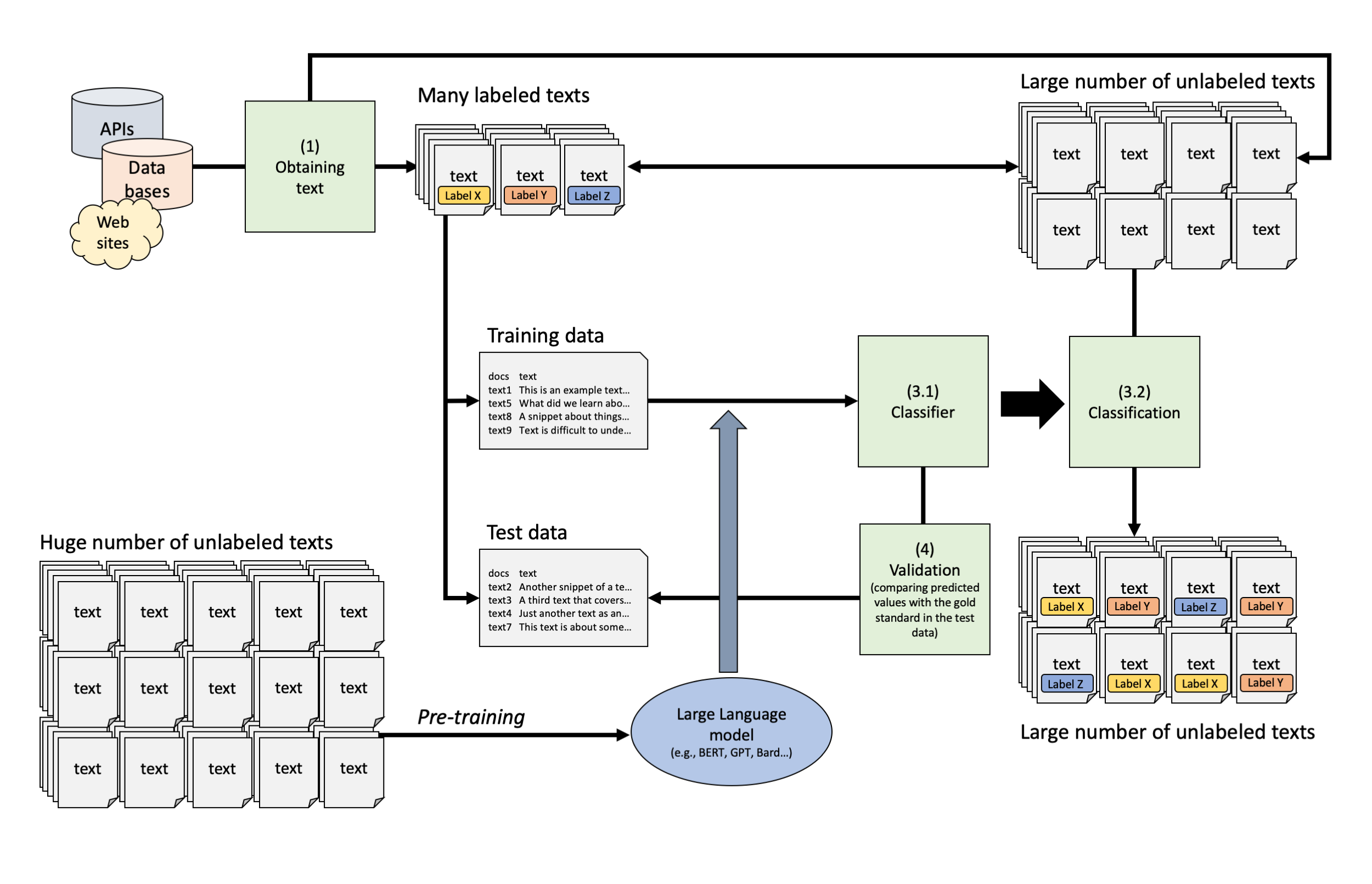

The classic machine learning approach

A lot of different steps…

Training takes a lot of data and time… and performance was still somewhat

What if things were easier?

Wouldn’t it be great if we would not have to wrangle with the data, not engage in any text preprocessing, and simply let the “computer” figure this out?

In fact, aren’t we living in times where we can simply ask the computer, much like Theodore does in the movie “Her”?

Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Classify this scientific abstract: Rotation Invariance Neural Network Rotation invariance and translation invariance have great values in image

recognition tasks. In this paper, we bring a new architecture in convolutional

neural network (CNN) named cyclic convolutional layer to achieve rotation

invariance in 2-D symbol recognition. We can also get the position and

orientation of the 2-D symbol by the network to achieve detection purpose for

multiple non-overlap target. Last but not least, this architecture can achieve

one-shot learning in some cases using those invariance.

Pick one of the following fields.

Provide only the name of the field:

Physics

Computer Science

Statistics

--------------------------------------------------------------

assistant: Computer Science

--------------------------------------------------------------

Content of this Lecture

Machine Learning vs. Deep Learning

1.1. Reminder: Text Classification Pipeline

1.2. How did the Field move on?

The Rise of Transformers and Transfer Learning

2.1. Overview

2.2. Transfer Learning

2.3. Architecture of the Transformer Model

2.4. The Transformer Text Classification Pipeline Using BERT

Large Language Models: BERT, Llama, GPT and Co

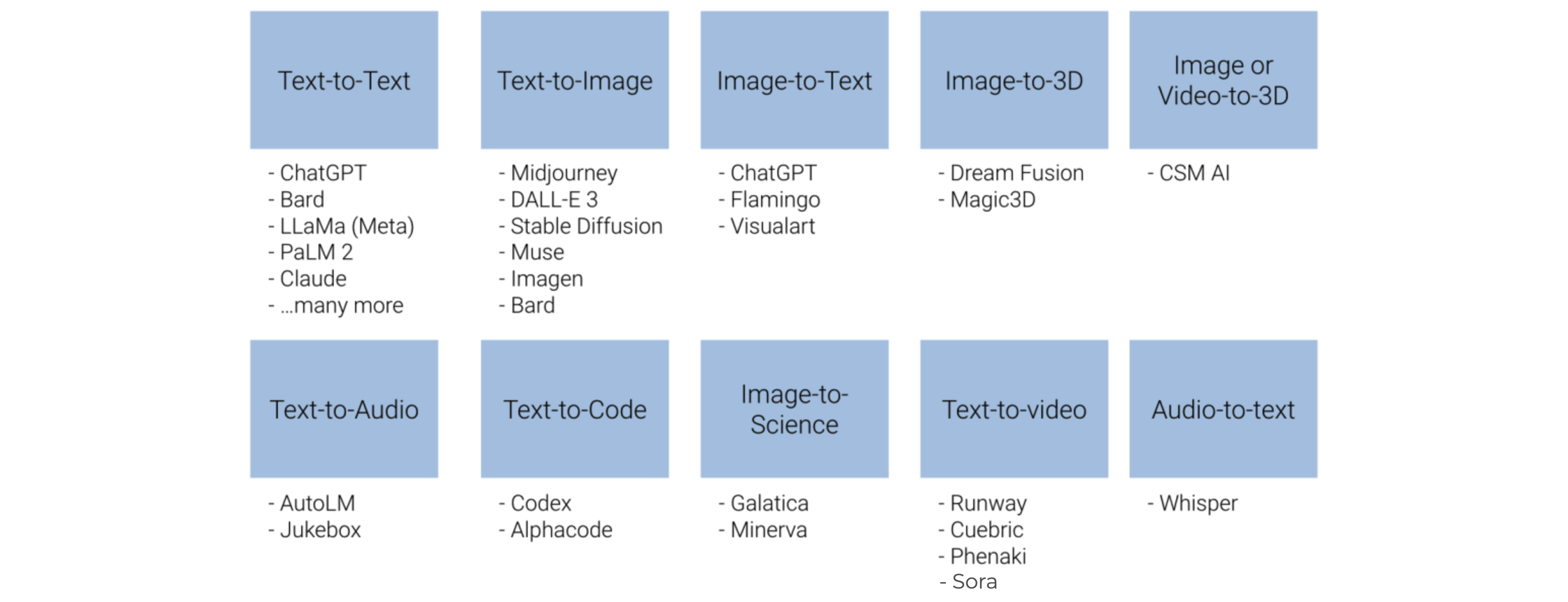

3.1. What are Large Language Models and Generative AI?

3.2. General Idea: Next-Token-Prediction

3.3. A Peek into the Architecture of GPT

Using LLMs for Text Classification

4.1. Zero-Shot, One-Shot, and Few-Shot Classification

4.2. Text Classification Using Llama and GPT

4.3. Validation, validation, validation!

4.4. Examples in the Literature

Summary and conclusion

5.1. State-of-the-Art in Classification

5.2. Ethical considerations

5.3. Conclusion

Machine Learning vs. Deep Learning

Our Text Classification Pipeline

Classic Machine Learning (1990-2013)

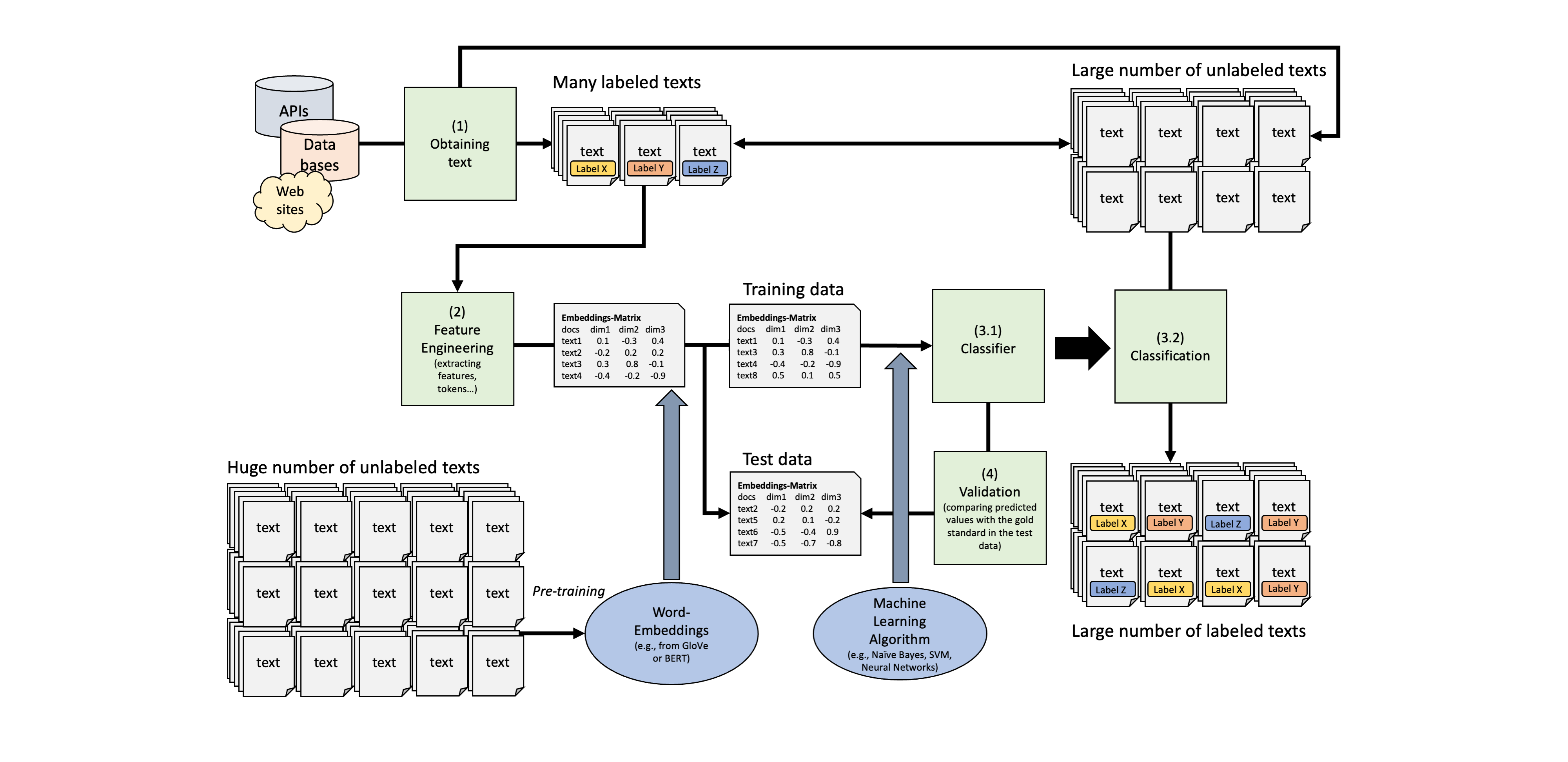

Word-Embeddings (2013-2020)

But massive advancements in recent years

Massive advancement in how text can be represented as numbers

- From simple word counts to word embeddings

- From static to contextual word embeddings

- Increasingly better embedding of meaning

Pretraining and transfer learning

- Word embeddings can be trained on large-scale corpora

- Pretrained word embeddings can be fine-tuned (with less training data) and then used for downstream tasks

Transformers and Generative AI

- Larger and larger “language models”

- New mechanisms for better embedding (e.g., Attention)

- Conversational frameworks and Generative AI

The Rise of Transformers and Transfer Learning

Origin of the Transformer

Until 2017, the state of the art in natural language processing relied on deep neural networks (e.g., recurrent neural networks, long short-term memory, and gated recurrent neural networks)

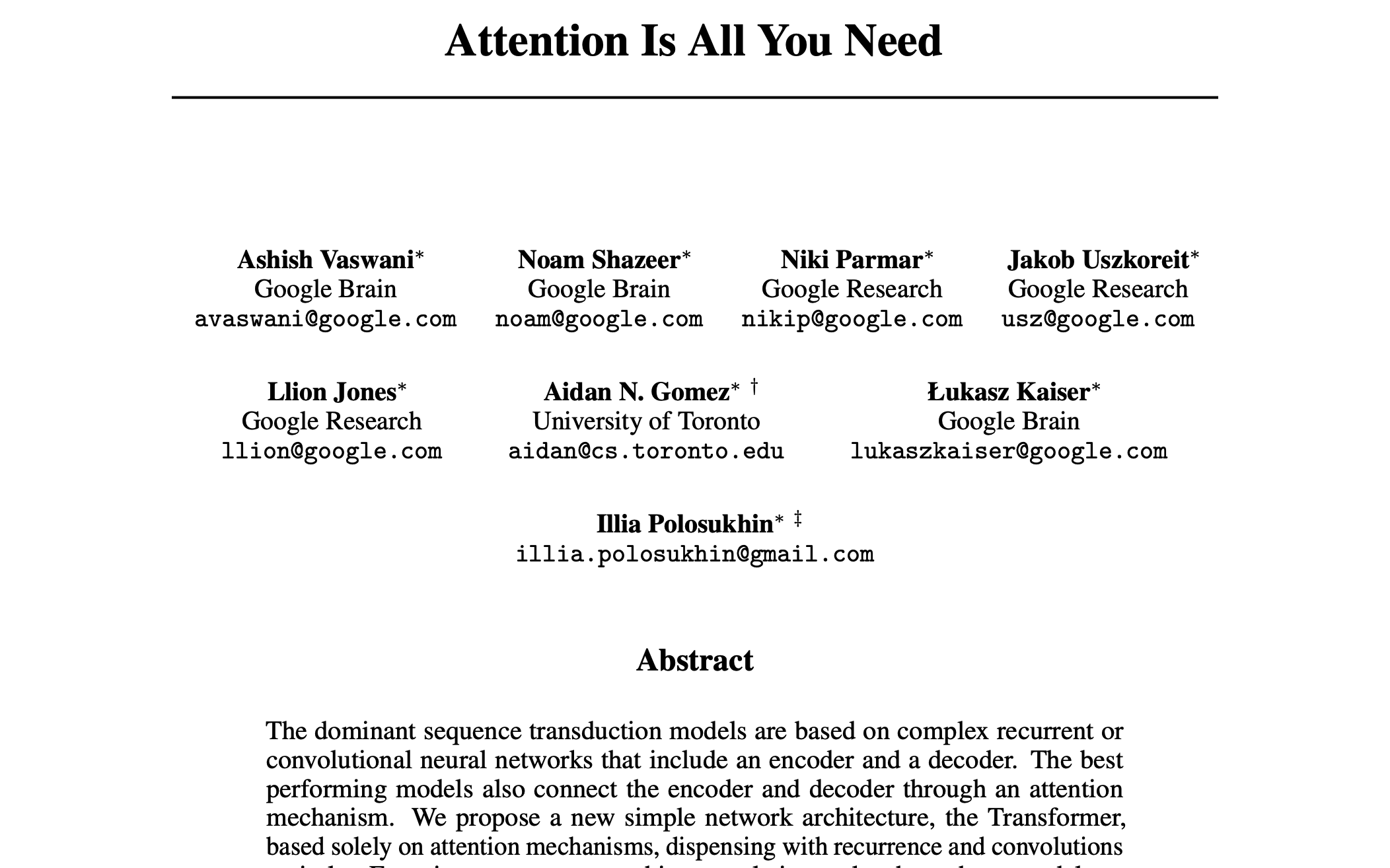

In a preprint called “Attention is all you need”, published in 2017 and cited more than 95,000 times, the team of Google Brain introduced the so-called Transformer

It represents a neural network-type architecture that learns context and thus meaning by tracking relationships in sequential data like the words in this sentence

What was so special about transformers?

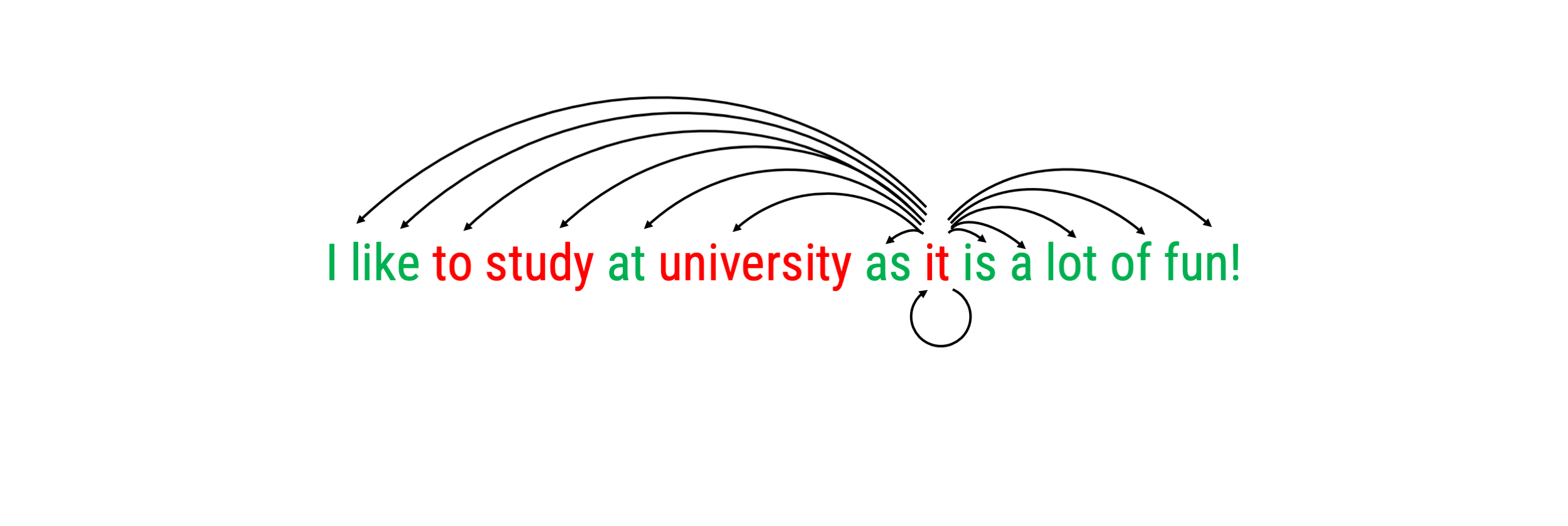

Transformer models apply an evolving set of mathematical techniques, called attention or self-attention, to detect subtle ways even distant data elements in a series influence and depend on each other.

The proposed network structure had notable characteristics:

- No need for recurrent or convolutional network structures

- Based solely on attention mechanism (stacked on top of one another)

- Required less training time (can be parallelized)

It thereby outperformed prior state-of-the-art models in a variety of tasks

The transformer architecture is the back-bone of all current large language models and so far drives the entire “AI revolution”

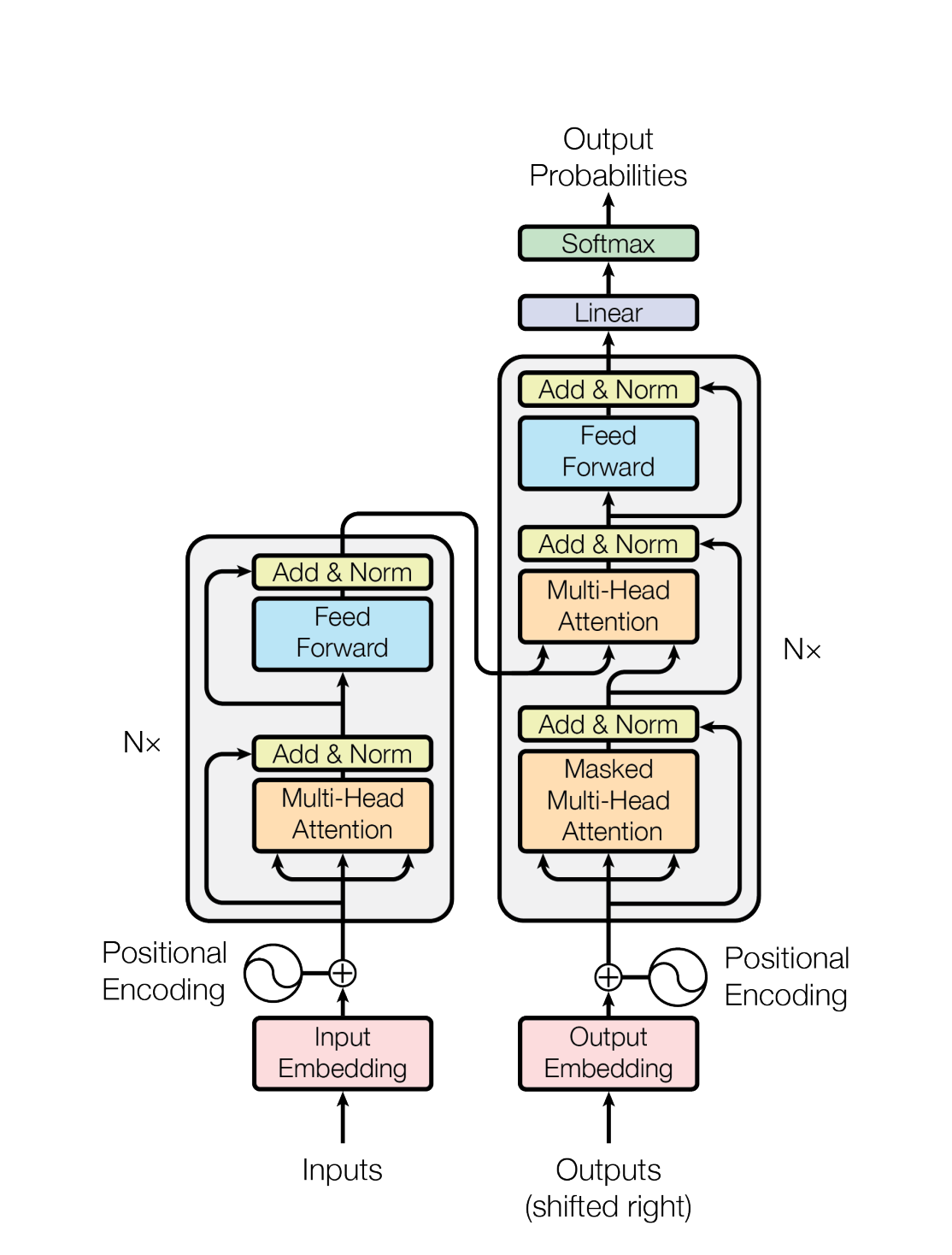

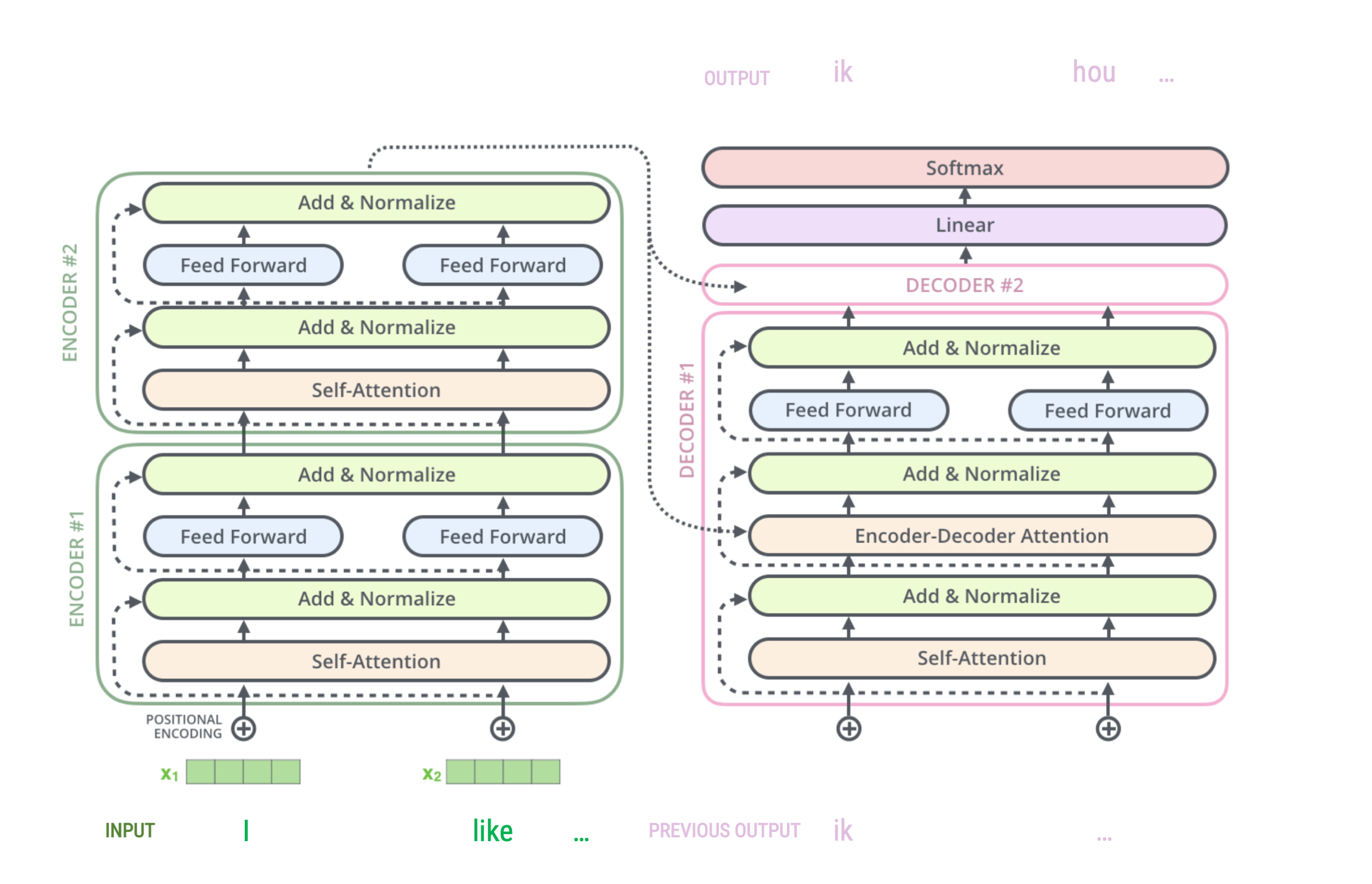

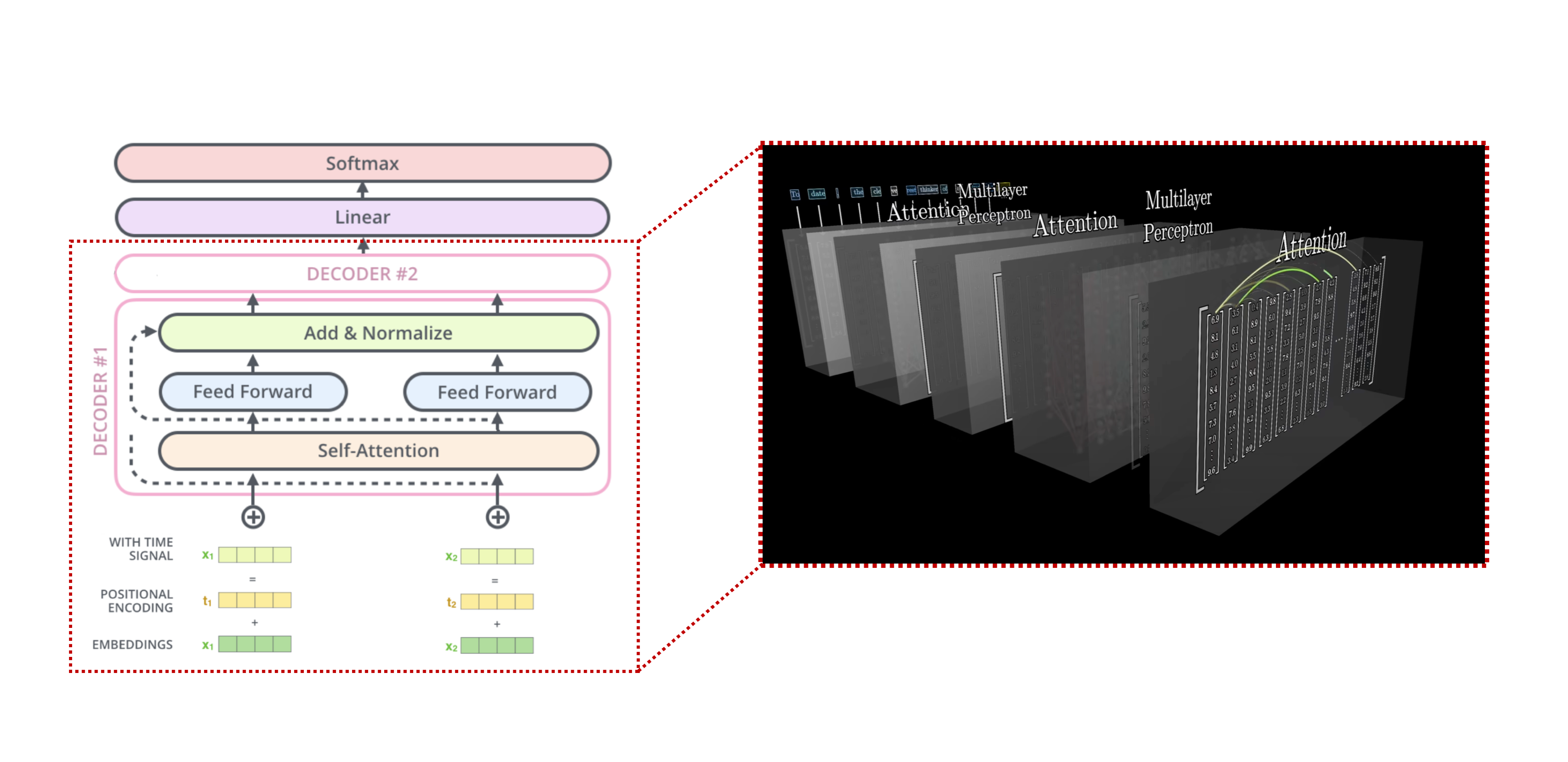

Overview of the architecture

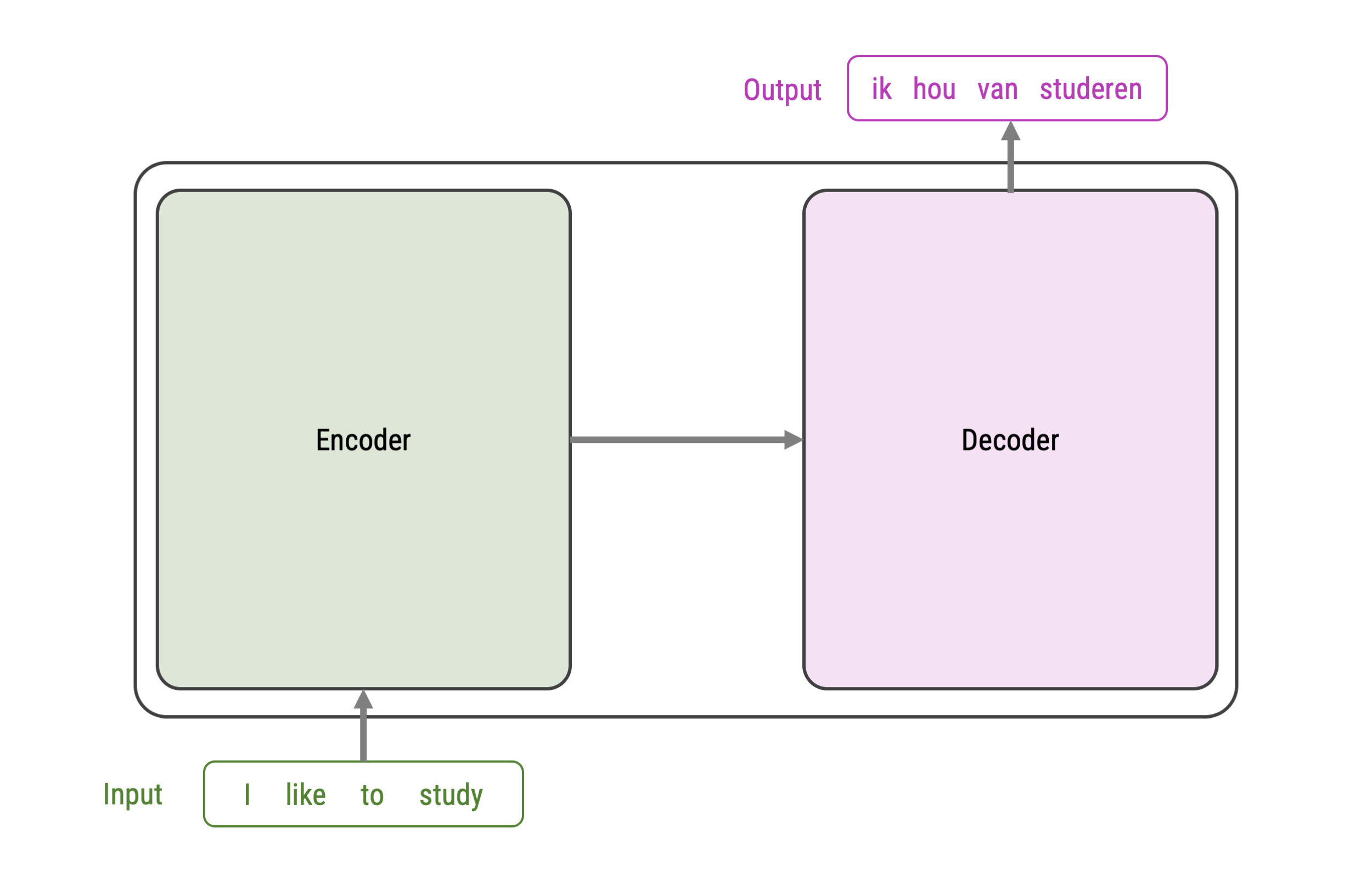

The figure on the right represents an abstract overview of a transformer’s architecture

It can be used for next-token-predictions

- classic example is translation: e.g., English-to-Dutch

- but also: question-to-answer, text-to-summary, sentence-to-next-word…

- and thus also for text classification: text-to-label

Although models can differ, they generally include:

- An encoder-decoder framework

- Word embeddings + positional embedding

- Attention and self-attention modules

Vaswani et al. 2017

Basic Encoder-Decoder Framework (for Translation)

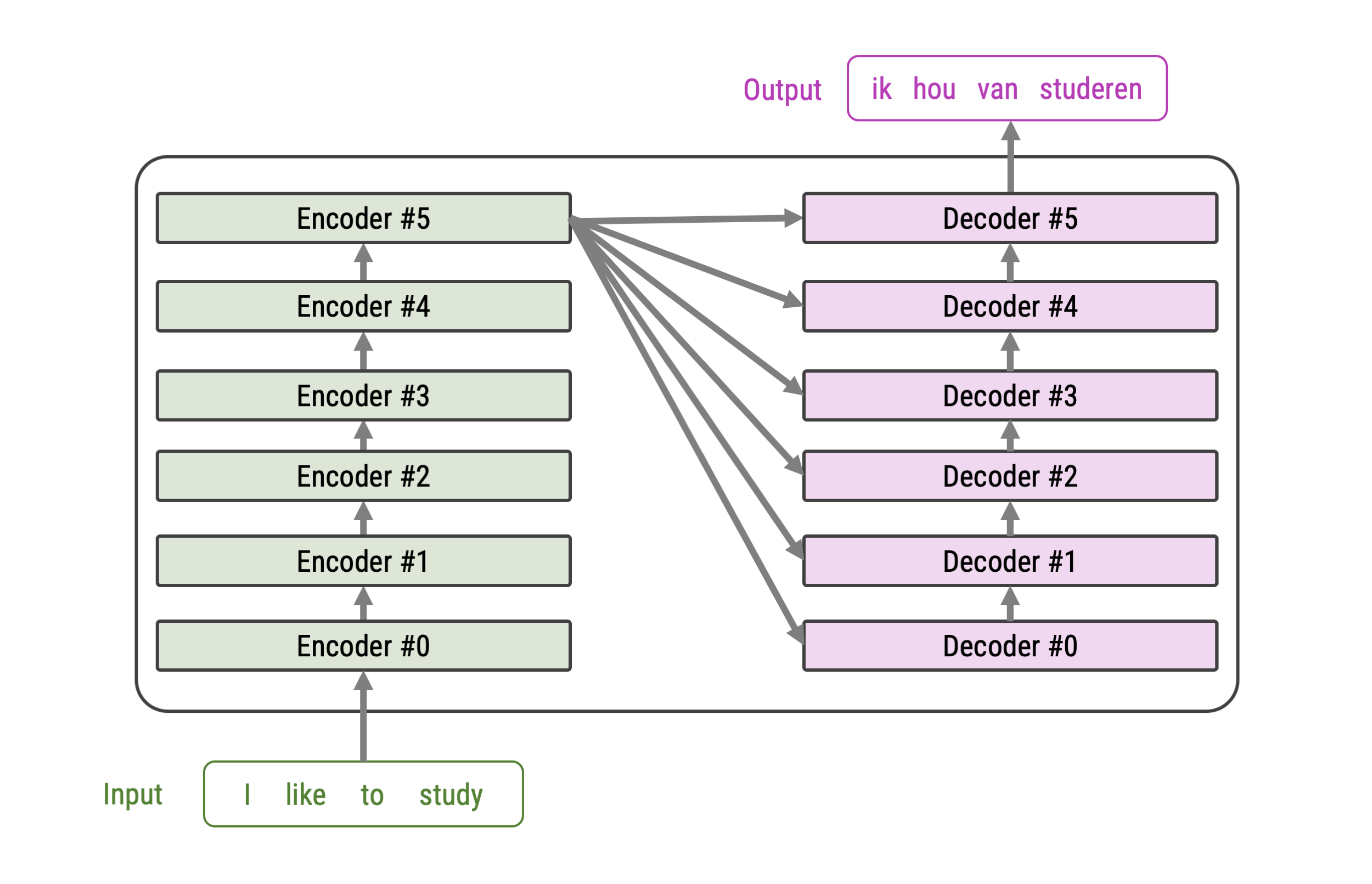

Stacked Encoders and Decoders

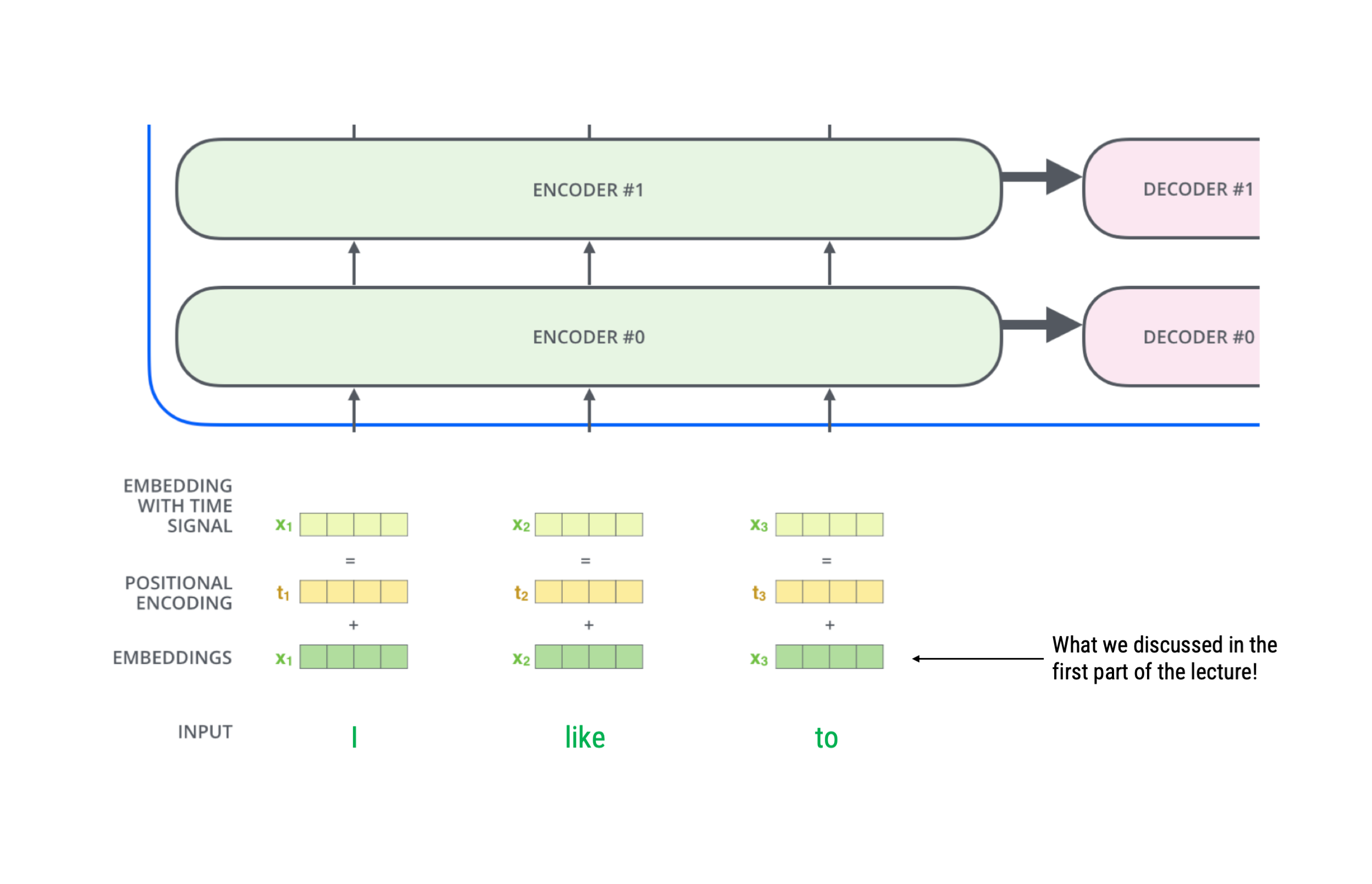

More elaborate encoding of words

Source: Alammar, 2018

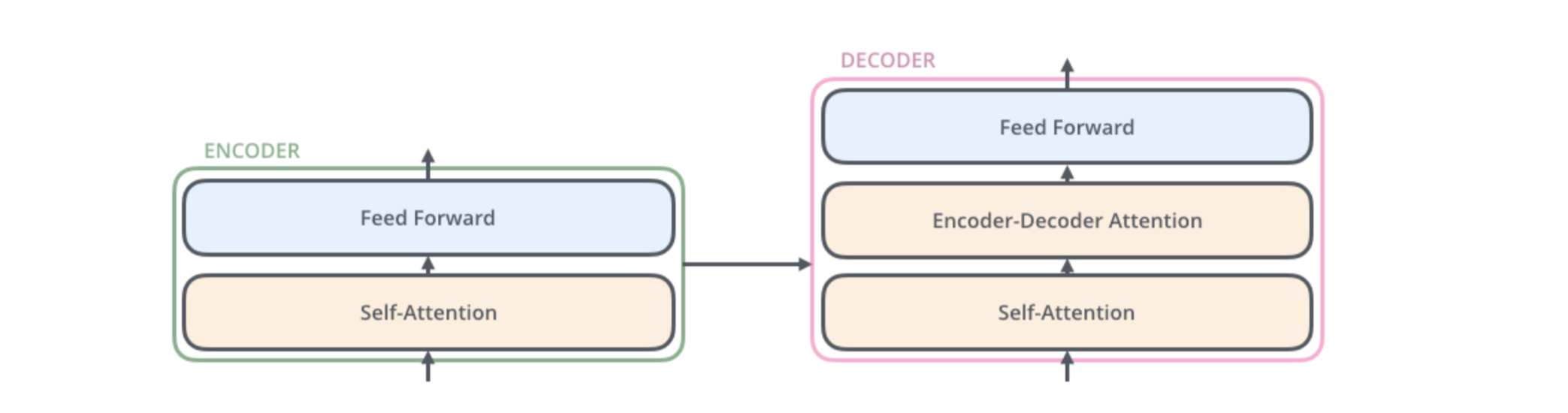

Inside of an encoder and a decoder

The word, position, and time signal embeddings are passed to the first encoder

Here, they flow through a self-attention layer, which further refines the encoding by “looking at other words” as it encodes a specific word

The outputs of the self-attention layer are fed to a feed-forward neural network.

The decoder has both of these layers as well, but also an extra attention layer that helps it focus on different parts of the input (e.g., the encoder’s outputs)
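The position signal that is added to the word embeddings can be sketched in a few lines of R. This is a minimal sketch of the sinusoidal positional encoding described by Vaswani et al. (2017); the function name `positional_encoding()` and the values for `n_positions` and `d_model` are assumptions chosen for illustration, not part of any package used in this course.

```r
# Sinusoidal positional encoding as described in Vaswani et al. (2017).
# n_positions and d_model are illustrative values, not taken from the slides.
positional_encoding <- function(n_positions, d_model) {
  stopifnot(d_model %% 2 == 0)
  pos <- 0:(n_positions - 1)                        # token positions, starting at 0
  i <- 0:(d_model / 2 - 1)                          # index of each sine/cosine pair
  angle <- outer(pos, 1 / 10000^(2 * i / d_model))  # n_positions x (d_model / 2)
  pe <- matrix(0, nrow = n_positions, ncol = d_model)
  pe[, seq(1, d_model, by = 2)] <- sin(angle)       # columns 1, 3, ... hold the sine terms
  pe[, seq(2, d_model, by = 2)] <- cos(angle)       # columns 2, 4, ... hold the cosine terms
  pe
}

pe <- positional_encoding(n_positions = 5, d_model = 8)
dim(pe)
```

Each row is a vector that is added to the embedding of the word at that position, so that the otherwise order-blind attention layers can tell the first word from the fifth.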

Source: Alammar, 2018

Putting it all together

Source: Alammar, 2018

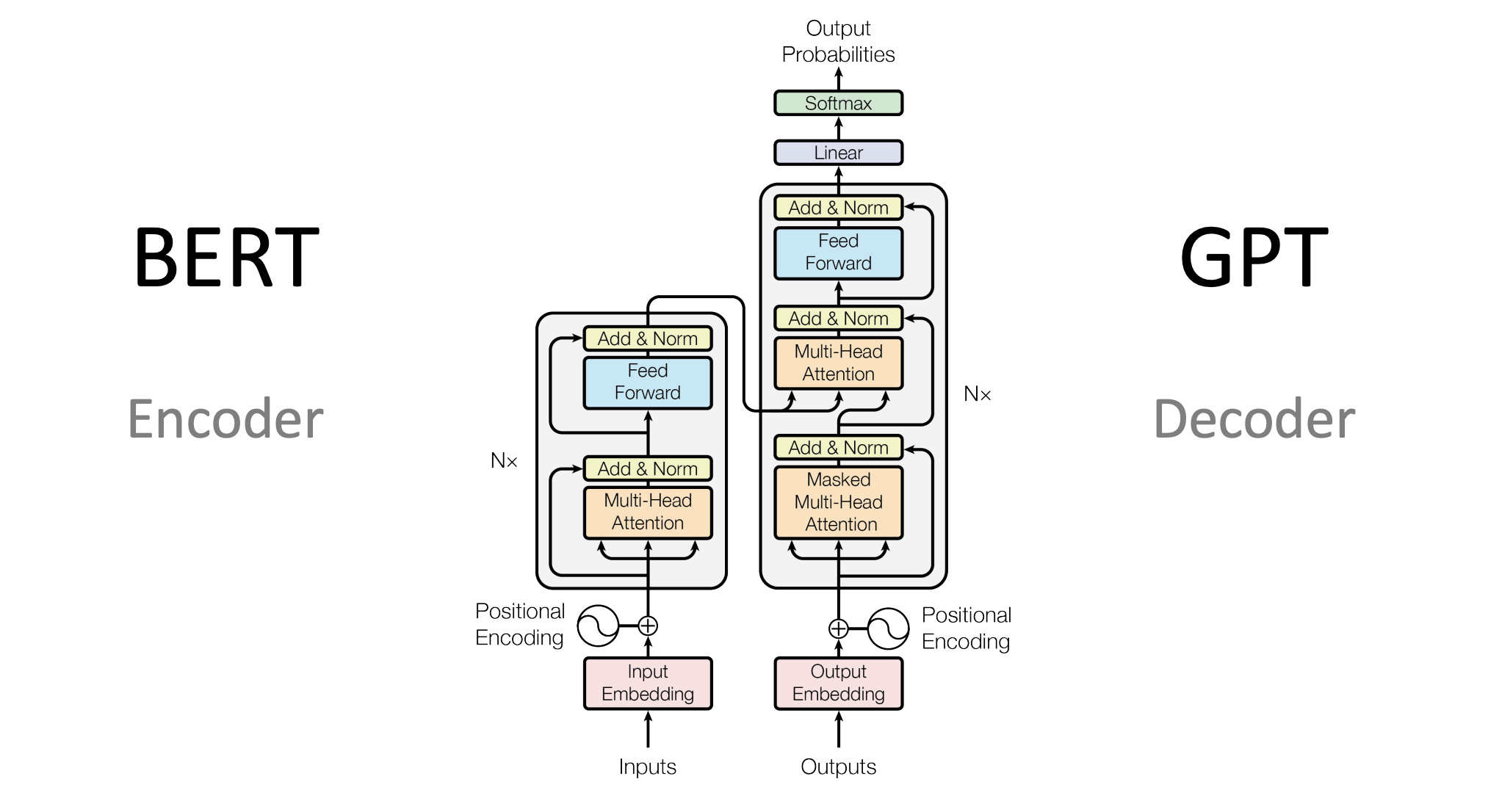

Different Types of Models for different tasks

BERT vs. GPT (or Llama)

Encoder-Decoder Transformers:

- BART (Lewis et al., 2019): translation, but also text generation, …

Encoder-Only Transformer

- BERT (Devlin et al., 2019): Embedding-only, then down-stream tasks…

Decoder-Only Transformer:

- GPT-series (OpenAI): Text generation, …

- Llama (Meta): Text generation…

Text Classification with Transformers, Encoder-Only

Text Classification with Transformers, Decoder-Only

Pre-training, Fine-tuning, and Transfer Learning

Generally, transformer models are pre-trained using specific natural language processing tasks

- Masked Language Modelling (MLM): simply mask some percentage of the input tokens at random, and then predict those masked tokens

- Next sentence prediction (NSP): predict whether sentence B actually follows sentence A, to model relationships between sentences
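The masking task can be illustrated with a toy example in base R. This is a simplified sketch: the helper `mask_tokens()` and the example sentence are made up for illustration, and the 15% masking rate follows the BERT paper (which additionally replaces some of the selected tokens with random words or leaves them unchanged, a detail omitted here).

```r
# Toy illustration of masked language modelling: hide a random share of tokens.
# The 15% masking rate follows the BERT paper; the sentence is made up.
mask_tokens <- function(tokens, prop = 0.15, mask = "[MASK]") {
  n_mask <- max(1, round(length(tokens) * prop))   # mask at least one token
  idx <- sort(sample(seq_along(tokens), n_mask))   # positions to hide
  masked <- tokens
  masked[idx] <- mask
  list(input = masked, positions = idx, targets = tokens[idx])
}

set.seed(1)
tokens <- strsplit("the transformer learns context by tracking relationships between words", " ")[[1]]
example <- mask_tokens(tokens)
example$input     # what the model sees
example$targets   # what the model has to predict
```

During pre-training, the model is rewarded for recovering `targets` from `input`, which requires no human annotation at all.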

The general idea was to use a pre-trained model and then “fine-tune” it on the specific tasks it is supposed to perform (e.g., annotating text with topics or sentiment)

Although the transformer’s architecture has made training more efficient (due to the ability to parallelize), it nonetheless requires significant computing power to fine-tune a model

As pre-training often involves tasks that differ from what we ultimately want the model to do, this is often denoted as “transfer learning”, i.e., a type of learning that transfers to other tasks as well

As transformer-based models become larger and larger, the need for fine-tuning decreases as they already do well on down-stream tasks (e.g., using only prompt-engineering)

Very Large Language Models: Llama and GPT

Source: Christian Behler on Medium

What are large language models

A large language model (LLM) is a type of language model notable for its ability to achieve general-purpose language understanding and generation

LLMs acquire these abilities by using massive amounts of data to learn billions of parameters during training and consuming large computational resources during their training and operation

LLMs are still just a type of artificial neural network (mainly transformers!) and are pretrained using self-supervised learning and semi-supervised learning (e.g., Masked Language Modelling)

As so-called autoregressive language models, they take an input text and repeatedly predict the next token or word
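The autoregressive loop itself is simple and can be sketched with a toy model. In this hedged example, a table of word-pair counts (a bigram model) stands in for the transformer; the corpus and the helpers `predict_next()` and `generate()` are invented for illustration.

```r
# Toy autoregressive generation with a bigram model (counts of word pairs).
# Real LLMs replace the count table with a transformer, but the loop is the same idea.
corpus <- c("the", "model", "predicts", "the", "next", "token", "and",
            "the", "next", "token", "after", "that")
vocab <- unique(corpus)

# Count how often each word (columns) follows each other word (rows)
bigrams <- table(factor(head(corpus, -1), levels = vocab),
                 factor(tail(corpus, -1), levels = vocab))

predict_next <- function(word) {
  counts <- bigrams[word, ]
  names(counts)[which.max(counts)]   # greedy choice: most frequent follower
}

generate <- function(start, n_tokens) {
  out <- start
  for (i in seq_len(n_tokens)) {
    out <- c(out, predict_next(tail(out, 1)))  # append the prediction, then repeat
  }
  out
}

generate("the", 3)
```

Always picking the most frequent follower roughly corresponds to generating with a temperature of 0; sampling from the counts instead would make the output more varied.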

Current models

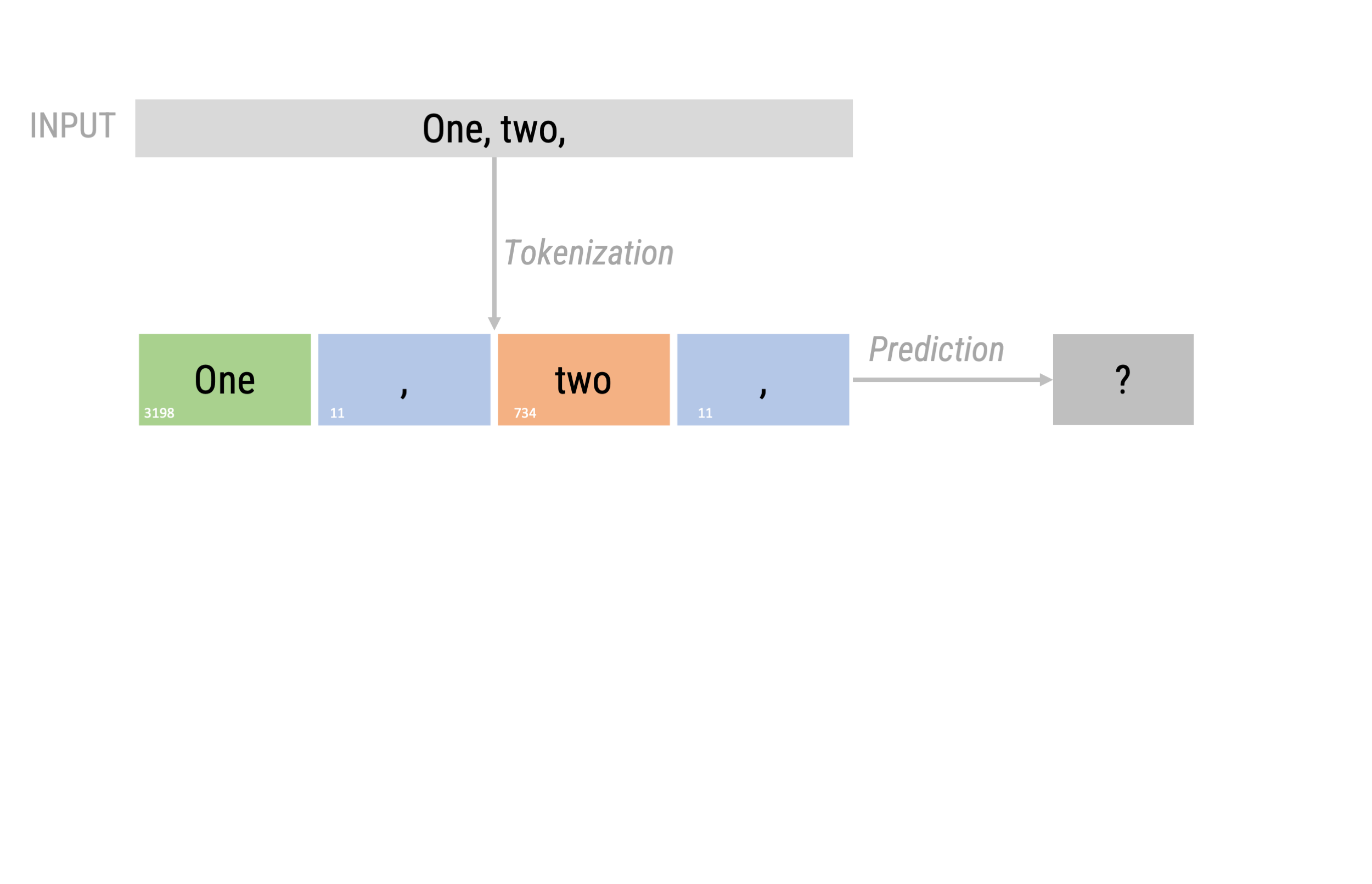

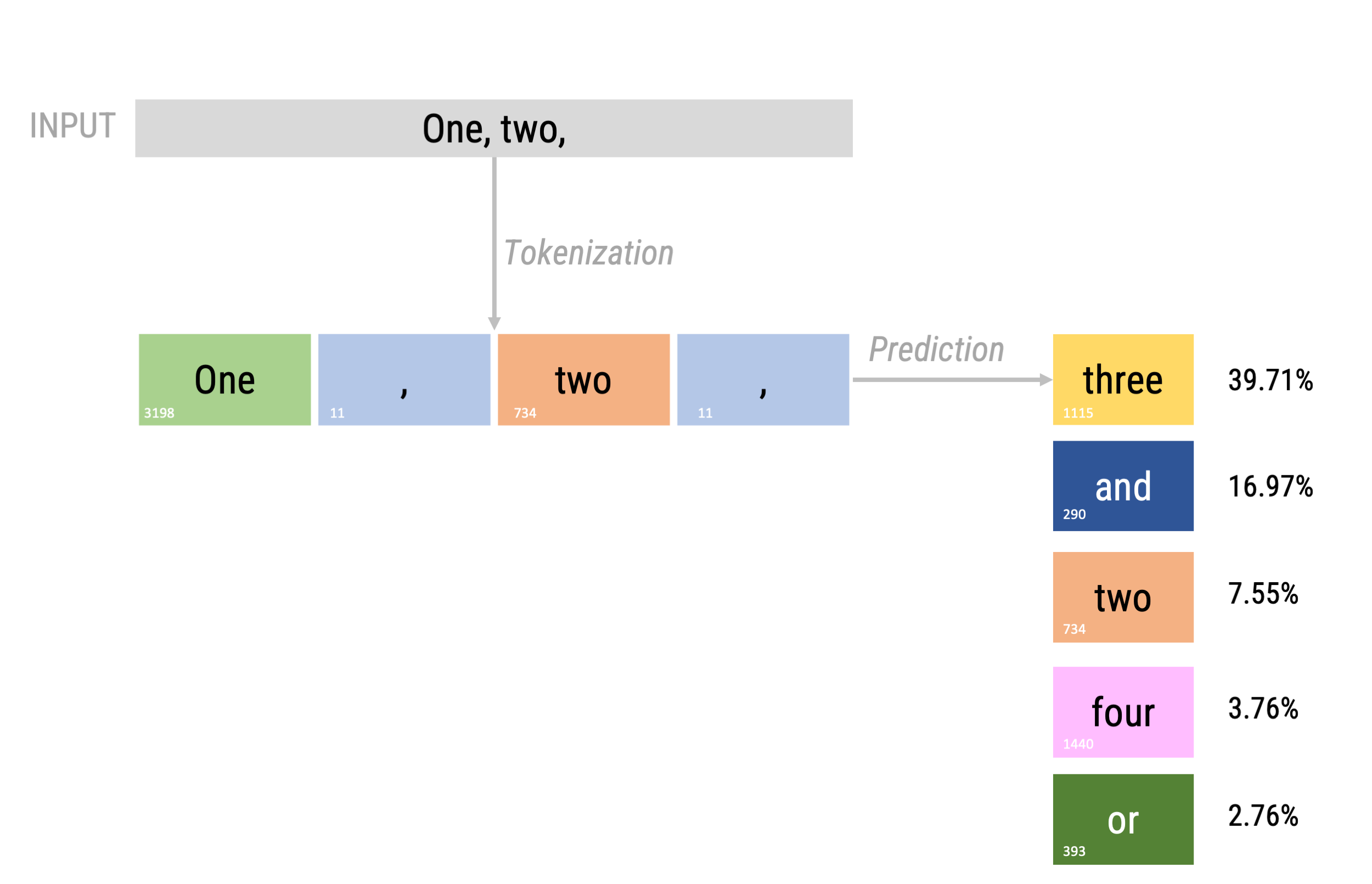

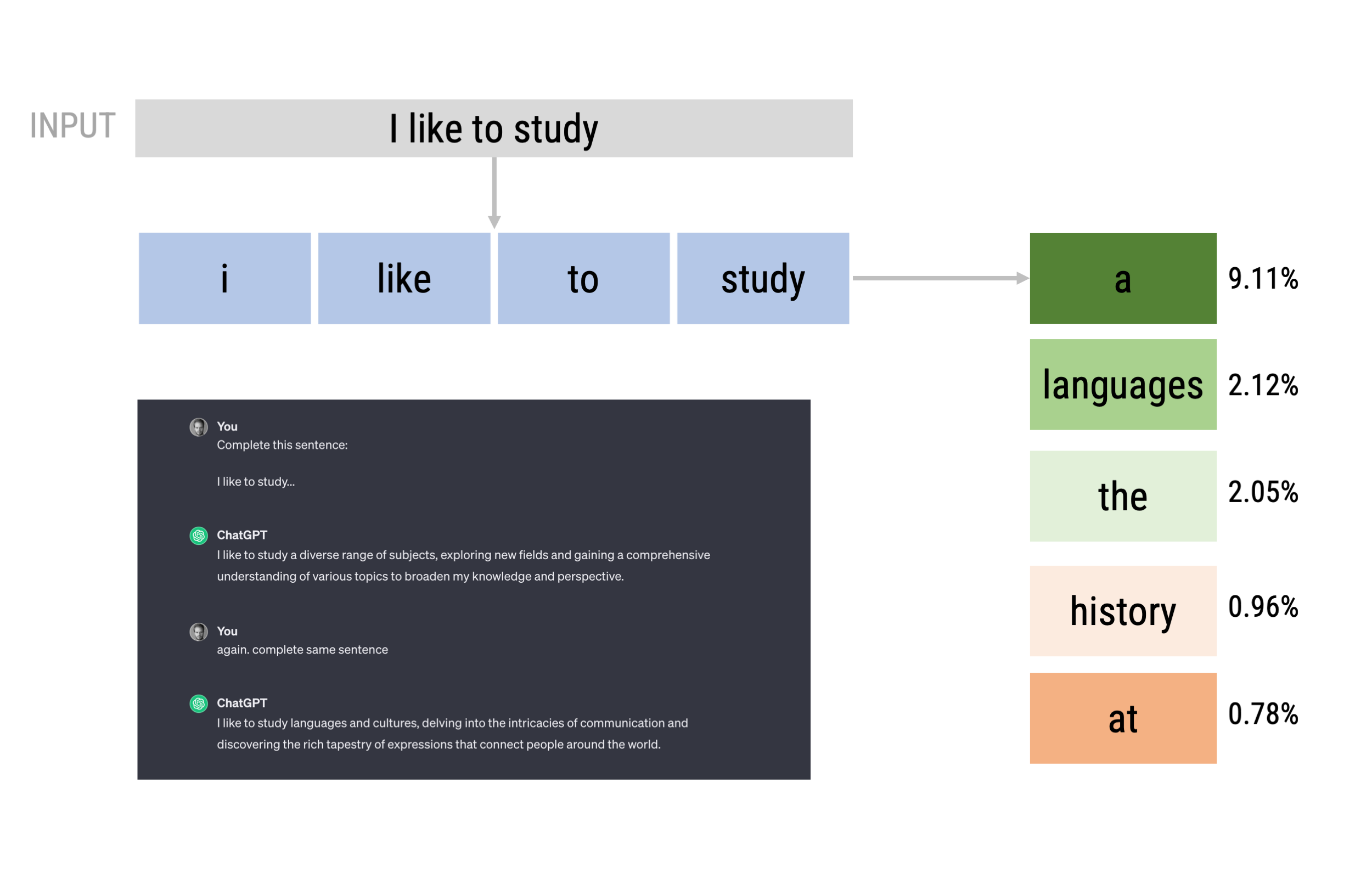

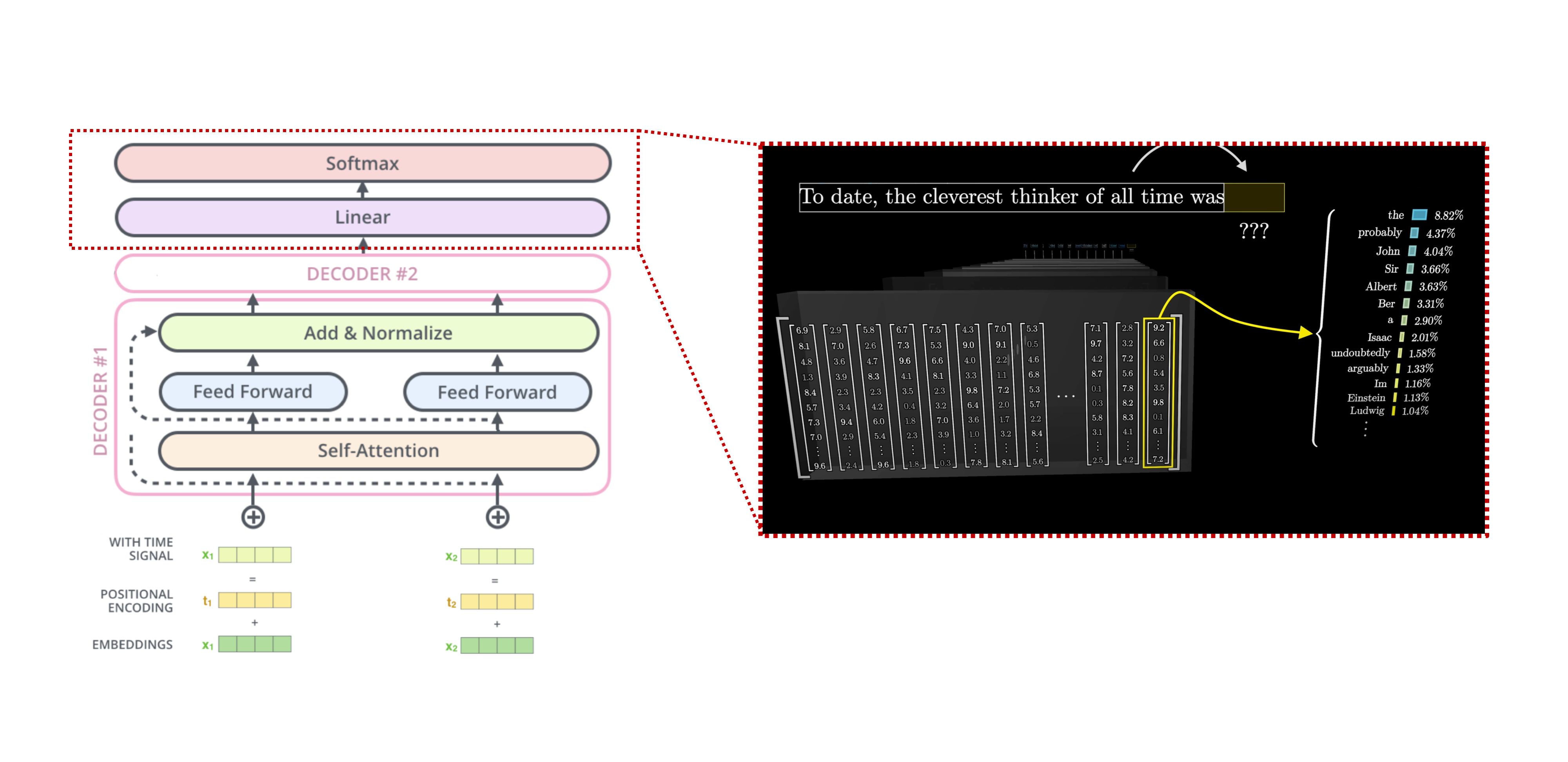

Next token prediction (as in GPT-2)


Next token prediction

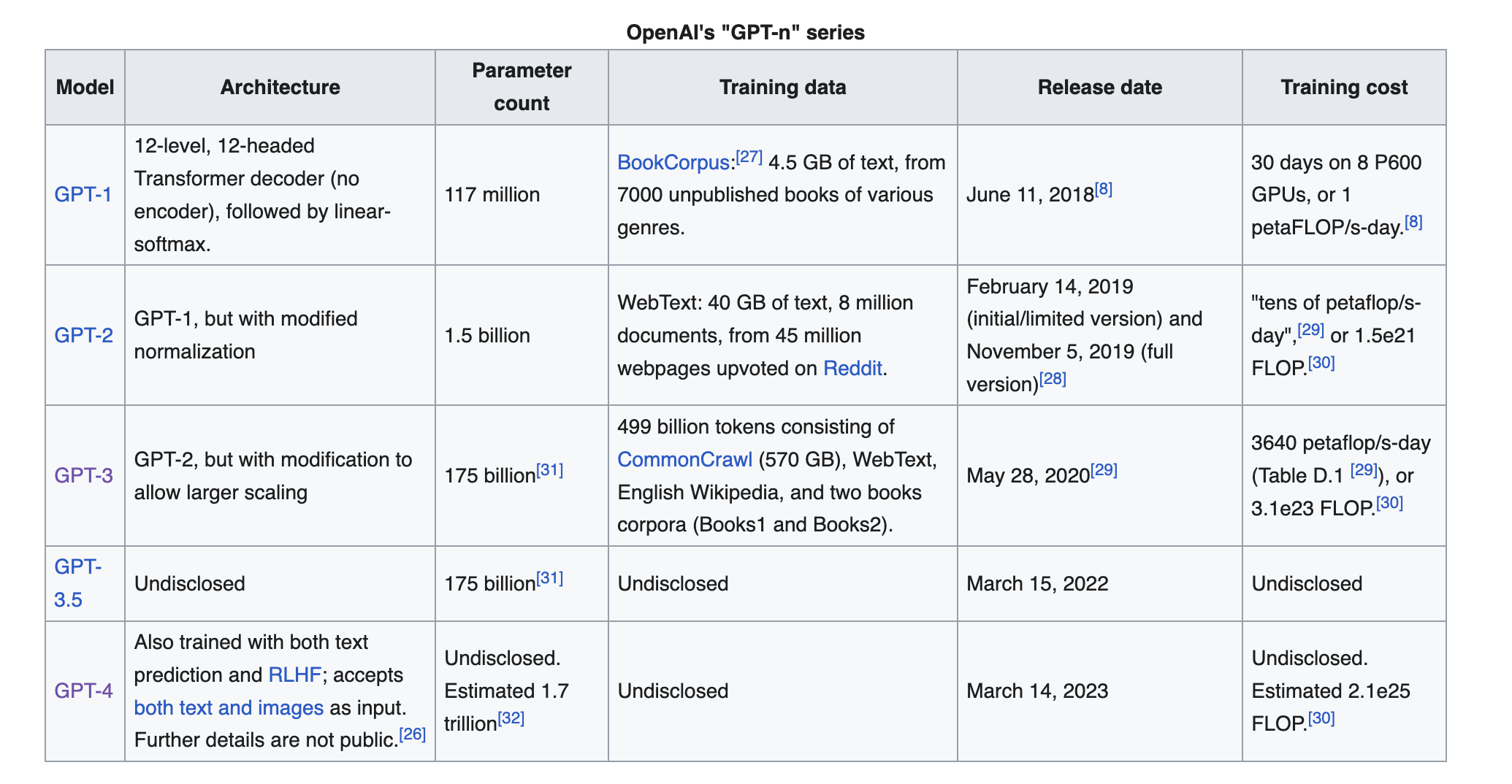

GPT-Series by OpenAI

Generative Pre-trained Transformer (GPT) is a series of state-of-the-art large language models developed by OpenAI.

Particularly GPT-3.5, released publicly in November 2022 together with a chat interface (ChatGPT), attracted a lot of public attention

Millions of users in a very short amount of time (faster than Facebook, Instagram, TikTok, etc…), now 1.5 Billion users

Overview of the GPT-series by OpenAI

Source: Wikipedia

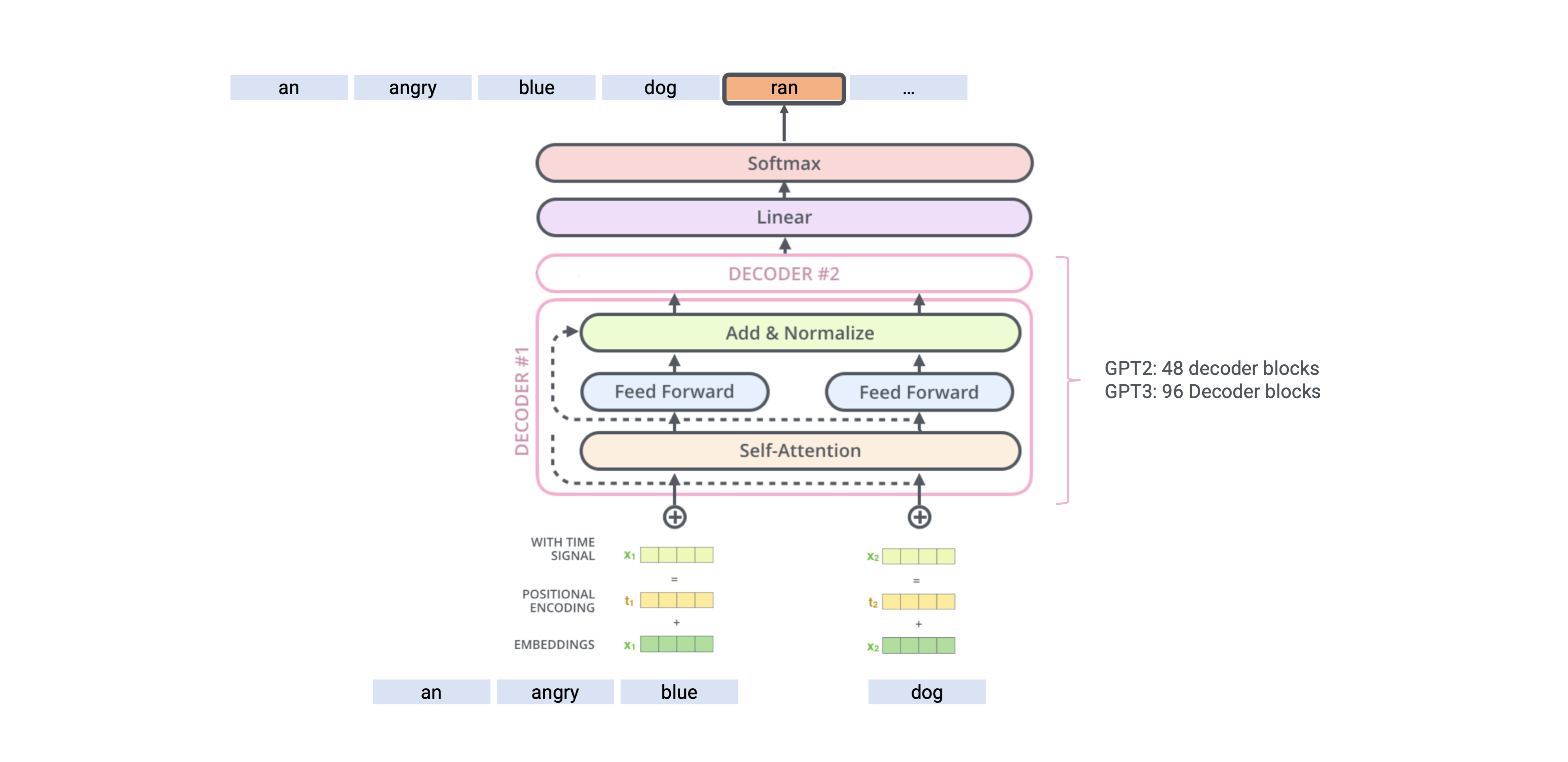

A Peek into the Architecture of GPT

Next-token-Prediction based on input text

Source: Adapted from Alammar, 2018

Intricate Meaning of Words


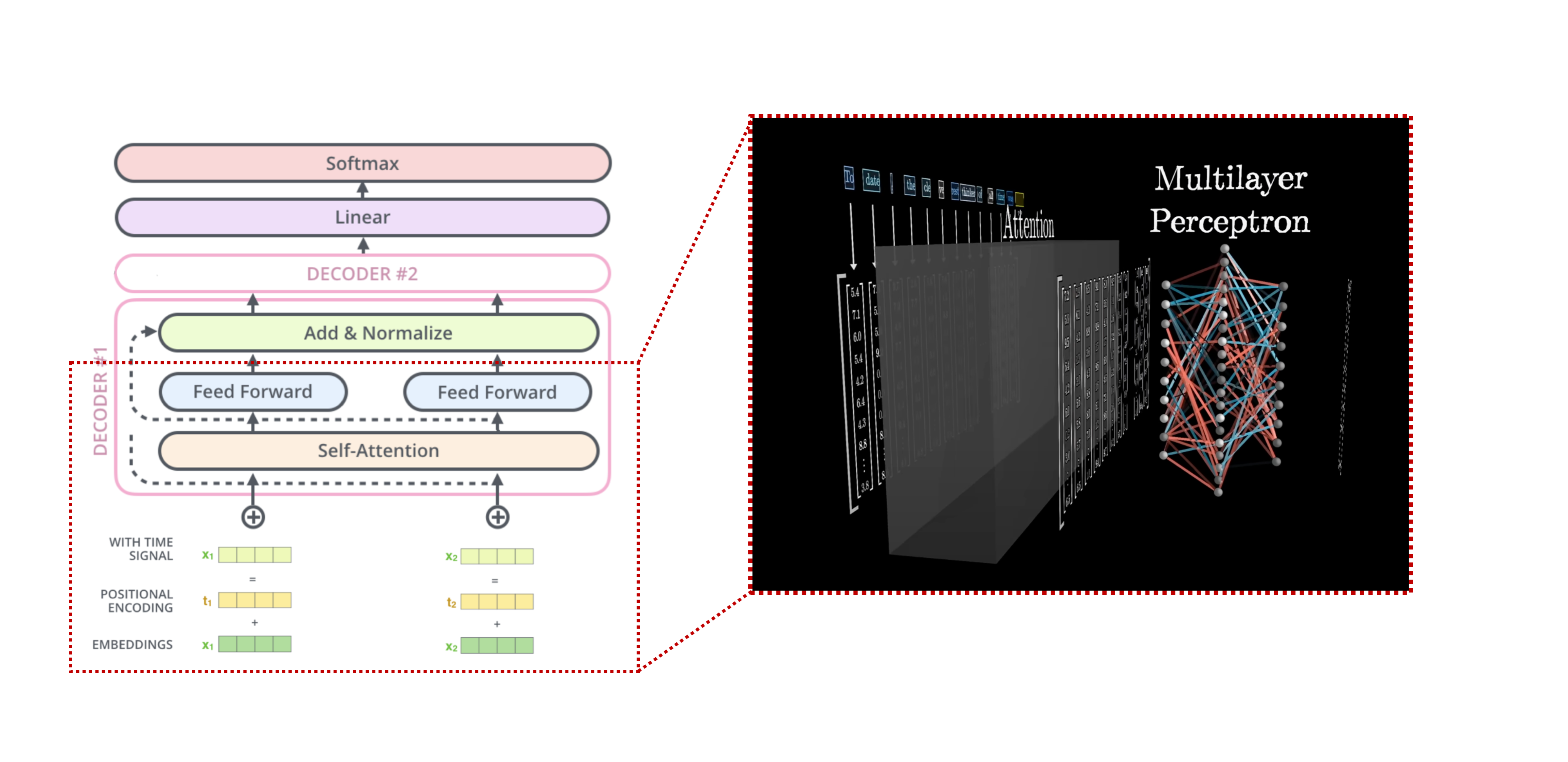

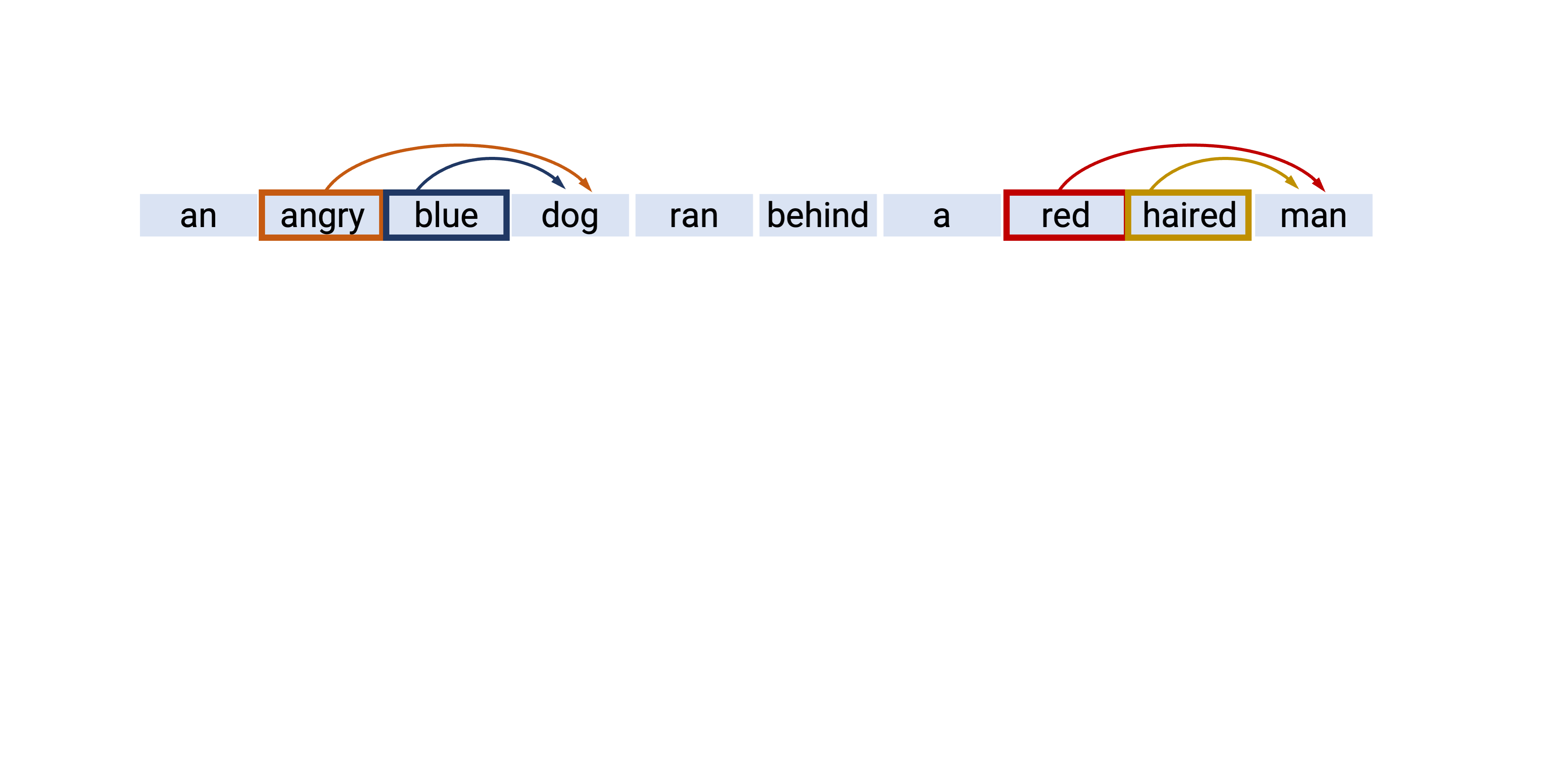

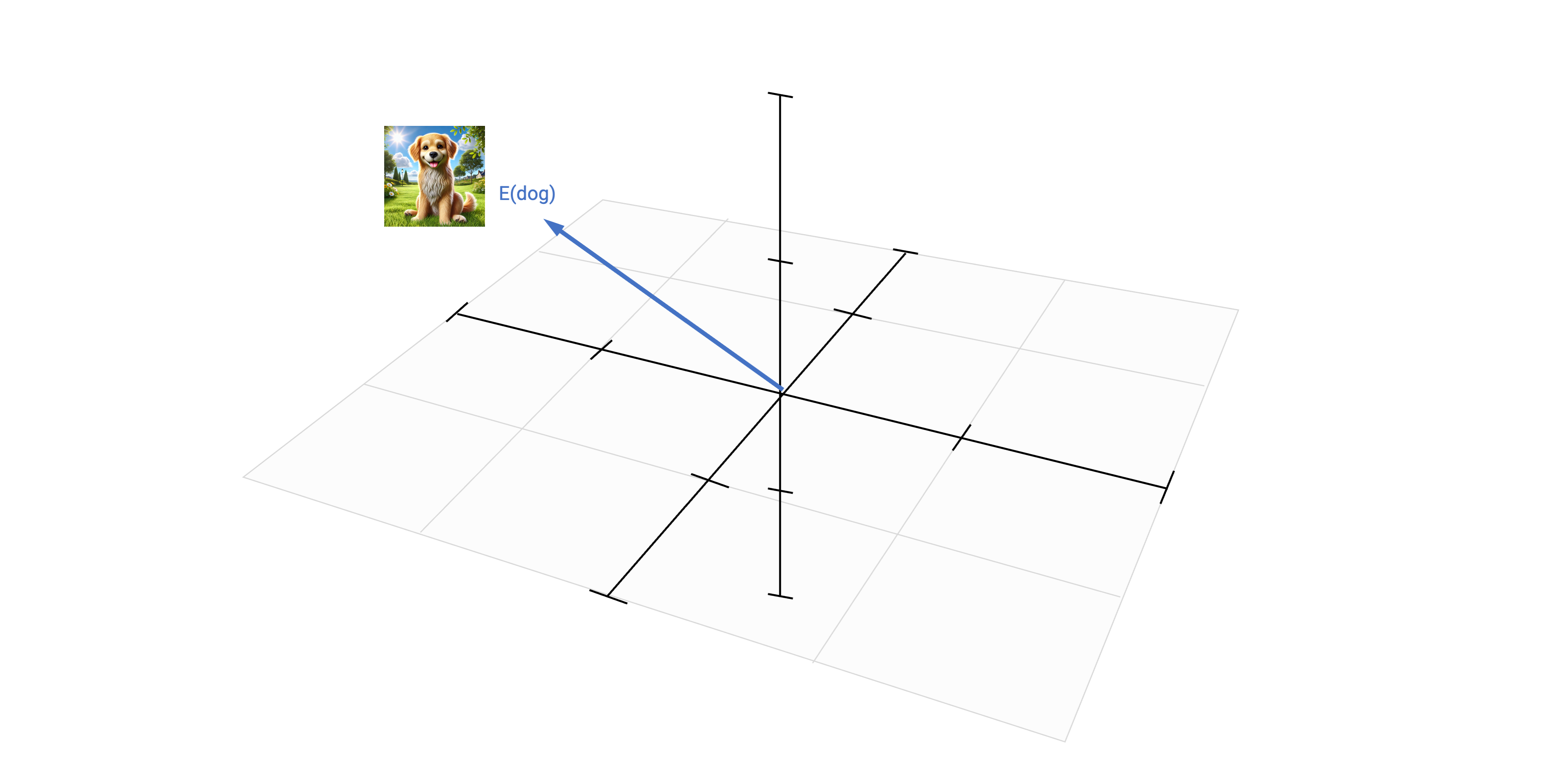

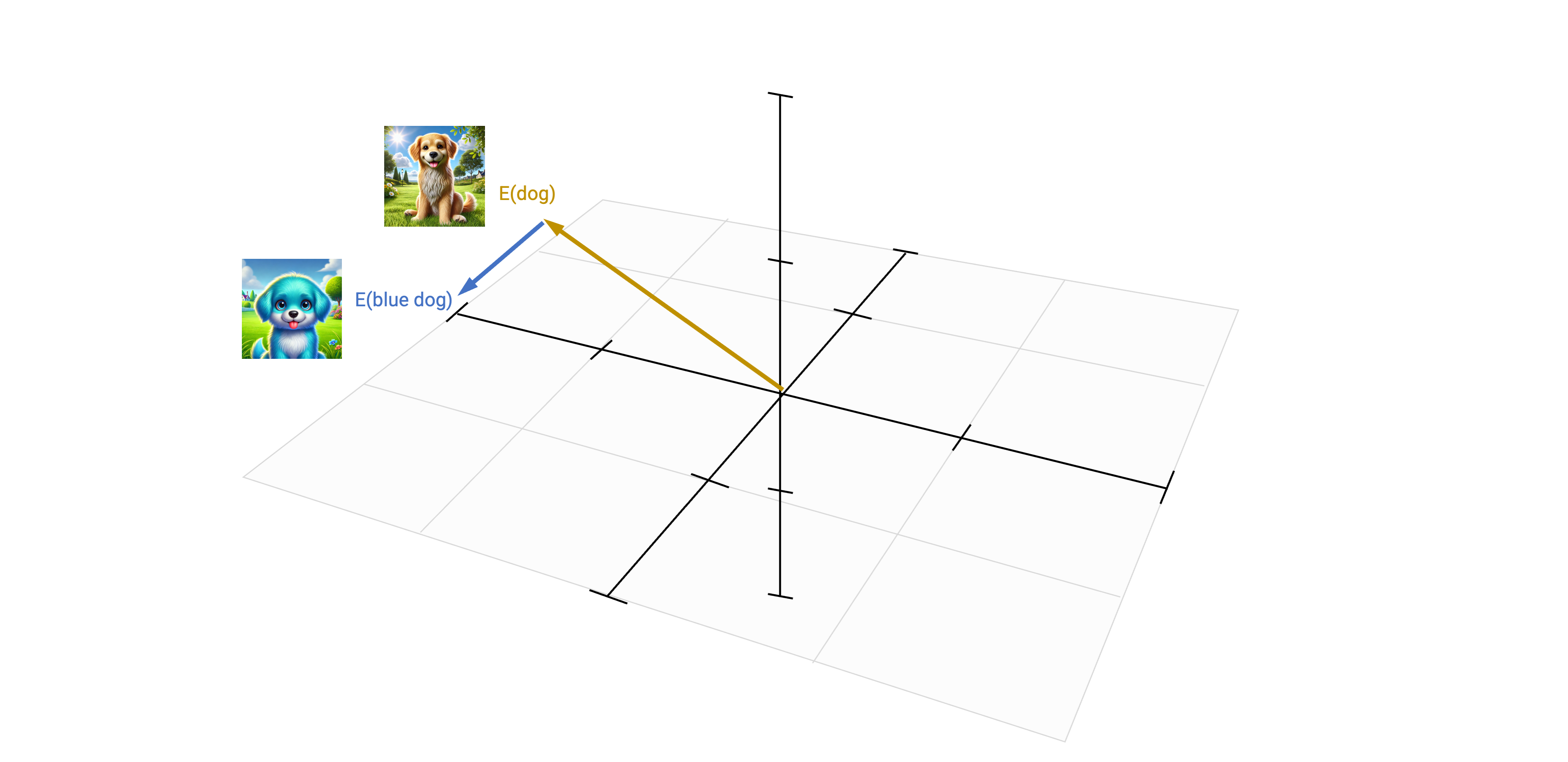

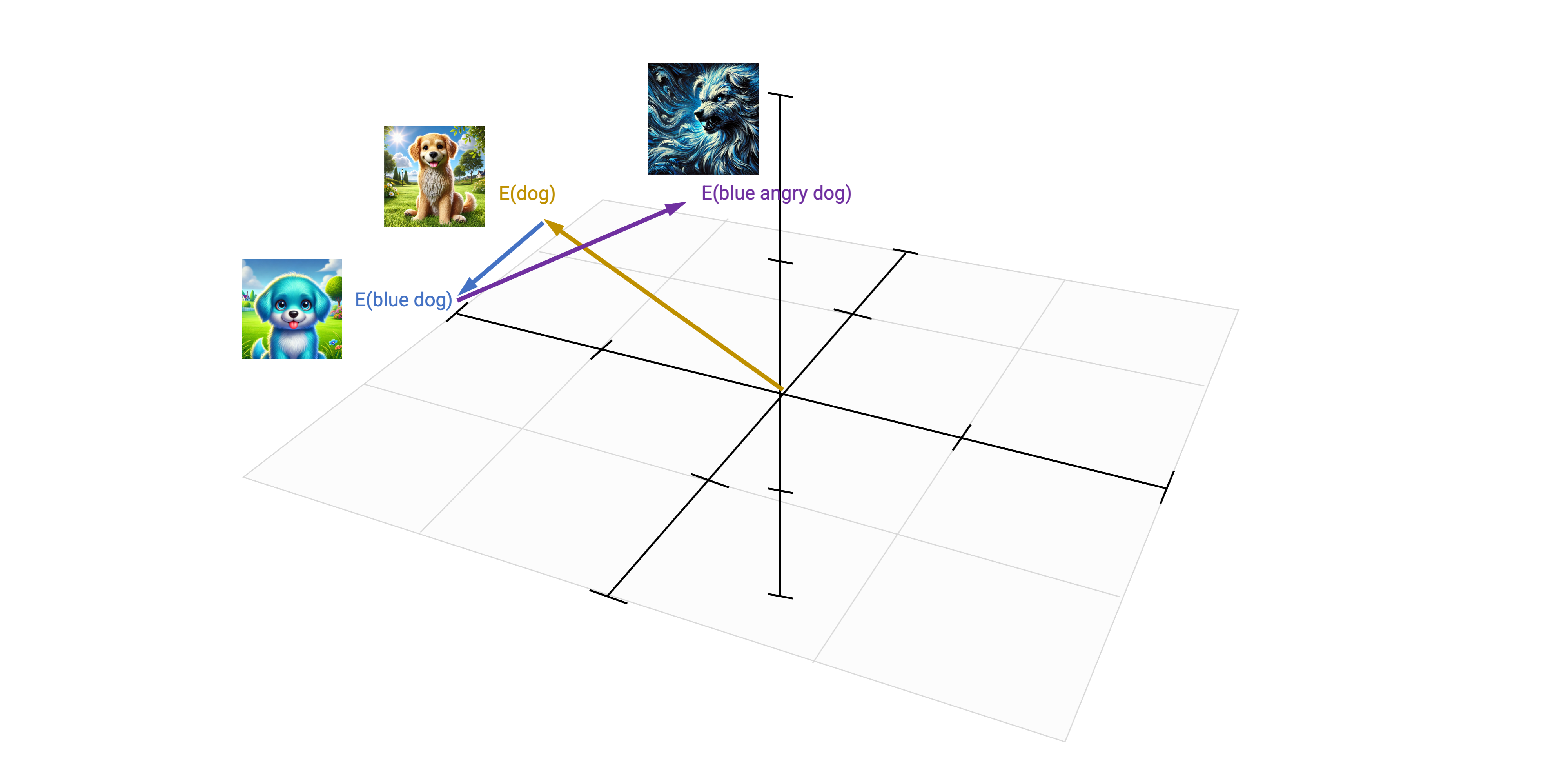

Core Idea: Better Encoding

Based on static word embeddings (which we discussed in the last lecture), the word “Harry” would always get the same embedding vector, even though we can clearly see that the name refers to different persons

The same is true for words like “mole” (which can refer to an animal or a little skin spot) or “model” (e.g., a fashion model vs. a computer model)

LLMs like GPT work so well, because their architecture allows them to encode additional information into a token’s embedding vector and take surrounding tokens into account

This way, they learn which name (e.g., Harry) refers to which person or which word (e.g., model) refers to which meaning

A large neural network

Source: Alammar, 2018 and 3Blue1Brown, 2024

Many Layers of Attention

Source: Alammar, 2018 and 3Blue1Brown, 2024


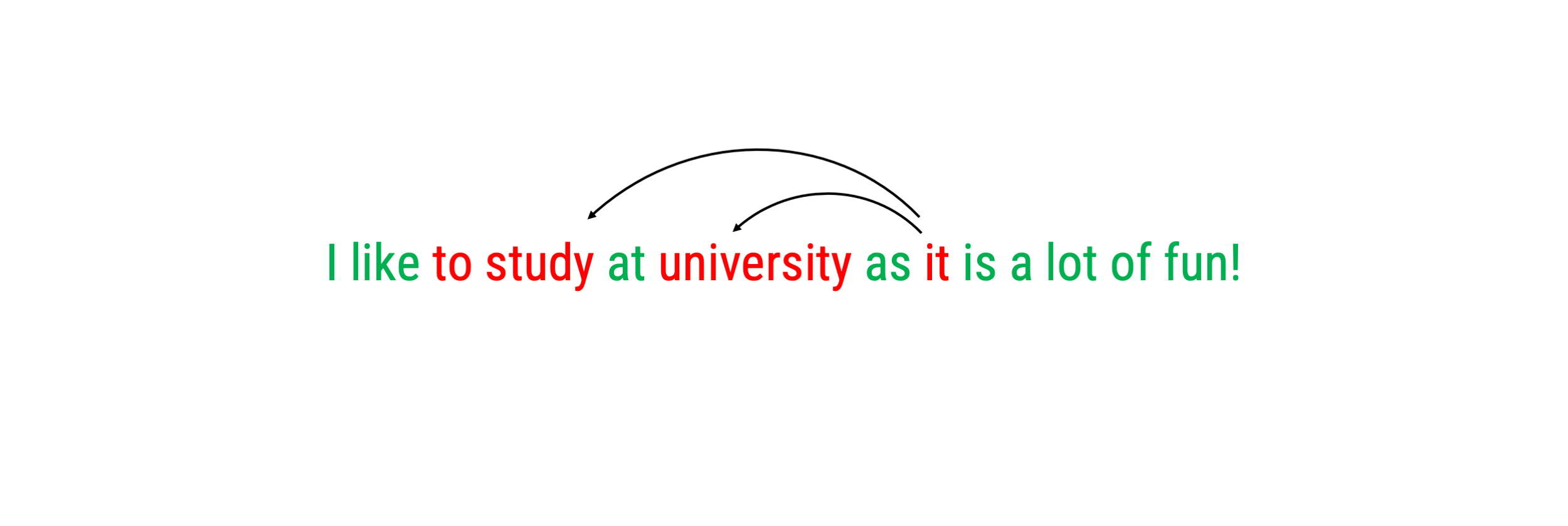

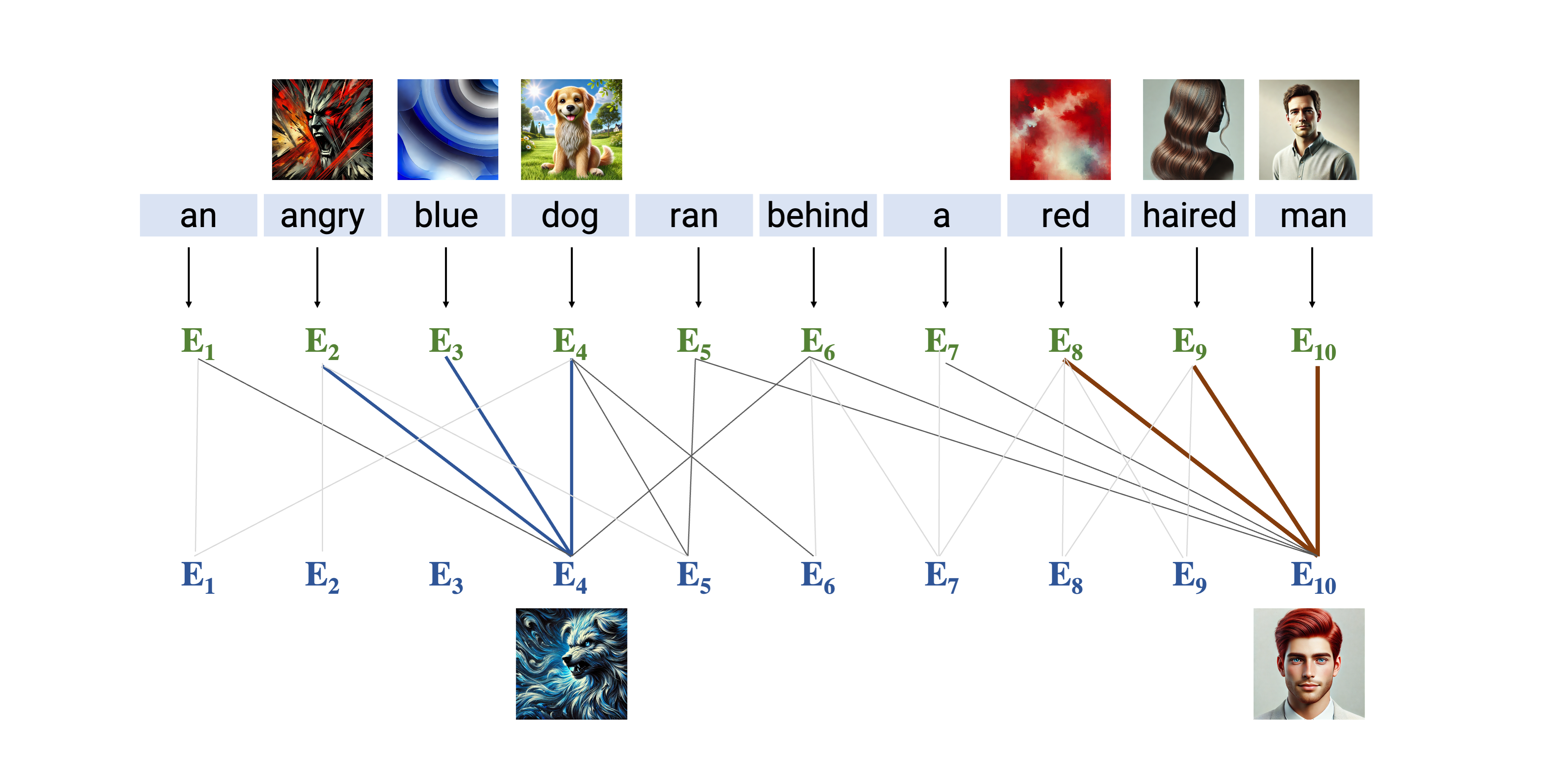

General idea behind Attention

In general terms, self-attention encodes how similar each word is to all the words in the sentence, including itself

Once the similarities are calculated, they are used to determine how the transformers should update the embeddings of each word
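The two steps described above (compute similarities, then use them to update the embeddings) can be sketched as scaled dot-product self-attention in base R. This is a minimal sketch under stated assumptions: the embeddings and the weight matrices `w_q`, `w_k`, and `w_v` are random toy values (in a real model they are learned), and only a single attention head is shown.

```r
# Minimal scaled dot-product self-attention in base R.
# Embeddings are random toy values; w_q, w_k, w_v would be learned in a real model.
softmax_rows <- function(m) {
  e <- exp(m - apply(m, 1, max))   # subtract row maxima for numerical stability
  e / rowSums(e)
}

self_attention <- function(x, w_q, w_k, w_v) {
  q <- x %*% w_q                           # queries
  k <- x %*% w_k                           # keys
  v <- x %*% w_v                           # values
  scores <- q %*% t(k) / sqrt(ncol(k))     # similarity of each word with every word
  weights <- softmax_rows(scores)          # each row sums to 1
  list(weights = weights, output = weights %*% v)  # output = updated embeddings
}

set.seed(42)
words <- c("harry", "met", "sally")
d <- 4
x <- matrix(rnorm(length(words) * d), nrow = length(words),
            dimnames = list(words, NULL))
w_q <- matrix(rnorm(d * d), d)
w_k <- matrix(rnorm(d * d), d)
w_v <- matrix(rnorm(d * d), d)

att <- self_attention(x, w_q, w_k, w_v)
round(att$weights, 2)
```

Each row of `weights` shows how strongly one word attends to every word in the sentence (including itself), and `output` contains the context-aware embeddings that are passed on to the next layer.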


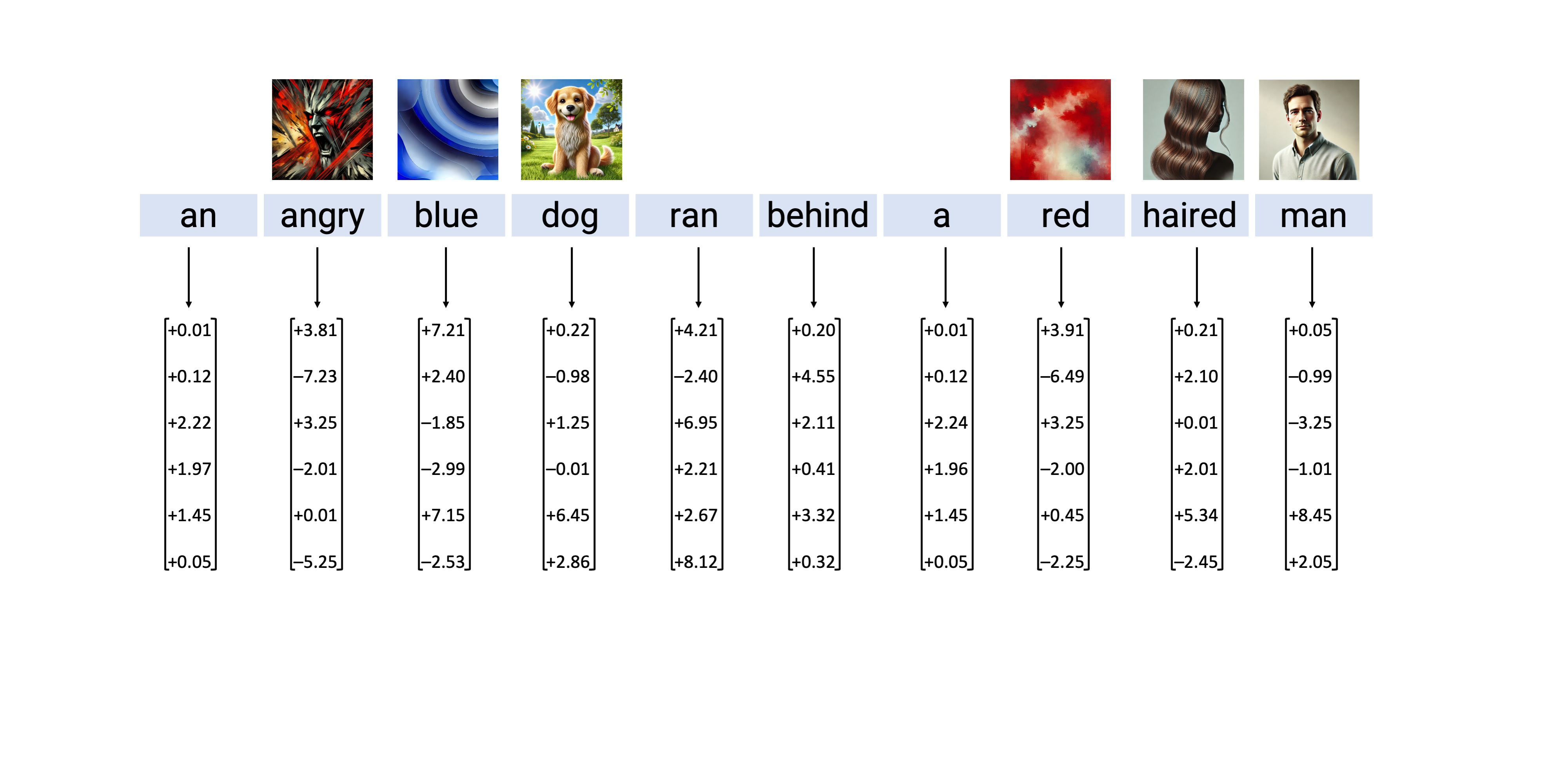

Attention in Detail


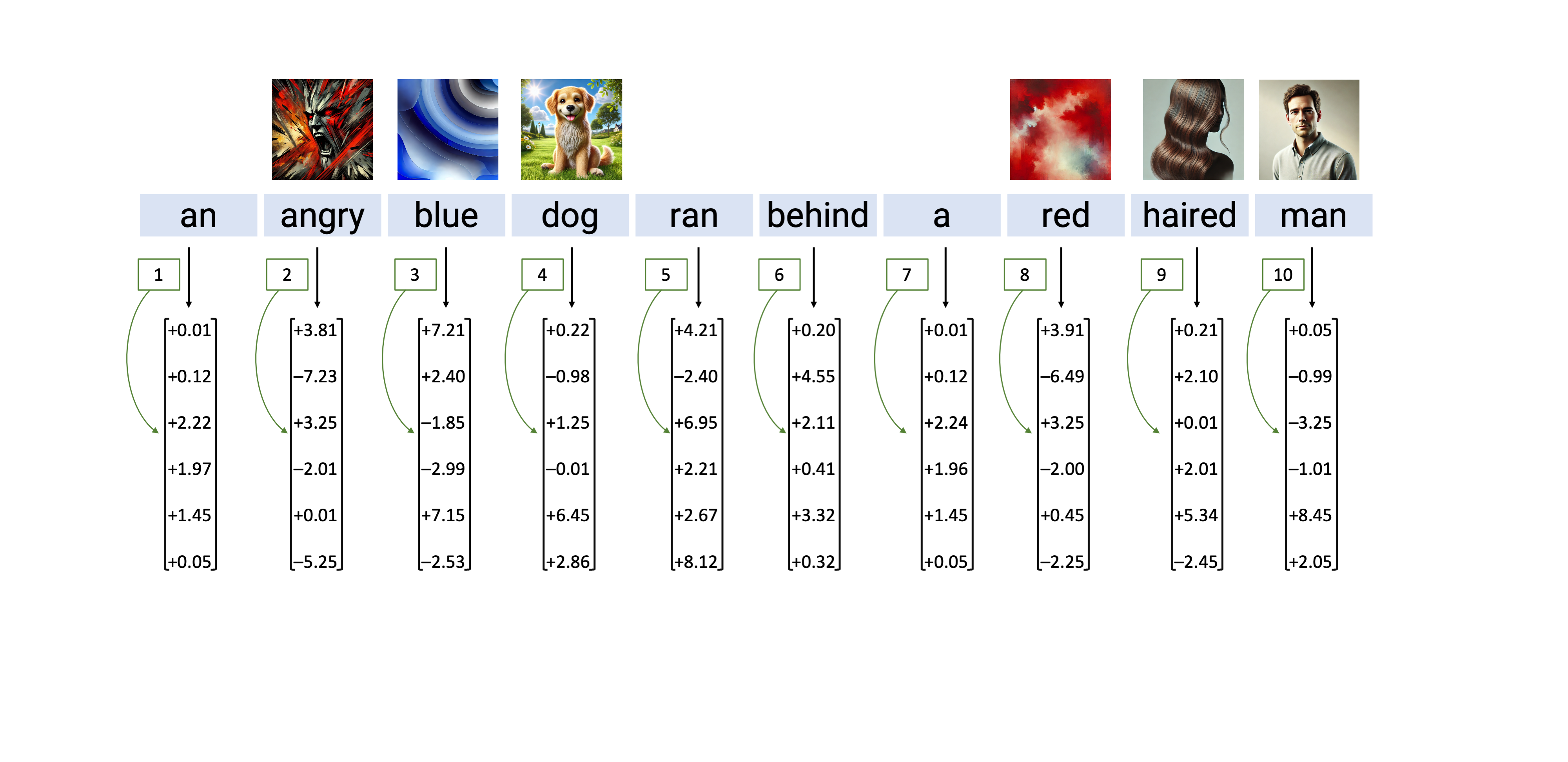

Attention in Detail: Static Embeddings

Inspiration: 3Blue1Brown, 2024

Attention in Detail: Positional encoding

Inspiration: 3Blue1Brown, 2024

Attention in Detail: Mechanism

Inspiration: 3Blue1Brown, 2024


Updating word embeddings in the k-dimensional space


Inspiration: 3Blue1Brown, 2024


Summary of the GPT Architecture

This was only a very short peek into the architecture of GPT (and to some degree also into large language models generally)

Decoder-only model designed for text generation

Based on static word embeddings that are updated via positional encoding and many attention layers that can even encode distant relationships between words and sentences

In principle, just a lot of matrix algebra: We are constantly updating a multitude of vectors that represent words and their meaning in the context of a sentence and the entire text

Now, we can ask how we can use these models for text classification tasks

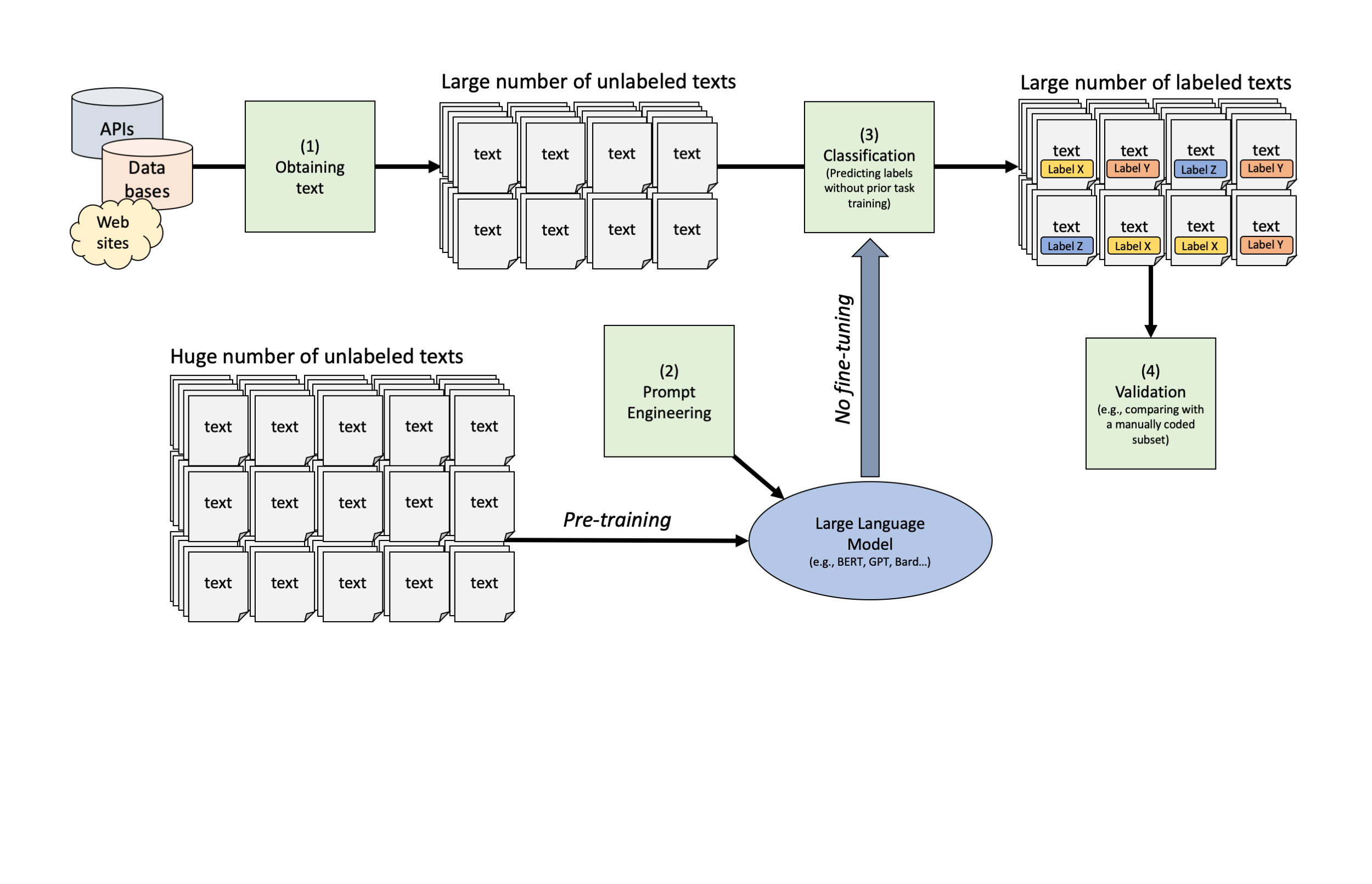

Using LLMs for Text Classification

Text Classification with Large Language Models

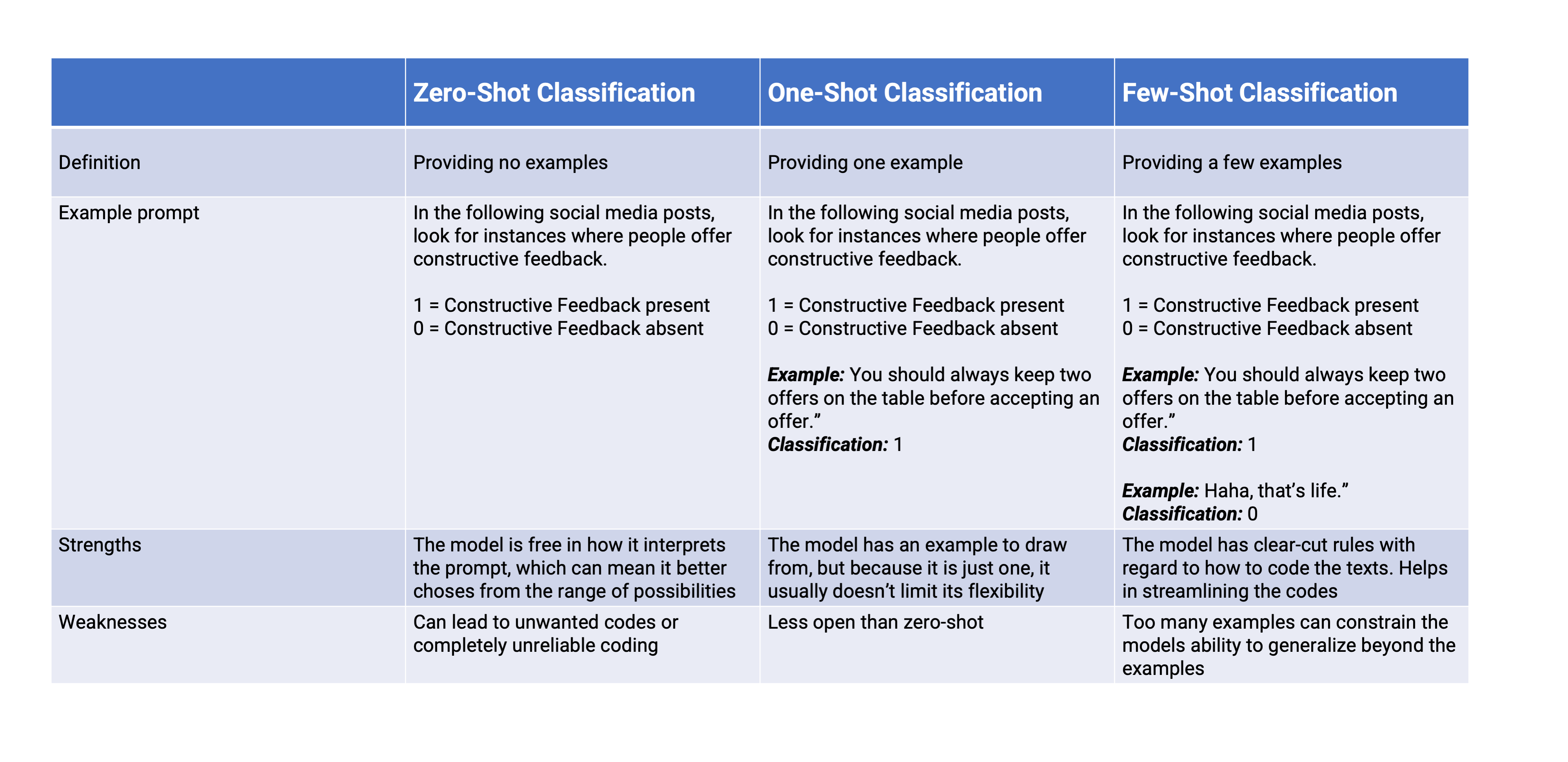

Zero-Shot, One-Shot, and Few-Shot Classification

Classic machine learning models (last lecture) are typically trained on a specific set of classes, and their performance is evaluated on the same set of classes during testing

LLMs have the ability to perform a task or make predictions on a set of classes that they have never seen or been explicitly trained on

In other words, the model can generalize its pre-trained knowledge to new, unseen tasks without training for those tasks

Depending on the type of prompt engineering, i.e., how we describe the task for the LLM, we differentiate three types of classifications:

- Zero-Shot: No examples are given

- One-Shot: One example is given

- Few-Shot: More than one example is given

It is not straight-forward which strategy works best for which task.

At times, zero-shot classification works well as the model is not too tied to the examples
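The difference between the three strategies lies only in how the prompt is built. The following sketch shows this in base R; the helper `build_prompt()` as well as the example abstracts and labels are invented for illustration and are not part of tidyllm.

```r
# Build zero-, one-, and few-shot prompts for the same task.
# The example abstracts and labels below are invented for illustration.
build_prompt <- function(abstract, examples = NULL) {
  task <- "Classify the abstract as Computer Science, Physics, or Statistics. Respond only with the field."
  shots <- character(0)
  if (!is.null(examples)) {
    shots <- sprintf("Abstract: %s\nField: %s", examples$text, examples$label)
  }
  paste(c(task, shots, sprintf("Abstract: %s\nField:", abstract)), collapse = "\n\n")
}

examples <- data.frame(
  text = c("We propose a faster sorting algorithm.", "We measure the decay of a muon."),
  label = c("Computer Science", "Physics"),
  stringsAsFactors = FALSE
)
new_abstract <- "We derive a new estimator for regression coefficients."

zero_shot <- build_prompt(new_abstract)                  # no examples
one_shot <- build_prompt(new_abstract, examples[1, ])    # one example
few_shot <- build_prompt(new_abstract, examples)         # several examples
cat(few_shot)
```

Any of the three strings could then be passed to a model as the prompt; which variant performs best remains an empirical question that has to be validated against hand-coded data.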

Overview of classification without training

How to work with LLMs

There are generally three ways in which we can work with LLMs:

- Access open source models via the Hugging Face API (only low rate limits per minute, but still useful)

- Access models such as GPT via their respective API (limited rates per minute, and costs per token)

- Download and use models via “ollama” on our own computer (requires some space and can be computationally intensive)

In this course, we are going to “play around” with GPT-3.5 and GPT-4 via the OpenAI API and (if possible) with small models downloaded via ollama

- The package tidyllm provides a straightforward workflow that integrates with the tidy-style analyses that we already know

- API access for OpenAI will be provided by the teachers

- A tutorial on how to install ollama will be provided

A small example in tidyllm

In the package tidyllm, we can create a prompt using the function `llm_message()`

We then pass this prompt to a chat and specify the model we want to use (this can be a local model, like llama, or a model on a server, like GPT)

library(tidyverse)

library(tidymodels)

library(glue)

library(tidyllm)

llm_message("What is this sentence about: To be, or not to be: that is the question.") |>

chat(ollama(.model = "llama3", .temperature = 0))

Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: What is this sentence about: To be, or not to be: that is the question.

--------------------------------------------------------------

assistant: A classic!

This sentence is from the opening of William Shakespeare's play "Hamlet", Act 3, Scene 1. It is a famous soliloquy spoken by Prince Hamlet himself.

The sentence is about the existential crisis and philosophical dilemma that Hamlet is grappling with. He is contemplating whether to take action against his uncle Claudius, who has murdered his father (Hamlet's king) and taken the throne for himself. Hamlet is torn between two options:

1. To be: to exist, to live, to take action, and potentially face the consequences of doing so.

2. Not to be: to not exist, to die, to avoid the troubles and uncertainties of life.

The sentence sets the tone for the rest of the play, which explores themes of mortality, morality, and the human condition.

--------------------------------------------------------------

Zero-Shot Classification: Prompt Engineering

In a zero-shot classification framework, we simply provide the task.

Additionally, we can provide potential answer options, which helps to streamline the subsequent coding (otherwise, we get full-text answers due to the next-token-prediction logic of LLMs!)
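Even with constrained answer options, a model may occasionally reply with extra text or an invalid code. A small helper can normalise the raw replies before validation. This is a hedged sketch in base R; `clean_reply()` is a hypothetical helper and the raw replies are made-up examples of what a model might return.

```r
# Turn raw LLM replies into valid codes; anything unexpected becomes NA.
# The raw replies are made-up examples of what a model might return.
clean_reply <- function(reply, valid_codes = 1:3) {
  digits <- regmatches(reply, regexpr("[0-9]+", reply))  # first number in the reply
  code <- if (length(digits) == 0) NA_integer_ else as.integer(digits)
  if (!code %in% valid_codes) NA_integer_ else code
}

raw <- c("1", " 2 ", "Code: 3", "I think this is Physics.", "7")
codes <- vapply(raw, clean_reply, integer(1), USE.NAMES = FALSE)
codes

# Share of replies that could not be mapped to a code
mean(is.na(codes))
```

Reporting the share of unusable replies alongside the performance scores makes it transparent how often the model did not follow the instructions.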

set.seed(42)

# We create a small test data set

test_data <- science_data |>

sample_n(size = 100)

# We create a codebook that includes all texts.

codebook <- glue("Identify the discipline based on this abstract: {abstract}

Pick one of the following numerical codes from this list.

Respond only with the code!

1 = Computer Science

2 = Physics

3 = Statistics",

abstract = test_data$text)

# Create a list of llm-message prompts

classification_task <- map(codebook, llm_message)

- We create a list that already contains all prompts with all texts

Zero-Shot Classification: Sequential Prompting

Next, we create a function that sends the message via the API to the relevant model

Then, we use the function pmap_dfr() to “map” this function across our list of prompts

# Create a function to map across the prompts

classify_sequential_llama <- function(texts, message){

raw_code <- message |>

chat(ollama(.model = "llama3", .temperature = .0)) |>

get_reply()

tibble(text = texts, label = raw_code)

}

# Run the classification by sequentially prompting LLama3

results_llama <- tibble(texts = test_data$text,

message = classification_task) |>

pmap_dfr(classify_sequential_llama, .progress = T)

As a result, we get a data set with the relevant code.

# A tibble: 6 × 2

text label

<chr> <chr>

1 "Optimal stopping via reinforced regression In this note we propose a n… 1

2 "Traces of surfactants can severely limit the drag reduction of superhy… 2

3 "A Unified Strouhal-Reynolds Number Relationship for Laminar Vortex Str… 2

4 "Spatio-Temporal Backpropagation for Training High-performance Spiking … 1

5 "Well quasi-orders and the functional interpretation The purpose of thi… 1

6 "Concentration of Multilinear Functions of the Ising Model with Applica… 2

Validation of our Classification with Llama3

We can use the same functions from the

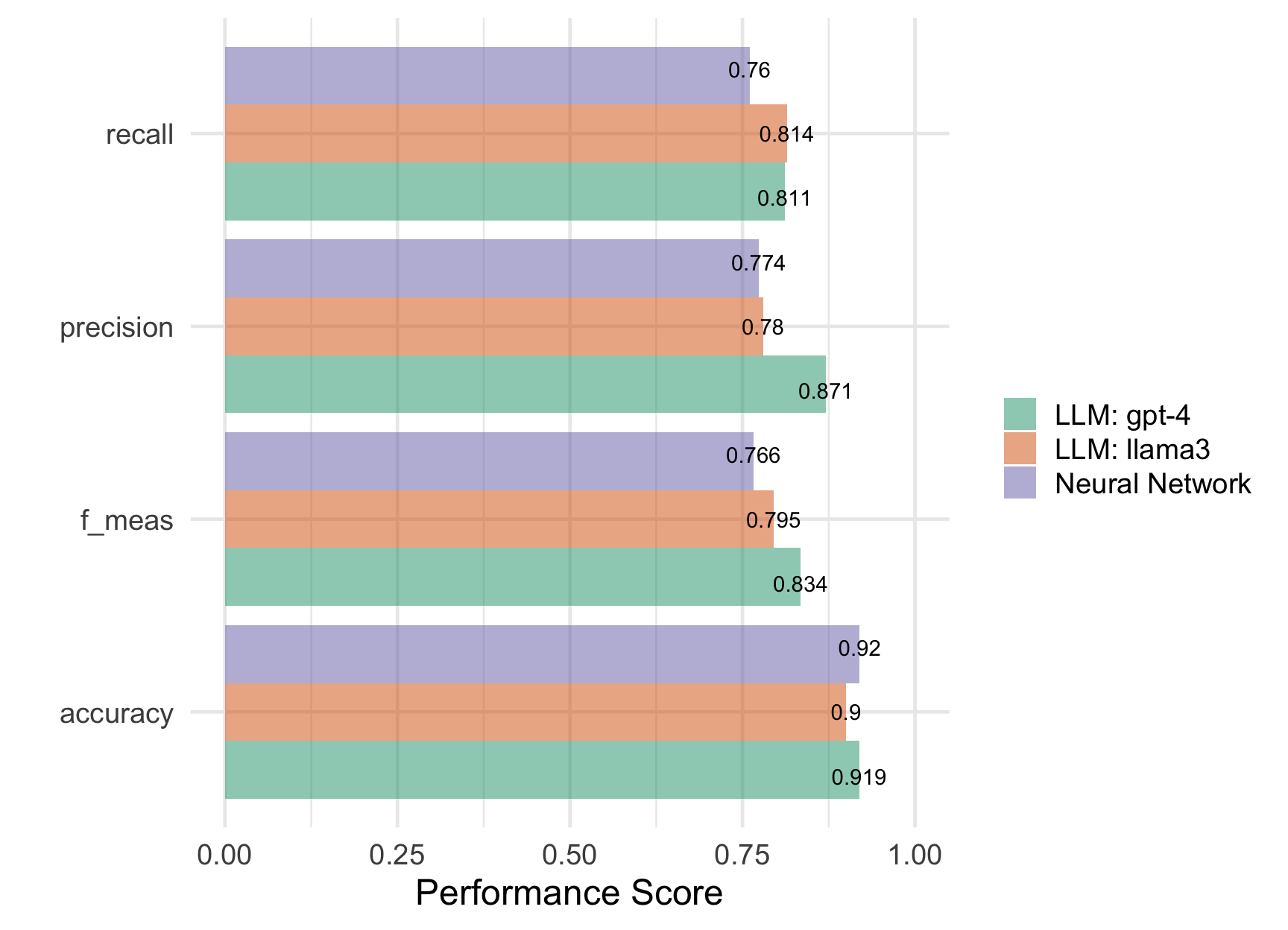

tidymodelspackage to get our performance scoresLlama3 actually does amazingly well on this task without any prior training!

# Create predict table

predict_llama <- test_data |>

bind_cols(results_llama |> select(predicted = label)) |>

mutate(truth = factor(label),

predicted = factor(case_when(predicted == 1 ~ "computer science",

predicted == 2 ~ "physics",

predicted == 3 ~ "statistics")))

# Define performance scores

class_metrics <- metric_set(accuracy, precision, recall, f_meas)

# Check performance

predict_llama |>

class_metrics(truth = truth, estimate = predicted)

Testing with other models

Our neural network from last week:

# Create recipe

rec <- recipe(label ~ text,

data = science_data) |>

step_tokenize(text,

options = list(strip_punct = T,

strip_numeric = T)) |>

step_stopwords(text, language = "en") |>

step_tokenfilter(text, min_times = 20,

max_tokens = 1000) |>

step_tf(all_predictors()) |>

step_normalize(all_predictors())

# Create workflow

ann_workflow <- workflow() |>

add_recipe(rec) |>

add_model(nnet_spec)

# Create predict table

predict_nn <- predict(m_ann, test_data) |>

bind_cols(test_data) |>

mutate(truth = factor(label),

predicted = .pred_class)

The same classification with GPT-4:

# Create a function to map across the prompts

classify_sequential_gpt4 <- function(texts,message){

raw_code <- message |>

chat(openai(.model = "gpt-4",

.temperature = .0)) |>

get_reply()

tibble(text = texts, label = raw_code)

}

# Run commands

results_gpt <- tibble(texts = test_data$text,

message = classification_task) |>

pmap_dfr(classify_sequential_gpt4, .progress = T)

# Create predict table

predict_gpt <- test_data |>

bind_cols(results_gpt |>

select(predicted = label)) |>

mutate(truth = factor(label),

predicted = parse_number(predicted),

predicted = factor(case_when(predicted == 1 ~ "computer science",

predicted== 2 ~ "physics",

predicted == 3 ~ "statistics")))

Comparison

As we can see, both LLMs perform almost as well as our neural network; GPT-4 does even better, despite not having been trained on any of the data!

bind_rows(

predict_llama |>

class_metrics(truth = truth,

estimate = predicted) |>

mutate(model = "LLM: llama3"),

predict_nn |>

class_metrics(truth = truth,

estimate = predicted) |>

mutate(model = "Neural Network"),

predict_gpt |>

class_metrics(truth = truth,

estimate = predicted) |>

mutate(model = "LLM: gpt-4")

) |>

ggplot(aes(x = .metric, y = .estimate,

fill = model)) +

geom_col(position = position_dodge(), alpha = .5) +

geom_text(aes(label = round(.estimate, 3)),

position = position_dodge(width = 1)) +

ylim(0, 1) +

coord_flip() +

scale_fill_brewer(palette = "Dark2") +

theme_minimal(base_size = 18) +

labs(y = "Performance Score", x = "", fill = "")
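To make the compared scores less of a black box, the following sketch computes accuracy as well as macro-averaged precision, recall, and F1 by hand in base R. The helper `macro_metrics()` and the tiny `truth` and `predicted` vectors are invented for illustration; macro averaging (the unweighted mean across classes) should correspond to what yardstick applies to multiclass problems by default, but this is stated here as an assumption.

```r
# Accuracy and macro-averaged precision, recall, and F1 computed by hand.
# truth and predicted are tiny invented vectors, not results from the slides.
macro_metrics <- function(truth, predicted) {
  classes <- levels(truth)
  per_class <- sapply(classes, function(cl) {
    tp <- sum(predicted == cl & truth == cl)
    precision <- tp / sum(predicted == cl)   # of all predicted cl, how many are correct
    recall <- tp / sum(truth == cl)          # of all true cl, how many were found
    c(precision = precision, recall = recall,
      f1 = 2 * precision * recall / (precision + recall))
  })
  c(accuracy = mean(truth == predicted), rowMeans(per_class))
}

lv <- c("computer science", "physics", "statistics")
truth <- factor(c("physics", "physics", "statistics",
                  "computer science", "statistics", "computer science"), levels = lv)
predicted <- factor(c("physics", "statistics", "statistics",
                      "computer science", "statistics", "physics"), levels = lv)

round(macro_metrics(truth, predicted), 3)
```

Note that both factors share the same levels; if a model never predicts one of the classes, the levels should still be set explicitly so that the class is not silently dropped from the comparison.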

Examples in the literature

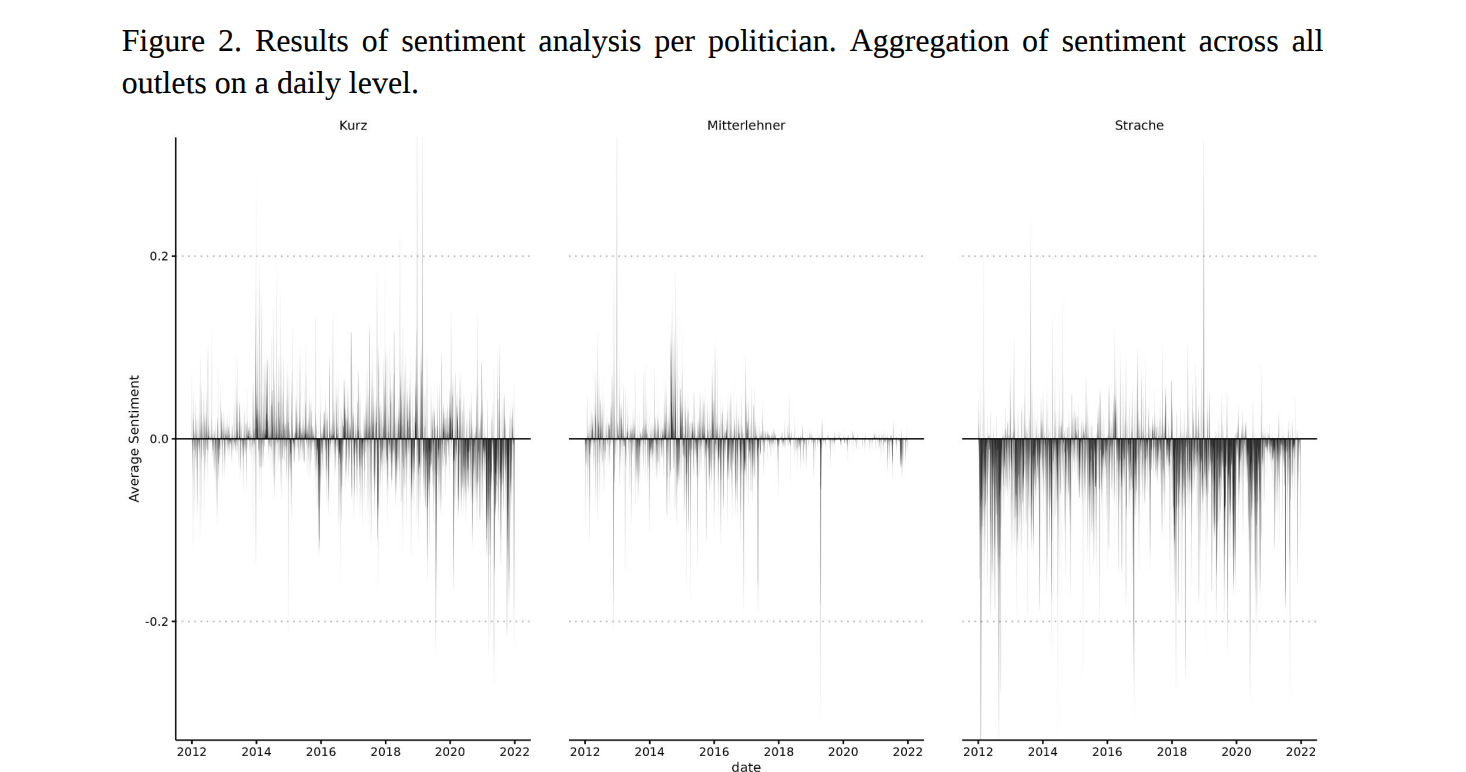

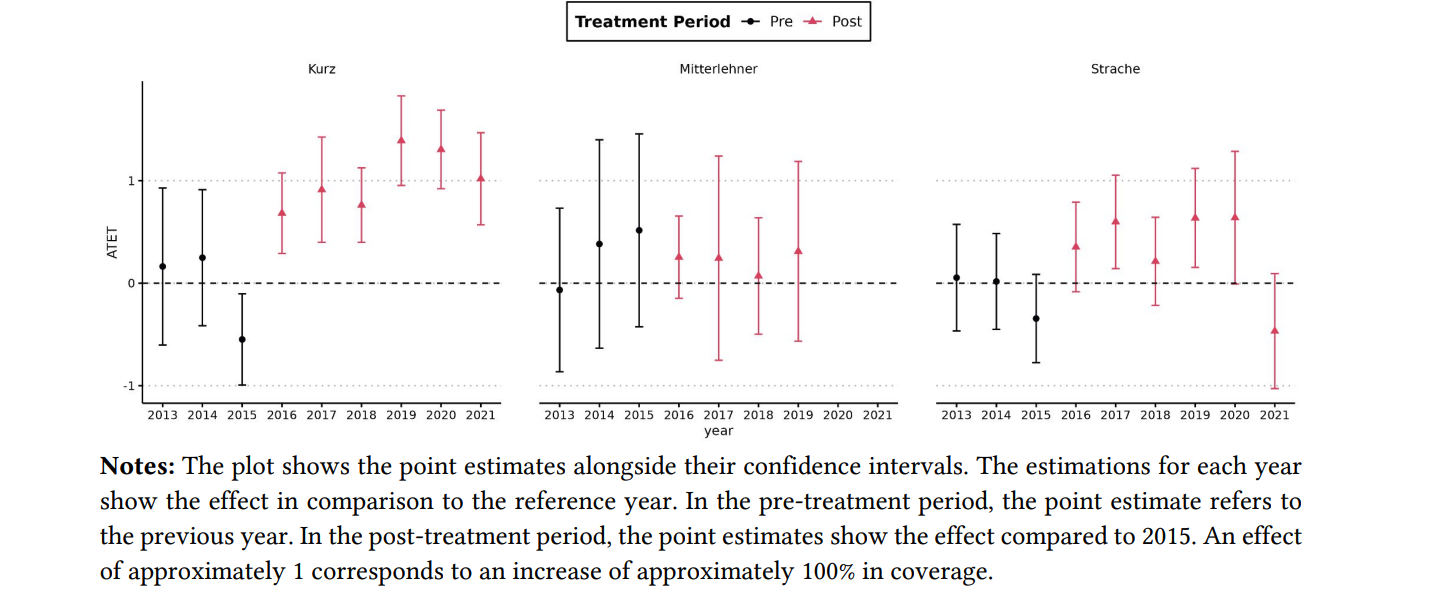

Balluff et al. (2023) investigated a recent case of media capture, a mutually corrupting relationship between political actors and media organizations.

This case involves a former Austrian chancellor who allegedly colluded with a tabloid newspaper to receive better news coverage in exchange for increased ad placements by government institutions.

They implemented automated content analysis (using BERT) of political news articles from six prominent Austrian news outlets spanning 2012 to 2021 (n = 188,203) and adopted a difference-in-differences approach to scrutinize political actors’ visibility and favorability in news coverage for patterns indicative of the alleged serious breach of professional political and journalistic norms.

Methods

Used a German-language GottBERT model (Scheible et al., 2020) that they further fine-tuned for the task using publicly available data from the AUTNES Manual Content Analysis of the Media Coverage 2017 and 2019 (Galyga et al., 2022; Litvyak et al., 2022c)

Comparatively difficult task, but were able to reach a satisfactory F1-Score of 0.77 (precision = 0.77, recall = 0.77).

Findings

The findings indicate a substantial increase in the news coverage of the former Austrian chancellor within the news outlet that is alleged to have received bribes.

In contrast, several other political actors did not experience similar shifts in visibility nor are similar patterns identified in other media outlets.

Summary and conclusion

A Look Back at the Chronology of NLP


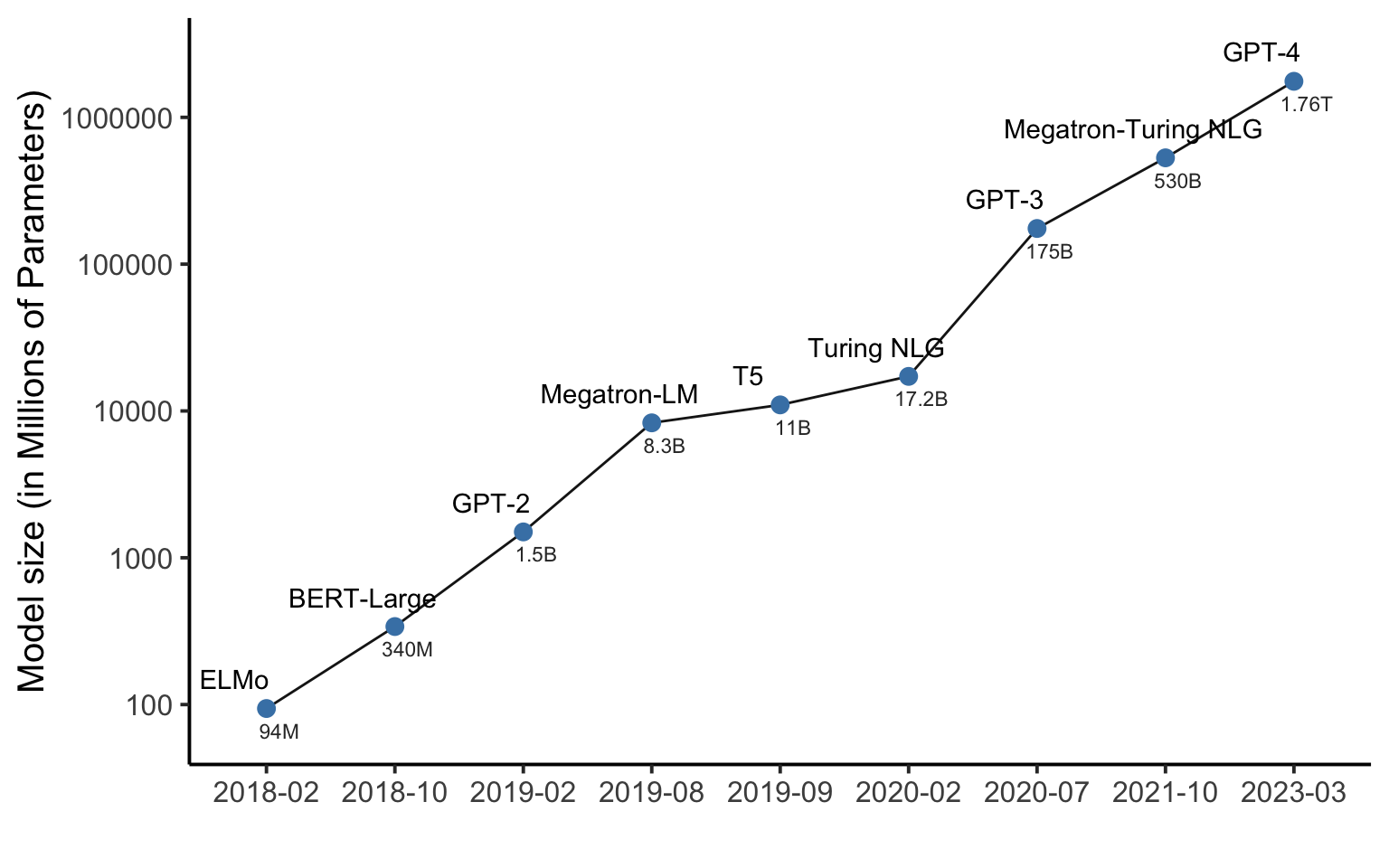

Explosion in model size?

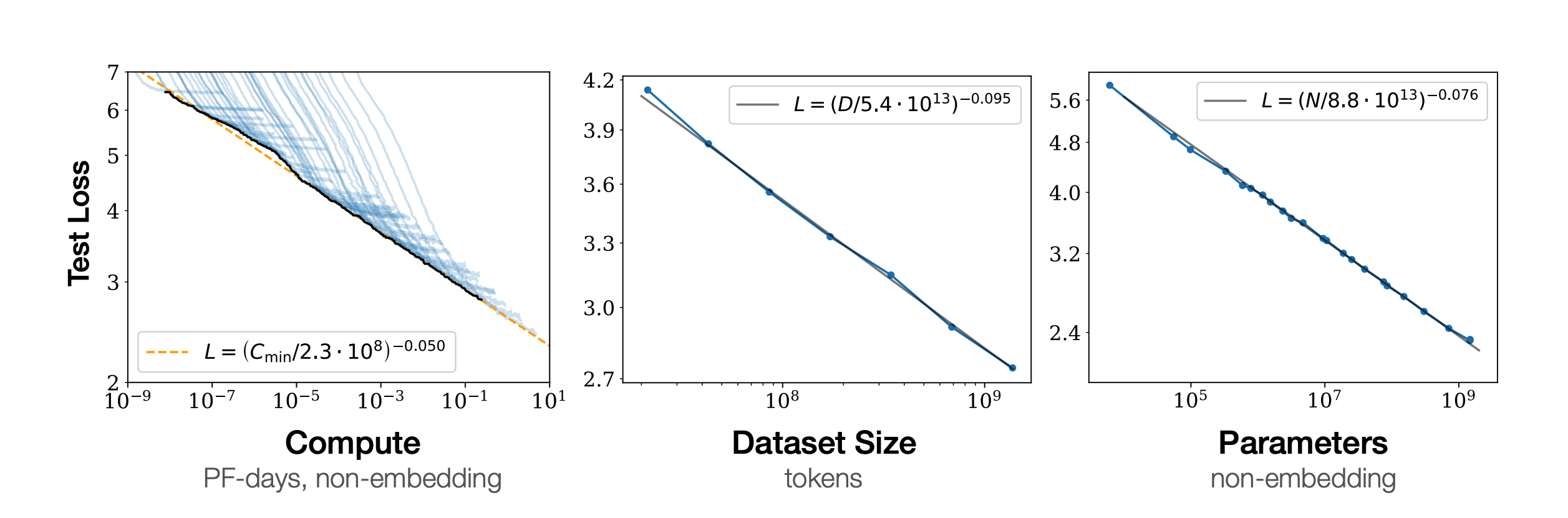

Performance depends strongly on scale

Kaplan et al., 2020

Performance depends strongly on scale, weakly on model shape

Model performance is mostly related to scale, which consists of three factors:

- the number of model parameters (excluding embeddings)

- the size of the dataset

- the amount of compute used for training

Within reasonable limits, performance depends very weakly on other architectural hyperparameters such as depth vs. width
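The relationship between scale and performance reported by Kaplan et al. (2020) takes the form of a power law, which can be sketched as follows. The function `power_law_loss()` is a hypothetical helper, and the default values for `n_c` and `alpha` are illustrative values in the range reported in the paper for the number of parameters; they should not be read as exact.

```r
# Power-law relation between loss and scale, L(N) = (N_c / N)^alpha.
# alpha and n_c are illustrative values in the range reported by Kaplan et al. (2020).
power_law_loss <- function(n, n_c = 8.8e13, alpha = 0.076) {
  (n_c / n)^alpha
}

n_params <- 10^(8:11)   # 100 million to 100 billion parameters
loss <- power_law_loss(n_params)
data.frame(n_params, loss = round(loss, 3))

# Each tenfold increase in parameters multiplies the loss by the same factor
loss[-1] / loss[-length(loss)]
```

The constant ratio in the last line is what makes the curve a straight line on a log-log plot: gains from scale are steady, but each further improvement requires an order of magnitude more parameters.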

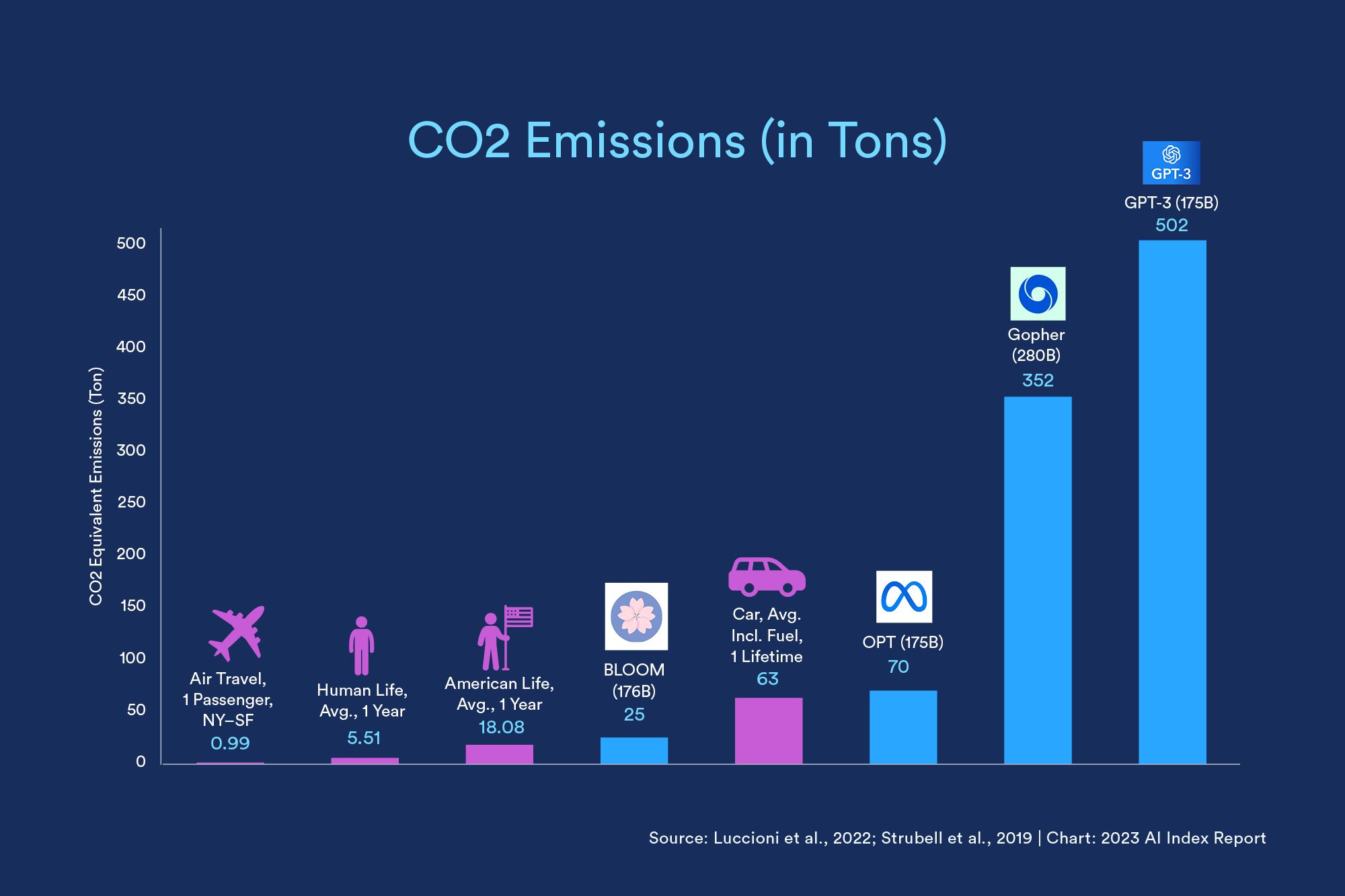

Environmental Impact

Ethical considerations

Training large language models requires significant computational resources, contributing to a substantial carbon footprint.

LLMs can inherit and perpetuate biases present in their training data, which can result in the generation of biased or unfair content, reflecting and potentially amplifying societal biases and stereotypes.

Developers and users must be aware of the potential for bias and take steps to mitigate it during model training and deployment.

The fact that some LLMs are developed, trained, and employed behind closed doors causes yet another ethical dilemma in using them!

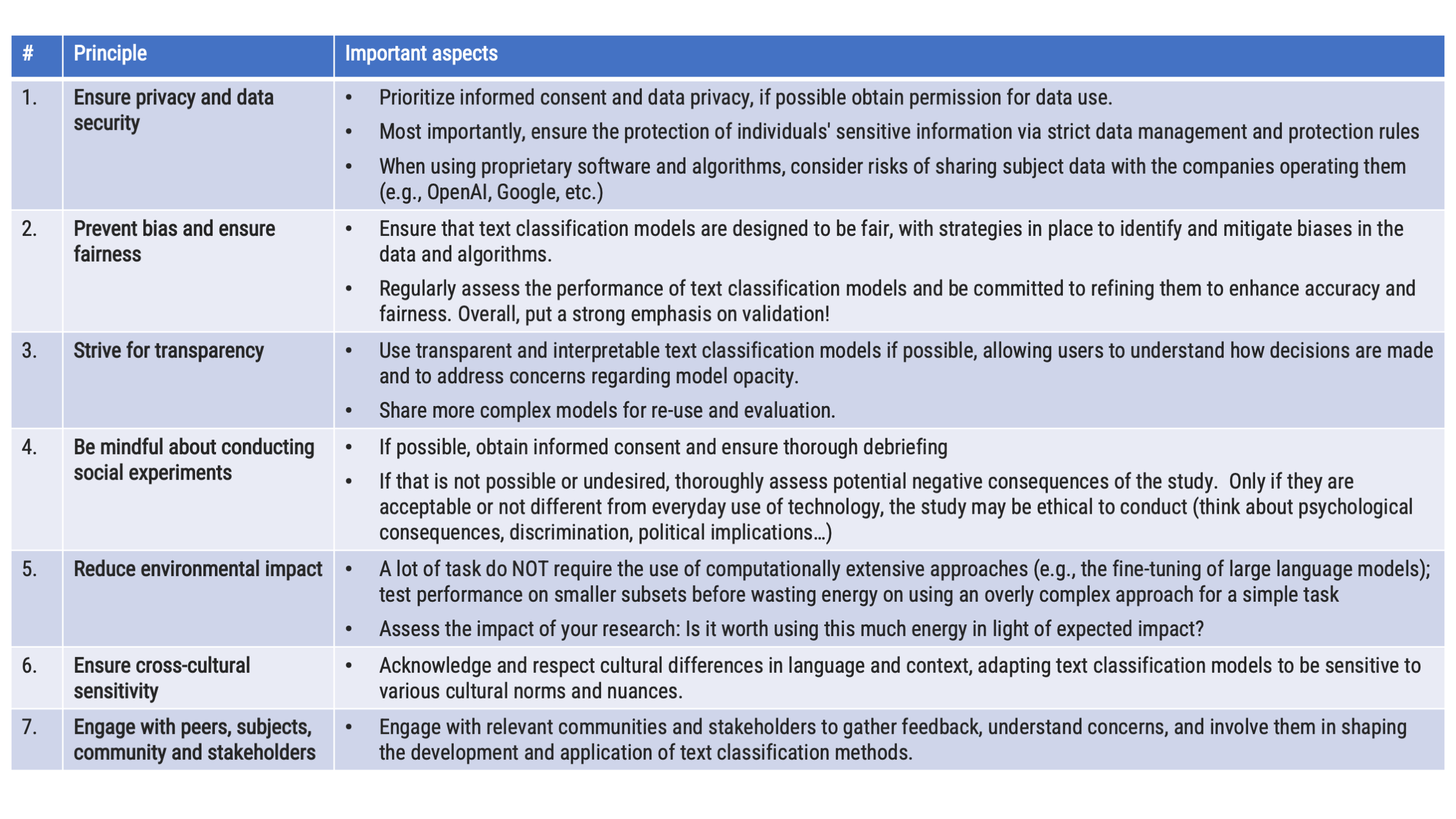

Reminder: Guidelines

Conclusion

Advancements in NLP and AI are fast-paced and difficult to keep up with

LLMs promise immense potential for communication research

Yet, large language models can contain biases or even hallucinate!

- Validation, validation, validation!

Also: We already see that more and more online content is AI-generated. What does it mean if, in the future, LLMs are trained on their own content?

And it doesn’t stop here…

- Large language models like “llava” can also identify and describe images…

llm_message("Describe this picture? Can you guess where it was made?",

.imagefile = "img/england.jpeg") |>

chat(ollama(.model = "llava", .temperature = 0))

Message History:

system: You are a helpful assistant

--------------------------------------------------------------

user: Describe this picture? Can you guess where it was made?

-> Attached Media Files: england.jpeg

--------------------------------------------------------------

assistant: The image shows a scene from London, England. There is a blue taxi cab in the foreground with the words "Look Right" on its side, indicating that drivers should look to their right when approaching an intersection, which is standard practice in the United Kingdom due to driving on the left side of the road. In the background, there's a famous landmark known as Big Ben, which is actually the nickname for the Great Bell housed within the clock tower at the north end of the Palace of Westminster, and the Elizabeth Tower, which houses Big Ben, is visible in the distance. The sky is overcast, suggesting it might be a cool or cloudy day. There are people walking on the sidewalks, and the overall atmosphere suggests a typical day in London with some tourists around.

--------------------------------------------------------------

Thank you for your attention!

Required Reading

Kroon, A., Welbers, K., Trilling, D., & van Atteveldt, W. (2023). Advancing Automated Content Analysis for a New Era of Media Effects Research: The Key Role of Transfer Learning. Communication Methods and Measures, 1-21

(available on Canvas)

References

Alammar, J. (2018). The illustrated Transformer. Retrieved from: https://jalammar.github.io/illustrated-transformer/

Andrich, A., Bachl, M., & Domahidi, E. (2023). Goodbye, Gender Stereotypes? Trait Attributions to Politicians in 11 Years of News Coverage. Journalism & Mass Communication Quarterly, 100(3), 473-497. https://doi.org/10.1177/10776990221142248

Balluff, P., Eberl, J., Oberhänsli, S. J., Bernhard, J., Boomgaarden, H. G., Fahr, A., & Huber, M. (2023, September 15). The Austrian Political Advertisement Scandal: Searching for Patterns of “Journalism for Sale”. https://doi.org/10.31235/osf.io/m5qx4

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

Kaplan, J., McCandlish, S., Henighan, T., Brown, T. B., Chess, B., Child, R., … & Amodei, D. (2020). Scaling laws for neural language models. arXiv preprint arXiv:2001.08361.

Kroon, A., Welbers, K., Trilling, D., & van Atteveldt, W. (2023). Advancing Automated Content Analysis for a New Era of Media Effects Research: The Key Role of Transfer Learning. Communication Methods and Measures, 1-21

Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M. A., Lacroix, T., … & Lample, G. (2023). Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., … & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

Example Exam Question (Multiple Choice)

How are word embeddings learned?

A. By assigning random numerical values to each word

B. By analyzing the pronunciation of words

C. By scanning the context of each word in a large corpus of documents

D. By counting the frequency of words in a given text


Example Exam Question (Open Format)

What does zero-shot learning refer to in the context of large language models?

In the context of large language models, zero-shot learning refers to the ability of a model to perform a task or make predictions on a set of classes or concepts that it has never seen or been explicitly trained on. Essentially, the model can generalize its knowledge to new, unseen tasks without specific examples or training data for those tasks.

In traditional machine learning, models are typically trained on a specific set of classes, and their performance is evaluated on the same set of classes during testing. Zero-shot learning extends this capability by allowing the model to handle tasks or categories that were not part of its training set.

In the case of large language models like GPT-3, which is trained on a diverse range of internet text, zero-shot learning means the model can understand and generate relevant responses for queries or prompts related to concepts it hasn’t been explicitly trained on. This is achieved through the model’s ability to capture and generalize information from the vast and varied data it has been exposed to during training.

Computational Analysis of Digital Communication